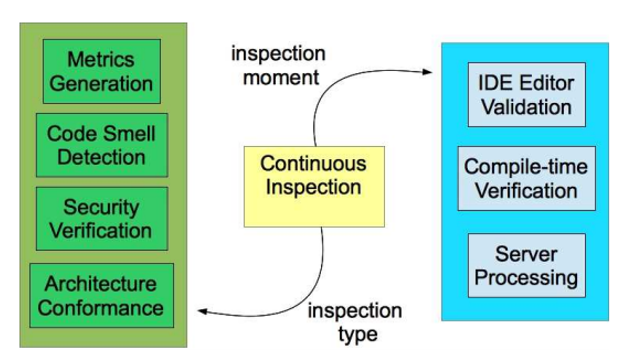

Continuous Inspection is a process for detecting possible bugs in source code that may arise from poor code quality or a lack of effective peer code review.

The continuous integration server has access to the application source code and all the resources necessary to build it. As part of the build, static analysis tools are invoked to evaluate the quality and other aspects of the source code.

Problems in the code can be detected early, so they can be corrected right away or planned for in upcoming iterations.

The team is always aware of the current state of code quality, which gives them confidence in the software they are developing.

Team members can learn from the problems detected by the tools. Indeed, the tools typically display messages that give a clear rationale for each verification.

Developers are encouraged to write good-quality code, since they know that it is valued by the team. Teams tend to strive for ever-better quality indicators, especially when those indicators are displayed on a dashboard that is visible to other teams across projects.

Project managers, contracting organizations, and governance agents in general can use the metrics generated by the tools as key performance indicators to evaluate the quality of the code being delivered by the development team.

Reports generated by widely used and trusted tools help make the case to project managers and product owners for allocating effort to the refactorings required to fix violations. The three code analysis techniques described below (peer code reviews, pair programming, and static code analysis), however, are only marginally useful unless rigorously applied: their benefits fade over time without proactive reinforcement.

Peer code reviews

Peer code reviews are performed by humans, who can be emotional. Colleagues may be reluctant to tell one another when their code stinks, and people who collaborate in the same work environment tend to review one another’s work subjectively. Code reviews also carry a time cost, even in the most informal environments.

Pair Programming

Pair programming (sometimes referred to as peer programming) is an agile software development technique in which two programmers work together at one workstation. One, the driver, writes code while the other, the observer (also called the pointer or navigator), reviews each line of code as it is typed in.

Static code analysis

Static source code analyzers attempt to find code sequences that, when executed, could result in buffer overflows, resource leaks, or many other security and reliability problems. Source code analyzers are effective at locating a significant class of flaws that are not detected by compilers during standard builds and often go undetected during runtime testing as well.

An analyzer looks for many types of flaws, in particular bugs that compile without any error or warning. The following are some of the more common errors that a modern static source code analyzer detects:

- Potential NULL pointer dereferences

- Access beyond an allocated area, otherwise known as a buffer overflow

- Writes to potentially read-only memory

- Reads of potentially uninitialized objects

- Resource leaks (e.g., memory leaks and file descriptor leaks)

- Use of memory that has already been deallocated

- Out-of-scope memory usage (e.g., returning the address of an automatic variable from a subroutine)

- Failure to set a return value from a subroutine

- Buffer and array underflows
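The list above is written with C and C++ in mind, but the underlying idea carries over to other languages. As a rough illustration of one item, resource leaks, the following minimal Python sketch (Python 3.9 or later) walks a module's syntax tree with the standard `ast` module and flags `open()` calls that are not used directly as a `with` context manager. The function name `find_unmanaged_open_calls` is hypothetical, and the check is deliberately naive. It reports false positives, such as a file that is closed manually in a `finally` block, and it misses leaks that real analyzers catch by tracking values across control-flow paths.

```python
import ast


def find_unmanaged_open_calls(source: str) -> list[int]:
    """Return line numbers of open() calls not used directly in a `with` statement.

    A toy check for one class of resource leak: a file opened outside a
    context manager may never be closed if an exception occurs.
    """
    tree = ast.parse(source)

    # First pass: remember every call that is a `with` context expression.
    managed = set()
    for node in ast.walk(tree):
        if isinstance(node, (ast.With, ast.AsyncWith)):
            for item in node.items:
                managed.add(item.context_expr)

    # Second pass: report open() calls that were not seen in the first pass.
    findings = []
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id == "open"
            and node not in managed
        ):
            findings.append(node.lineno)
    return sorted(findings)


sample = """
def leaky(path):
    f = open(path)
    return f.read()

def safe(path):
    with open(path) as f:
        return f.read()
"""
print(find_unmanaged_open_calls(sample))  # [3]
```

Because the analysis only parses the code and never executes it, it finds the leak without needing a file on disk, which is the defining property of static analysis.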

Advantages of Continuous Inspection

Awareness:

When developers focus on introducing new features, it is common for them to ignore existing design decisions. This is especially true when the developer making the change is not aware of the details of the architecture, for example a new team member.

Detectability:

In a large project, it is sometimes hard to evaluate code quality and whether the architecture is being followed throughout the source code. The code base may grow very large and span different technologies, making it difficult to evaluate and detect violations of the architecture.

Early Diagnosis:

Problems in the architecture are easier to address when they are detected early. One reason is that architecture issues that make their way into the source code tend to propagate through copy-and-paste programming.

Software Qualities:

On the one hand, we would like to ensure that the quality attributes (e.g., modifiability, performance, portability) resulting from design decisions in the architecture are preserved in the implementation; on the other hand, as new requirements come in, it is important to adapt the software to implement them with agility. Many teams focus on addressing new functional requirements and often overlook software qualities.

Architecture Evolution:

The original architecture may need to change because of new requirements (e.g., ‘the application shall provide a native Android front-end’) or the adoption of technology innovations (e.g., adopting the Spring Security Framework for web authentication/authorization). How can we safely evolve the architecture while keeping things working and staying focused on the top-priority requirements? As the system evolves, it is important that the architecture evolves with it.

Technical Debt:

As changes are introduced into the system, it is normal to accumulate some technical debt. If the debt goes unchecked and is never paid back, the software can become muddy [FY00], and the actual architecture of the existing implementation may not exhibit the desired modularity, interoperability, portability, and other qualities. In particular, any maintenance becomes a costly and risky task.

Inspection Cost:

Manually inspecting code to detect problems, specifically with the architecture, can be very difficult and time-consuming. This kind of inspection requires an experienced person with good knowledge of the architecture and coding guidelines. Automating it helps, although automated tools often still require an experienced person to describe the important rules for the architecture.

Just like any other business, software application companies have to strike a fine balance between application costs and profit margins. With respect to price, static code analysis tools are often cheaper than dynamic analyzers.

Time:

Often, poor code quality or problems in the application architecture are identified, but the team does not have, or is not given, the time to stop adding features and correct them. Agile teams generally focus on features based on backlog items. If time is not allocated to refactor the code and keep things clean, the system can erode into something muddy.

Metrics:

One of the most common analysis tools is one that extracts metrics from the source code. These metrics can also be compared to statistical thresholds that indicate whether the measured value is low, average, or high. The average value per package or module can help identify areas or layers that require more attention. This kind of analysis is usually presented in reports accessible to everyone on the team. Examples of metrics include:

- Number of violations per category and ratio per KLOC.

- Test coverage for unit tests, integration tests, or both.

- Size measures: LOC, number of classes, methods, etc.

- Number of cyclic dependencies.

- Cyclomatic complexity.

- Technical debt.
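To make two of these metrics concrete, here is a minimal sketch that assumes Python source code and hypothetical thresholds (5 and 10) for the low/average/high classification; real tools use their own counting rules and thresholds. It approximates McCabe cyclomatic complexity by counting decision points in each function's syntax tree, and computes violations per KLOC from a violation count and a line count supplied by the caller. The function names are illustrative only.

```python
import ast

# Simplified McCabe-style count: each of these nodes adds one decision point.
DECISION_NODES = (
    ast.If, ast.IfExp, ast.For, ast.AsyncFor, ast.While,
    ast.ExceptHandler, ast.comprehension,
)


def cyclomatic_complexity(func: ast.AST) -> int:
    """Approximate cyclomatic complexity of a function (nested functions included)."""
    complexity = 1
    for node in ast.walk(func):
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` short-circuits in two places, adding two paths.
            complexity += len(node.values) - 1
    return complexity


def complexity_report(source: str, low: int = 5, high: int = 10) -> dict[str, tuple[int, str]]:
    """Map each function name to (complexity, 'low' | 'average' | 'high')."""
    report = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            cc = cyclomatic_complexity(node)
            level = "low" if cc <= low else "average" if cc <= high else "high"
            report[node.name] = (cc, level)
    return report


def violations_per_kloc(violations: int, lines_of_code: int) -> float:
    """Number of violations per thousand lines of code."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return violations / (lines_of_code / 1000)
```

For example, a function with two `if` statements, one `for` loop, and one `and` operator scores 1 + 2 + 1 + 1 = 5, which these thresholds classify as low. Similarly, 30 violations in 12,000 lines give 2.5 violations per KLOC.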

Code Smells Detection:

The combination of some issues in a class can reveal design disharmonies, also known as code smells [Fow99]. Automated code smell detection is usually based on rules executed through static code analysis and parameterized by metric values. For example, one can configure the number of lines of code (or number of instructions) that indicates the presence of the long method smell. The result of this type of analysis is usually presented in a report or displayed as warnings during the build phase.
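A parameterized long-method rule of the kind described above might look like the following minimal sketch. The function name `find_long_methods` and the default limit of 30 lines are assumptions for illustration. In a real setup the limit would come from the tool's configuration, and the findings would be printed as build warnings, as the loop at the end does.

```python
import ast


def find_long_methods(source: str, max_lines: int = 30) -> list[tuple[str, int]]:
    """Report functions whose span (def line to last body line) exceeds max_lines.

    Blank lines and comments inside the function count toward its length;
    a real tool might count statements or instructions instead.
    """
    smells = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            length = node.end_lineno - node.lineno + 1  # end_lineno needs Python 3.8+
            if length > max_lines:
                smells.append((node.name, length))
    return sorted(smells)


demo = (
    "def short():\n"
    "    return 1\n"
    "\n"
    "def long_one():\n"
    "    a = 1\n"
    "    b = 2\n"
    "    c = 3\n"
    "    return a + b + c\n"
)
# Emit the findings as warnings, as a build step might.
for name, length in find_long_methods(demo, max_lines=3):
    print(f"warning: long method smell in {name}() ({length} lines, limit 3)")
```

Keeping the threshold as a parameter rather than a constant reflects the point above: the team decides what "long" means for its code base.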

- Application security checks: some tools specialize in detecting security vulnerabilities in application programs. Examples include vulnerability to SQL injection and cross-site scripting, hard-coded passwords, and overridden security-critical methods.

- Architectural conformance: here the tool inspects the source code to make sure specific design rules are followed. These design rules typically include the allowed dependencies in the layered architecture—for example, a module in the business logic layer cannot depend on a module in the presentation layer.
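An architectural conformance rule can be sketched as a dependency check between layers. The following minimal sketch assumes a hypothetical package layout `app.<layer>.<module>` with three layers ordered from top to bottom (`presentation`, `business`, `persistence`). It reads dependencies from absolute import statements only and ignores relative and dynamic imports. A module may import from its own layer or from any layer below it, so a business module that imports a presentation module is reported as a violation.

```python
import ast
from typing import Optional

# Hypothetical layers, ordered from top to bottom of the architecture.
LAYERS = ["presentation", "business", "persistence"]


def layer_of(module: str) -> Optional[str]:
    """Return the layer of a module named app.<layer>.<...>, or None."""
    parts = module.split(".")
    if len(parts) >= 2 and parts[0] == "app" and parts[1] in LAYERS:
        return parts[1]
    return None


def imported_modules(source: str) -> set[str]:
    """Collect absolute module names imported by a source file."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            names.add(node.module)
    return names


def check_layering(modules: dict[str, str]) -> list[str]:
    """Return 'importer -> imported' pairs where a layer depends on a layer above it."""
    violations = []
    for module, source in sorted(modules.items()):
        source_layer = layer_of(module)
        if source_layer is None:
            continue
        for target in sorted(imported_modules(source)):
            target_layer = layer_of(target)
            # Allowed: same layer or any layer below; a smaller index means higher up.
            if target_layer is not None and LAYERS.index(target_layer) < LAYERS.index(source_layer):
                violations.append(f"{module} -> {target}")
    return violations


# Hypothetical project: module name -> source code.
project = {
    "app.business.orders": "from app.presentation.views import render\nimport app.persistence.db\n",
    "app.presentation.views": "import app.business.orders\n",
}
print(check_layering(project))  # ['app.business.orders -> app.presentation.views']
```

Expressing the allowed dependencies as data (the ordered `LAYERS` list) keeps the rule easy to review and change as the architecture evolves.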

Disadvantages of Implementing a Continuous Inspection Setup

Overconfidence in the tools can make the team complacent about aspects of the software that the tools do not address.

The setup of these tools and their integration with build tools and IDEs is usually time-consuming and should be planned at the beginning of the project. If the static analysis tools are not properly configured and unnecessary verifications are enabled, too many violations may be reported. When continuous inspection reports or the IDE show thousands of warnings, many of them considered non-issues, developers tend to ignore all of the warnings.

If the motivation for and benefits of continuous inspection are not made clear to all developers, some of them may feel, and complain, that the verifications are an annoyance that disturbs and constrains their work and creative process.

Often, development teams install and begin to use tools that calculate metrics on the software code, but they don’t take the time to study, explore, and configure the various metrics. For example, some tools calculate an overarching “quality index” that combines a ratio of code violations with test coverage and a measure of complexity. Some metrics are derived from other basic metrics using weighted equations. The default values used in these calculations, as well as the weight of each factor, may not be appropriate for a given software project, and the metrics can be skewed or misleading. For example, some tools calculate technical debt, and one of the outputs is the dollar amount required to pay the debt. If the average developer salary has not been configured in the tool, the debt amount may not be realistic.
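The effect of unconfigured defaults is easy to see with a small model. The following sketch is hypothetical: the weights, the 0 to 10 scale, and the default hourly rate (standing in for the developer salary mentioned above) are illustrative assumptions, not the formula of any particular tool. It converts estimated remediation time into a monetary amount and combines three normalized measures into a weighted quality index.

```python
import math
from dataclasses import dataclass


@dataclass
class DebtSettings:
    # Hypothetical default: a generic rate shipped with a tool that nobody changed.
    hourly_rate: float = 50.0  # cost of one developer hour, in any currency


def debt_cost(remediation_minutes: float, settings: DebtSettings) -> float:
    """Convert estimated remediation effort into a monetary amount."""
    return remediation_minutes / 60 * settings.hourly_rate


def quality_index(
    violation_ratio: float,   # 0.0 = no violations, 1.0 = worst observed
    coverage: float,          # fraction of lines covered by tests, 0.0 to 1.0
    complexity_ratio: float,  # 0.0 = simple, 1.0 = at or above the complexity limit
    weights: tuple[float, float, float] = (0.4, 0.4, 0.2),
) -> float:
    """Weighted index on a 0 to 10 scale; higher is better."""
    if not math.isclose(sum(weights), 1.0):
        raise ValueError("weights must sum to 1")
    for value in (violation_ratio, coverage, complexity_ratio):
        if not 0.0 <= value <= 1.0:
            raise ValueError("inputs must be normalized to the range 0..1")
    w_violations, w_coverage, w_complexity = weights
    score = (
        w_violations * (1 - violation_ratio)
        + w_coverage * coverage
        + w_complexity * (1 - complexity_ratio)
    )
    return 10 * score


default_estimate = debt_cost(600, DebtSettings())                  # 500.0
configured_estimate = debt_cost(600, DebtSettings(hourly_rate=90))  # 900.0
```

With the same 600 minutes of estimated remediation effort, a tool left at a default rate of 50 per hour reports a debt of 500, while a team whose actual cost is 90 per hour carries a debt of 900. The weights deserve the same scrutiny, since changing them changes which projects look healthiest.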

Inspection with a static analysis tool differs from human-based inspection in two main ways.

First, these tools are cheap to run often. They require human intervention only to configure them and run them once. After that, they are automated and save money compared with a person’s hourly rate.

Second, these tools bring the unflinching and unrelenting objectivity of a computer. A computer won’t offer compromises like “Your code looks fine if you say mine looks fine,” and it won’t ask for bio-breaks and personal time if you run an automated inspection tool every time the version control repository changes.

These tools are also customizable—organizations can choose the most relevant rules for their code base and run these rules every time code is checked into the version control repository.

Continuous Inspection best practices

- Must come from the existing continuous integration process

- Available within 24 hours

- Meaningful to developers, leads and management

- Must involve reviewers

- Must tie to accountability points (Leads, reviewers, submitters, project, business function)

- Necessary for large projects: agile and timely reporting within hours

- Necessary for zero debt: architects only have time for the big issues, while the computer handles the smaller quality defects

- Allow for exclusions, integrations, class renames, and code moves

- Classes exempted from quality checks: registries and test support

- Unfixable debt: debt caused by someone else, unreachable test cases, and exceptions to the metrics standard

- Technical Debt Defined

— Coverage – Uncovered conditions & Uncovered lines

— Complexity – Average method complexity & Total class complexity

— Compliance – 140 static code analysis rules with critical, major, and minor weights

— Comments – Comment density

— Duplication – Duplicated lines

— Organization – Circular dependencies

Process of Continuous Inspection

Use available automated tools to continuously inspect code, generate a report on overall code health, and point out any violations detected. These tools can run locally on the developer’s machine as well as on a continuous integration server that builds the code at specific time intervals or upon each code commit.

Quality Gates

Soft Gate

- Quality issues raised do not break the build, but may prompt further review by peers

Hard Gate

- Breaks the build

- Can be overridden after team review
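The two gate types can be expressed as a small evaluation step that runs after the analysis tools. In the following minimal sketch, the metric names, thresholds, and override mechanism (a set of metric names approved after team review) are hypothetical. Tools that support quality gates configure them in their own way. A failed soft condition only produces a warning that prompts peer review, while a failed hard condition fails the build unless it has been overridden.

```python
from dataclasses import dataclass
from enum import Enum


class GateType(Enum):
    SOFT = "soft"  # report only; prompt peer review
    HARD = "hard"  # break the build unless overridden


@dataclass
class Condition:
    metric: str
    threshold: float
    gate: GateType
    higher_is_better: bool = True


def evaluate_gate(conditions, measures, overrides=frozenset()):
    """Return (build_ok, messages) for the measured values."""
    build_ok = True
    messages = []
    for cond in conditions:
        value = measures[cond.metric]
        if cond.higher_is_better:
            failed = value < cond.threshold
        else:
            failed = value > cond.threshold
        if not failed:
            continue
        if cond.gate is GateType.SOFT:
            messages.append(f"WARN {cond.metric}={value} (threshold {cond.threshold}): flag for peer review")
        elif cond.metric in overrides:
            messages.append(f"OVERRIDDEN {cond.metric}={value}: accepted after team review")
        else:
            build_ok = False
            messages.append(f"FAIL {cond.metric}={value} (threshold {cond.threshold})")
    return build_ok, messages


# Hypothetical metric names and thresholds.
conditions = [
    Condition("coverage_pct", 80.0, GateType.HARD),
    Condition("duplicated_lines_pct", 3.0, GateType.SOFT, higher_is_better=False),
]
build_ok, messages = evaluate_gate(conditions, {"coverage_pct": 75.0, "duplicated_lines_pct": 5.0})
for line in messages:
    print(line)
```

In a CI job, the script would then exit with a non-zero status when `build_ok` is `False`, for example with `sys.exit(0 if build_ok else 1)`, which CI servers generally treat as a failed build.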

Difference between dynamic code analysis and static code analysis

Static analysis is the testing and evaluation of an application by examining the code without executing the application, whereas dynamic analysis is the testing and evaluation of an application during runtime.

Many software defects that cause memory and threading errors can be detected both dynamically and statically. The two approaches are complementary because no single approach can find every error.

The primary advantage of dynamic analysis is that it reveals subtle defects or vulnerabilities whose cause is too complex to be discovered by static analysis. Dynamic analysis can play a role in security assurance, but its primary goal is finding and debugging errors.

Should you start with static tools or dynamic tools or use both?

In addition to static analysis, which reviews code before it goes live, there are also dynamic analysis tools, which conduct automated scans of production Web applications to unearth vulnerabilities. In other words, dynamic tools test from the outside in, while static tools test from the inside out, says Neil McDonald, an analyst at Gartner.

Many organizations start with dynamic testing, just to get a quick assessment of where their applications stand, McDonald says. In some cases, the groups that start this initiative are in security or audit compliance departments and don’t have access to source code. The natural second step is to follow up with static analyzers, enabling developers to fix the problems found by dynamic analysis tools. Some companies continue using both, because each type yields different findings.

An important differentiator between the two types is that static analyzers give you the exact line of code causing the problem, while dynamic analyzers just identify the Web page or URL causing the issue. That’s why some vendors offer integration between the two types of tools.

I’m a DevOps/SRE/DevSecOps/Cloud Expert passionate about sharing knowledge and experiences. I am working at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I Reviewed, and SEO strategies at Wizbrand.

Please find my social handles as below;

Rajesh Kumar Personal Website

Rajesh Kumar at YOUTUBE

Rajesh Kumar at INSTAGRAM

Rajesh Kumar at X

Rajesh Kumar at FACEBOOK

Rajesh Kumar at LINKEDIN

Rajesh Kumar at PINTEREST

Rajesh Kumar at QUORA

Rajesh Kumar at WIZBRAND
