The expression browser is available at /graph on the Prometheus server, allowing you to enter any expression and see its result either in a table or graphed over time.

This is primarily useful for ad-hoc queries and debugging. For graphs, use Grafana or Console templates.

Using the expression browser

As you can gather from localhost:9090/metrics, one metric that Prometheus exports about itself is named prometheus_target_interval_length_seconds (the actual amount of time between target scrapes). Enter the following into the expression console and then click "Execute":

-------------------------------------------

prometheus_target_interval_length_seconds

-------------------------------------------

This should return a number of different time series (along with the latest value recorded for each), each with the metric name prometheus_target_interval_length_seconds, but with different labels. These labels designate different latency percentiles and target group intervals.

If we are interested only in 99th percentile latencies, we could use this query:

-------------------------------------------

prometheus_target_interval_length_seconds{quantile="0.99"}

-------------------------------------------

To count the number of returned time series, you could write:

-------------------------------------------

count(prometheus_target_interval_length_seconds)

-------------------------------------------

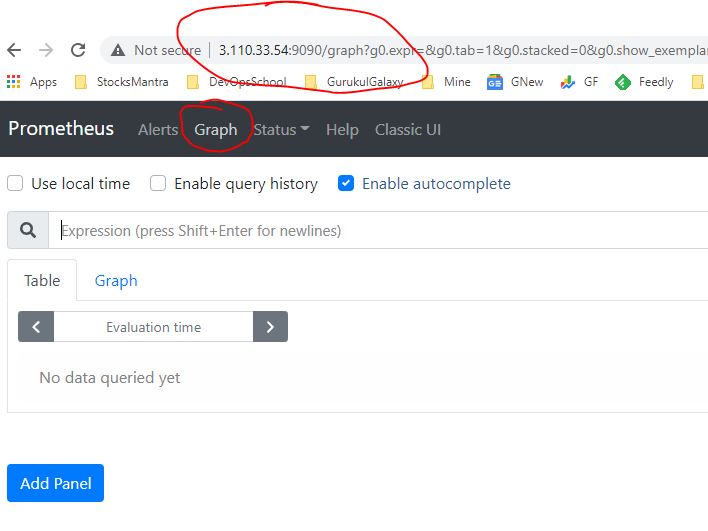
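To make the label matcher and count() more concrete, here is a minimal Python sketch of what the two queries above do conceptually. It is an illustration only, not how Prometheus evaluates queries internally: the `series` list, its sample values, and the helper names `select` and `count` are all made up for this example.

```python
# Toy stand-in for the series an instant query might return.
# The "interval" label value and all sample values are invented for illustration.
series = [
    {"labels": {"interval": "15s", "quantile": "0.01"}, "value": 14.99},
    {"labels": {"interval": "15s", "quantile": "0.05"}, "value": 14.99},
    {"labels": {"interval": "15s", "quantile": "0.5"}, "value": 15.00},
    {"labels": {"interval": "15s", "quantile": "0.9"}, "value": 15.00},
    {"labels": {"interval": "15s", "quantile": "0.99"}, "value": 15.01},
]


def select(all_series, **matchers):
    """Keep only series whose labels equal every matcher, like {name="value"}."""
    return [
        s for s in all_series
        if all(s["labels"].get(name) == value for name, value in matchers.items())
    ]


def count(all_series):
    """Number of series, mirroring what count(...) reports for one group."""
    return len(all_series)


p99 = select(series, quantile="0.99")
print(len(p99))       # 1
print(count(series))  # 5
```

Note that label values are compared as strings, which is why the matcher is written as "0.99" rather than as a number.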

Using the graphing interface

To graph expressions, navigate to http://localhost:9090/graph and use the "Graph" tab.

For example, enter the following expression to graph the per-second rate of chunks being created in the self-scraped Prometheus:

-------------------------------------------

rate(prometheus_tsdb_head_chunks_created_total[1m])

-------------------------------------------
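As a rough intuition for what rate() computes over a 1-minute window, the sketch below divides the increase of a counter by the elapsed time. This is a simplification under stated assumptions: the real rate() function also handles counter resets and extrapolates to the window boundaries, which this sketch ignores. The function name `simple_rate` and the sample data are hypothetical.

```python
def simple_rate(samples):
    """Approximate per-second rate of a counter.

    samples: list of (timestamp_seconds, counter_value) pairs, oldest first.
    Ignores counter resets and window extrapolation (unlike Prometheus).
    """
    if len(samples) < 2:
        return None  # not enough points to compute an increase
    (t_first, v_first), (t_last, v_last) = samples[0], samples[-1]
    return (v_last - v_first) / (t_last - t_first)


# Made-up counter samples scraped every 15 seconds over one minute.
window = [(0, 100.0), (15, 103.0), (30, 109.0), (45, 112.0), (60, 118.0)]
print(simple_rate(window))  # 0.3
```

Here the counter grew by 18 over 60 seconds, so the approximate rate is 0.3 per second.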

Experiment with the graph range parameters and other settings.

Configure Prometheus to monitor the sample targets such as Node Exporter

Example: queries that aggregate over thousands of time series

Though not a problem in our example, queries that aggregate over thousands of time series can get slow when computed ad-hoc. To make this more efficient, Prometheus can prerecord expressions into new persisted time series via configured recording rules.

Let's say we are interested in recording the per-second rate of CPU time (node_cpu_seconds_total) averaged over all CPUs per instance (but preserving the job, instance and mode dimensions) as measured over a window of 5 minutes. We could write this as:

-------------------------------------------

avg by (job, instance, mode) (rate(node_cpu_seconds_total[5m]))

-------------------------------------------
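To see what "avg by (job, instance, mode)" does to the cpu dimension, here is a small Python sketch of the grouping step. It assumes the per-CPU rates have already been computed; the helper `avg_by`, the label values, and the rate values are all hypothetical and only meant to illustrate the idea, not Prometheus internals.

```python
from collections import defaultdict


def avg_by(series, keys):
    """Average values across series that share the same values for `keys`.

    Labels not listed in `keys` (here: cpu) are dropped from the result.
    """
    groups = defaultdict(list)
    for s in series:
        group_key = tuple(s["labels"][k] for k in keys)
        groups[group_key].append(s["value"])
    return {key: sum(values) / len(values) for key, values in groups.items()}


# Made-up per-CPU rates for one instance with two CPUs and two modes.
base = {"job": "node", "instance": "localhost:8080"}
rates = [
    {"labels": {**base, "mode": "idle", "cpu": "0"}, "value": 0.5},
    {"labels": {**base, "mode": "idle", "cpu": "1"}, "value": 0.75},
    {"labels": {**base, "mode": "user", "cpu": "0"}, "value": 0.25},
    {"labels": {**base, "mode": "user", "cpu": "1"}, "value": 0.125},
]

result = avg_by(rates, ["job", "instance", "mode"])
for labels, value in sorted(result.items()):
    print(labels, value)
```

Four input series collapse into two output series, one per mode, because the cpu label is averaged away while job, instance and mode are preserved.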

