Evolution and Timeline of Machine Learning

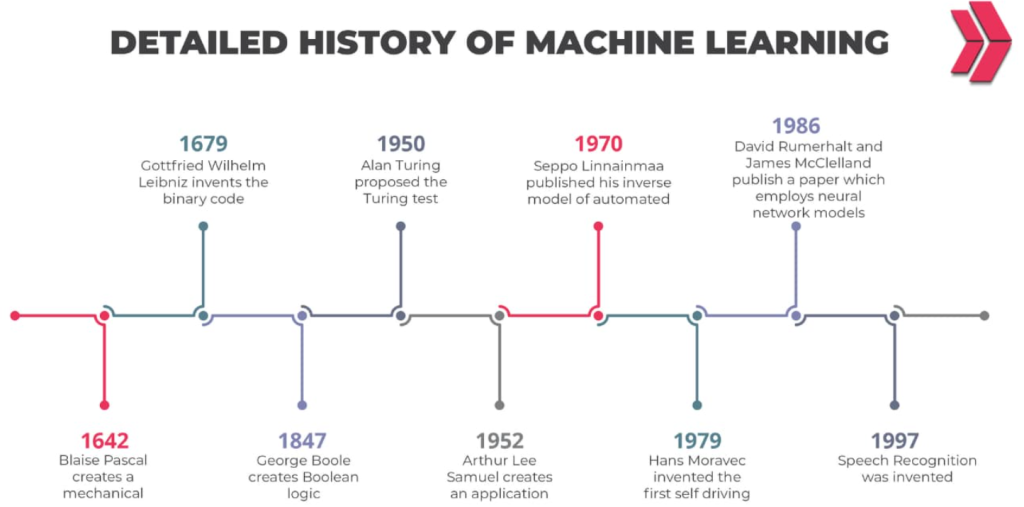

Machine Learning (ML) has evolved from a niche area of research to a cornerstone of modern technology, driving advancements in various fields, including artificial intelligence, data science, and automation. This timeline outlines the key milestones in the development of machine learning, tracing its journey from early theoretical concepts to the sophisticated models and applications we see today.

1940s – 1950s: The Foundations of Machine Learning

- 1943: McCulloch and Pitts’ Neural Network Model

- Warren McCulloch and Walter Pitts published a paper on a mathematical model for neural networks, introducing the concept of neurons as binary units that could perform simple computations, laying the groundwork for artificial neural networks.

- 1949: Hebbian Learning

- Donald Hebb introduced the idea of Hebbian learning in his book The Organization of Behavior, proposing that the synaptic connection between two neurons is strengthened when they are repeatedly active together. The idea later inspired unsupervised learning rules for neural networks.

- 1950: Turing Test

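- In his paper “Computing Machinery and Intelligence,” Alan Turing proposed what became known as the Turing Test (he called it the “imitation game”) as a measure of machine intelligence, asking whether a machine could exhibit intelligent behavior indistinguishable from a human’s. The test is not a learning algorithm, but the same paper speculated about “learning machines” that could be taught rather than fully programmed, an idea that influenced the later development of AI and ML.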
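To make the two 1940s ideas concrete, here is a minimal Python sketch that models a McCulloch-Pitts unit as a binary threshold function and applies one step of a simple Hebbian update, Δw = η·x·y. The function names, the learning rate `lr`, and the example weights are illustrative assumptions. This is a textbook simplification, not a reproduction of either original work.

```python
def mp_neuron(inputs, weights, threshold):
    """McCulloch-Pitts style unit: fire (1) if the weighted input sum reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def hebbian_update(weights, inputs, output, lr=0.1):
    """Simple Hebbian rule (hypothetical helper): delta_w_i = lr * x_i * y.

    A weight grows only when its input and the unit's output are active together.
    """
    return [w + lr * x * output for w, x in zip(weights, inputs)]


# With weights (1, 1) and threshold 2, the unit computes logical AND.
truth_table = {(a, b): mp_neuron((a, b), (1, 1), threshold=2) for a in (0, 1) for b in (0, 1)}
print(truth_table)  # {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}

# Input 0 and the output fire together while input 1 is silent, so only weight 0 grows.
print(hebbian_update([0.5, 0.5], inputs=(1, 0), output=1, lr=0.25))  # [0.75, 0.5]
```

A fixed threshold unit can compute logic functions but cannot learn. Hebb's rule is one of the earliest proposals for how connection strengths could change with experience.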

1950s – 1960s: Early Concepts and Algorithms

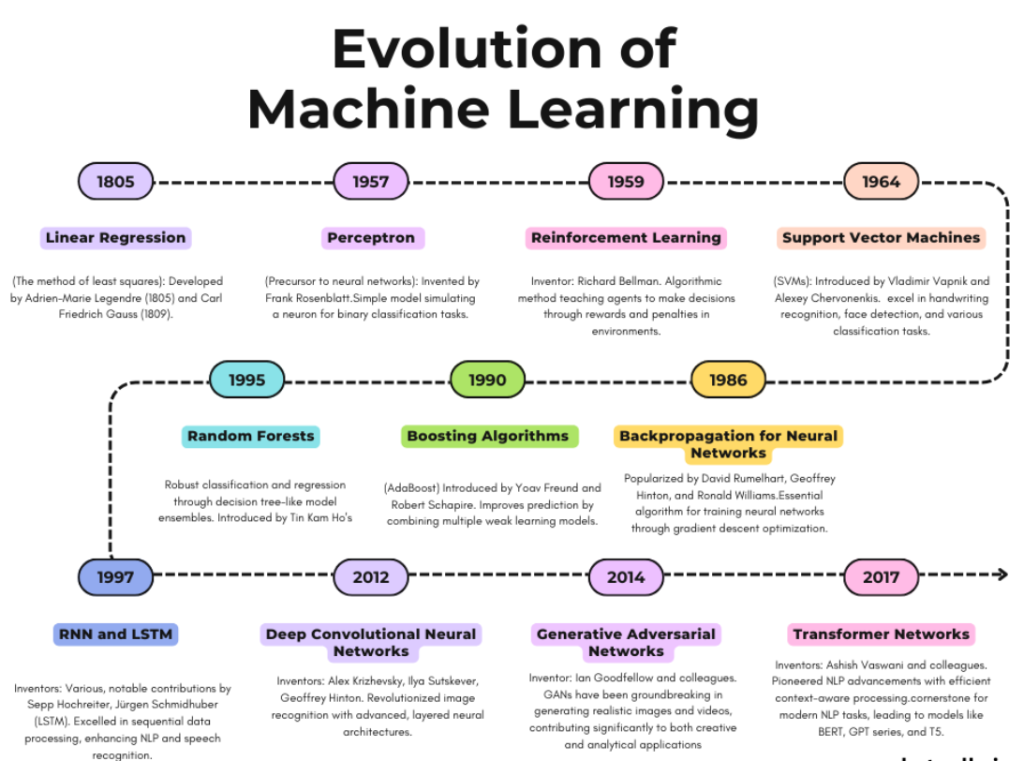

- 1957: Perceptron

- Frank Rosenblatt developed the Perceptron, an early type of artificial neural network capable of learning linearly separable patterns. It was one of the first models that could learn from data, marking a significant milestone in machine learning.

- 1959: The Term “Machine Learning” Coined

- Arthur Samuel, a pioneer in artificial intelligence, is widely credited with coining the term “machine learning” in a 1959 paper describing a checkers program that learned to play better than its creator. His work emphasized the idea that machines could learn from experience.

- 1960s: Nearest Neighbor and Decision Trees

- Nearest neighbor methods, which classify a new example by the label of the most similar stored example, were studied as simple techniques for classification and pattern recognition. Decision trees, a fundamental method for classification and regression tasks, also began to take shape during this period.

- 1967: K-Nearest Neighbors (K-NN)

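- Thomas Cover and Peter Hart published a formal analysis of the nearest neighbor rule, building on a 1951 report by Evelyn Fix and Joseph Hodges. They showed that the rule's asymptotic error rate is at most twice the optimal (Bayes) error rate. The k-nearest neighbors algorithm classifies an object by a majority vote among the k training examples closest to it, and it remains widely used in various ML tasks.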
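The perceptron's learning rule and the nearest neighbor idea are both short enough to sketch directly. The Python below is a minimal illustration that assumes labels in {-1, +1} for the perceptron, and Euclidean distance with a simple majority vote for k-NN. The function names, defaults, and toy data are hypothetical. Rosenblatt's original Mark I hardware also differed considerably from this textbook form.

```python
import math
from collections import Counter


def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Perceptron learning rule for labels in {-1, +1}.

    On each misclassified example: w <- w + lr * y * x and b <- b + lr * y.
    """
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * activation <= 0:  # on the boundary or on the wrong side of it
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                mistakes += 1
        if mistakes == 0:  # every training example is now classified correctly
            break
    return w, b


def predict_perceptron(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k training points nearest to `query` (Euclidean distance)."""
    nearest = sorted(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Logical AND is linearly separable, so the perceptron rule converges on it.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [-1, -1, -1, 1]
w, b = train_perceptron(X, y)
print([predict_perceptron(w, b, x) for x in X])  # [-1, -1, -1, 1]

# k-NN: a query near the first cluster takes that cluster's label.
points = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
names = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(points, names, (0.5, 0.5)))  # a
```

The two methods show contrasting designs. The perceptron compresses the training data into a weight vector, while k-NN keeps every example and defers all the work to prediction time.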

1970s – 1980s: The Rise of Statistical Learning

- 1970s: The Development of the Backpropagation Algorithm

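- Paul Werbos described applying backpropagation to neural networks in his 1974 PhD thesis, building on the reverse-mode differentiation technique published by Seppo Linnainmaa in 1970. However, the method did not gain widespread attention until the 1980s. Backpropagation lets a multi-layer network learn by propagating the output error backward through its layers. It computes how much each weight contributed to that error, so the weight can be adjusted to reduce it.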

- 1979: The Stanford Cart

- The Stanford Cart, a mobile robot developed at Stanford University, crossed a chair-filled room without human intervention in 1979 under Hans Moravec, making it one of the earliest autonomous vehicles. It navigated around obstacles using stereo computer vision, demonstrating the potential of perception algorithms in robotics.

- 1980s: Expert Systems and Decision Trees

- Expert systems, which encoded human expertise as hand-written if-then rules to mimic human decision-making, became commercially popular. In parallel, decision tree learning algorithms such as Ross Quinlan’s ID3 (Iterative Dichotomiser 3), which induce their rules from training data, advanced machine learning for classification tasks.

- 1982: Hopfield Networks

- John Hopfield introduced Hopfield Networks, a type of recurrent neural network used for associative memory. This model demonstrated the potential of neural networks in solving complex optimization problems.

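- 1986: Backpropagation Popularized

- David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized backpropagation in their 1986 paper “Learning representations by back-propagating errors.” They showed that it could train multi-layer networks to learn useful internal representations, which revitalized neural network research.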
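Backpropagation is the chain rule applied layer by layer, from the output back toward the input. The sketch below computes the gradients of a squared-error loss for a hypothetical 2-2-1 network with sigmoid hidden units. It then checks one gradient against a finite-difference estimate. The network size, the parameter values, and the helper names are all assumptions, and this is a minimal illustration rather than the 1986 paper's formulation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """2 inputs -> 2 sigmoid hidden units -> 1 linear output."""
    W1, b1, w2, b2 = params
    h = [sigmoid(sum(W1[j][i] * x[i] for i in range(len(x))) + b1[j]) for j in range(len(b1))]
    y_hat = sum(w2[j] * h[j] for j in range(len(h))) + b2
    return h, y_hat


def loss(params, x, y):
    """Squared error 0.5 * (y_hat - y)^2."""
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backprop(params, x, y):
    """Gradients of the loss w.r.t. every parameter, computed output layer first."""
    _, _, w2, _ = params
    h, y_hat = forward(params, x)
    d_out = y_hat - y                          # dL/dy_hat
    grad_w2 = [d_out * hj for hj in h]         # dL/dw2_j = d_out * h_j
    grad_b2 = d_out
    # Send the error back through w2 and the sigmoid, using sigmoid'(z) = h * (1 - h).
    delta = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
    grad_W1 = [[delta[j] * xi for xi in x] for j in range(len(h))]
    grad_b1 = list(delta)
    return grad_W1, grad_b1, grad_w2, grad_b2


def numeric_grad_W1(params, x, y, j, i, eps=1e-6):
    """Central finite-difference estimate of dL/dW1[j][i], used to check backprop."""
    W1, b1, w2, b2 = params

    def shifted(step):
        W = [row[:] for row in W1]
        W[j][i] += step
        return (W, b1, w2, b2)

    return (loss(shifted(eps), x, y) - loss(shifted(-eps), x, y)) / (2 * eps)


# Hypothetical parameters and a single training example.
params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.05)
x, y = [1.0, 2.0], 1.0
grads = backprop(params, x, y)
print(abs(grads[0][0][1] - numeric_grad_W1(params, x, y, 0, 1)) < 1e-8)  # True
```

A single backward pass yields every gradient at roughly the cost of one forward pass. The finite-difference check, by contrast, needs two extra forward passes per weight. This efficiency is why backpropagation made multi-layer training practical.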

1990s: The Emergence of Modern Machine Learning

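- 1992-1995: Support Vector Machines (SVM)

- Bernhard Boser, Isabelle Guyon, and Vladimir Vapnik introduced kernel-based Support Vector Machines in 1992, and Corinna Cortes and Vapnik extended them to the soft-margin formulation in 1995. SVMs separate classes with the widest possible margin. They became one of the most popular and effective supervised learning algorithms for classification and, later, regression tasks.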

- 1995: Random Forests

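- Tin Kam Ho introduced random decision forests, which combine many decision trees, each trained on a random subset of the features, to improve predictive performance and reduce overfitting. Leo Breiman later extended the method and popularized it as Random Forests in 2001.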

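- 1997: Long Short-Term Memory (LSTM)

- Sepp Hochreiter and Jürgen Schmidhuber introduced LSTM, a recurrent neural network (RNN) architecture whose gated memory cells mitigate the vanishing-gradient problem. RNNs, a class of neural networks that process sequential data, had existed since the 1980s. LSTMs made them practical for long sequences, and they later became prominent in language modeling, speech recognition, and time-series prediction.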

- 1998: MNIST Dataset

- The MNIST dataset of handwritten digits was introduced and became a standard benchmark for evaluating machine learning models, particularly in the field of computer vision.

2000s: The Rise of Big Data and Advanced Algorithms

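- 2000s: Boosting Goes Mainstream

- Boosting algorithms such as AdaBoost (Adaptive Boosting), introduced by Yoav Freund and Robert Schapire in the mid-1990s, gained wide popularity. Boosting trains weak learners in sequence, reweighting the data so that each new learner focuses on the examples its predecessors got wrong. It then combines them into a single strong learner.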

- 2006: Deep Learning and the Emergence of Deep Belief Networks

- Geoffrey Hinton, Simon Osindero, and Yee-Whye Teh showed that Deep Belief Networks (DBNs) could be trained effectively by greedy, layer-by-layer pre-training. The result is often cited as the start of the deep learning revival. DBNs could learn hierarchical representations of data, making them effective in tasks like image and speech recognition.

- 2009: The Netflix Prize

- Netflix awarded its $1 million prize, launched in 2006, to the team BellKor’s Pragmatic Chaos. The winning algorithm improved the accuracy (measured by root-mean-square error) of Netflix’s existing Cinematch recommendation system by just over 10%. The competition highlighted the potential of collaborative filtering and matrix factorization in machine learning.

- 2006-2010: Big Data Tools

- Open-source big data tools such as Hadoop (first released in 2006) and Spark (open-sourced in 2010) enabled distributed processing of large-scale datasets, which became critical for training complex machine learning models.
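The reweighting idea behind boosting fits in a few lines. Below is a minimal Python sketch of discrete AdaBoost that uses one-dimensional decision stumps as the weak learners. The stump representation, the `rounds` parameter, and the toy data are illustrative assumptions. Production libraries implement the algorithm quite differently.

```python
import math


def stump_predict(x, threshold, polarity):
    """1-D decision stump: returns +polarity above the threshold, -polarity otherwise."""
    return polarity if x > threshold else -polarity


def adaboost(xs, ys, rounds=5):
    """Discrete AdaBoost over 1-D stumps; ys must be -1 or +1 and xs needs 2+ distinct values.

    Returns a list of (alpha, threshold, polarity) weak learners.
    """
    n = len(xs)
    weights = [1.0 / n] * n
    values = sorted(set(xs))
    thresholds = [(a + b) / 2 for a, b in zip(values, values[1:])]
    ensemble = []
    for _ in range(rounds):
        # Choose the stump with the lowest weighted error under the current weights.
        best = None
        for t in thresholds:
            for p in (1, -1):
                err = sum(w for w, x, y in zip(weights, xs, ys) if stump_predict(x, t, p) != y)
                if best is None or err < best[0]:
                    best = (err, t, p)
        err, t, p = best
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite for perfect stumps
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, t, p))
        # Raise the weight of misclassified points, lower the rest, then renormalize.
        weights = [w * math.exp(-alpha * y * stump_predict(x, t, p))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score > 0 else -1


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, 1, -1, -1, 1]  # no single threshold separates these labels
model = adaboost(xs, ys, rounds=1)
print(model[0][1:])  # (3.5, -1)
print([adaboost_predict(model, x) for x in xs])  # [1, 1, 1, -1, -1, -1]
```

On this toy data, the first round selects the stump at 3.5, which misclassifies only x = 6. The reweighting step then gives that point half of the total weight, so the next stump is chosen with it in focus.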

2010s: The Deep Learning Revolution

- 2012: AlexNet and the ImageNet Challenge

- AlexNet, a deep convolutional neural network (CNN) developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton and trained on GPUs, won the 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC). It achieved a top-5 error rate of 15.3%, compared with 26.2% for the runner-up. This victory is widely seen as the start of the modern deep learning era, and it demonstrated the power of deep neural networks in computer vision.

- 2014: Generative Adversarial Networks (GANs)

- Ian Goodfellow and his colleagues introduced Generative Adversarial Networks (GANs), a class of generative models in which two neural networks are trained against each other. A generator produces synthetic samples, and a discriminator tries to tell them apart from real data. GANs became widely used for generating realistic images, videos, and other data.

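- 2013-2015: Deep Reinforcement Learning

- DeepMind introduced Deep Q-Networks (DQNs), which combined Q-learning with deep convolutional networks to learn Atari 2600 games directly from screen pixels. The approach was first described in 2013, a year before Google acquired DeepMind, and was published in Nature in 2015, where it reached human-level performance on many of the games tested. This breakthrough led to significant advancements in reinforcement learning and AI.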

- 2015: The Rise of Transfer Learning

- Transfer learning is a technique in which a model pre-trained on a large dataset is fine-tuned for a new task. It became standard practice in computer vision, where networks pre-trained on ImageNet were reused for tasks with far less labeled data. It later transformed natural language processing (NLP), where models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), both released in 2018, demonstrated its effectiveness across many tasks.

- 2017: Transformer Networks

- The Transformer architecture, introduced by Vaswani et al. in “Attention Is All You Need,” revolutionized NLP by replacing recurrence with self-attention, which lets training be parallelized across all positions in a sequence. Transformers formed the basis for state-of-the-art models like BERT, GPT, and T5.

- 2018-2019: BERT and GPT-2

- In 2018, Google introduced BERT, a transformer-based model that achieved state-of-the-art results on a range of NLP benchmarks. In 2019, OpenAI released GPT-2, a 1.5 billion parameter transformer model capable of generating coherent and contextually relevant text.
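At the core of the Transformer is scaled dot-product attention, which Vaswani et al. define as softmax(QK^T / sqrt(d_k)) V. The pure-Python sketch below computes a single attention head. It omits learned projections, masking, multiple heads, and batching, and it uses hypothetical helper names; these are simplifying assumptions rather than how production frameworks implement attention.

```python
import math


def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of row vectors.

    Q is n_q x d_k, K is n_k x d_k, V is n_k x d_v.
    Returns (outputs, weights) with shapes n_q x d_v and n_q x n_k.
    """
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Each output row is an attention-weighted average of the value rows.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, V)) for c in range(len(V[0]))])
    return outputs, weights


# One query that points in the same direction as the first key.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
out, attn = scaled_dot_product_attention(Q, K, V)
print(attn[0][0] > attn[0][1])  # True: the matching key receives more weight
```

Each query's scores against all keys are independent of the others, so in a real implementation the loop becomes a single matrix multiplication. This is the parallelism that let Transformers replace recurrence.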

2020s: AI and Machine Learning at Scale

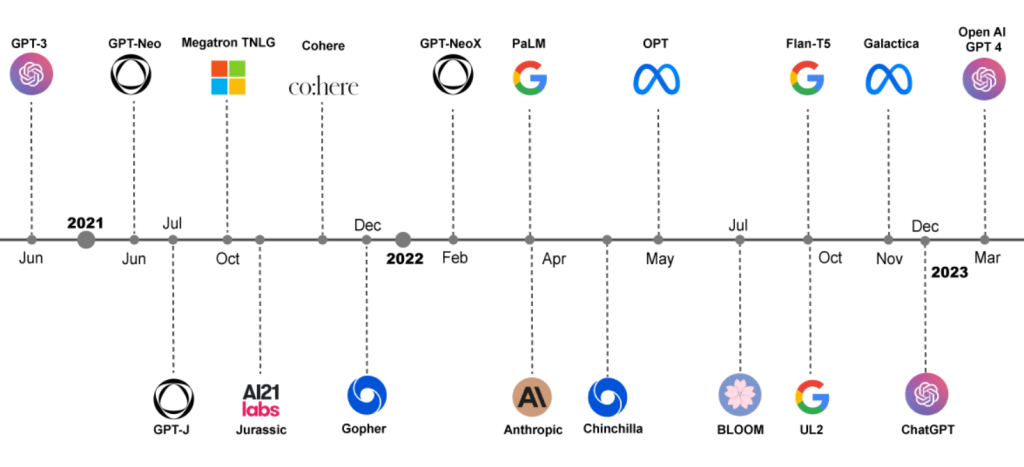

- 2020: GPT-3

- OpenAI released GPT-3, a 175 billion parameter transformer model, setting a new standard for NLP models. GPT-3 demonstrated the power of large-scale pre-training. It could perform many tasks, such as text generation, summarization, and translation, from just a few examples given in the prompt (few-shot learning), often without task-specific fine-tuning.

- 2020-2021: MLOps Adoption

- The adoption of MLOps practices became widespread as organizations recognized the need for scalable, reliable, and automated machine learning pipelines. MLOps integrates machine learning with DevOps practices to ensure models are deployed efficiently and maintained effectively.

- 2021: Transformers Beyond NLP

- Transformer models, originally designed for NLP, began to be applied to other domains like computer vision and protein structure prediction. Vision Transformers (ViTs), introduced in 2020, showed that transformers pre-trained on large datasets could match or exceed convolutional neural networks on image classification, a task CNNs had long dominated.

- 2021: AI Ethics and Responsible AI

- As machine learning models became more pervasive, the focus on AI ethics, fairness, transparency, and accountability grew. Organizations began implementing responsible AI practices, such as auditing training data and model outputs for bias, to make their models more ethically sound and reduce unfair outcomes.

- 2022 and Beyond: Democratization of AI

- Tools and platforms for AutoML (Automated Machine Learning) and no-code/low-code AI became more accessible, allowing non-experts to build and deploy machine learning models. This democratization is expected to accelerate the adoption of AI across industries.

Conclusion

The evolution of machine learning has been marked by significant advancements, from early theoretical concepts to the powerful algorithms and models that now drive much of the technology we use daily. The journey has been shaped by the contributions of researchers, the advent of big data, the rise of deep learning, and the integration of machine learning into a wide range of applications. As machine learning continues to evolve, it is likely to play an even larger role in shaping technology, business, and society. Its timeline is a testament to the rapid pace of innovation and to the ongoing quest to build systems that can learn, adapt, and transform the world around us.

I’m a DevOps/SRE/DevSecOps/Cloud Expert passionate about sharing knowledge and experiences. I work at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I reviewed, and SEO strategies at Wizbrand.

Please find my social handles below:

Rajesh Kumar Personal Website

Rajesh Kumar at YOUTUBE

Rajesh Kumar at INSTAGRAM

Rajesh Kumar at X

Rajesh Kumar at FACEBOOK

Rajesh Kumar at LINKEDIN

Rajesh Kumar at PINTEREST

Rajesh Kumar at QUORA

Rajesh Kumar at WIZBRAND

Starting: 1st of Every Month

+91 8409492687

Contact@DevOpsSchool.com