How do you know whether a deep learning model is any good? In this blog post, we will look at the main methods used to evaluate these models: classification metrics such as precision, recall, and F1 score, cross-validation techniques, and diagnostic tools such as confusion matrices and ROC curves.

Understanding Deep Learning Models

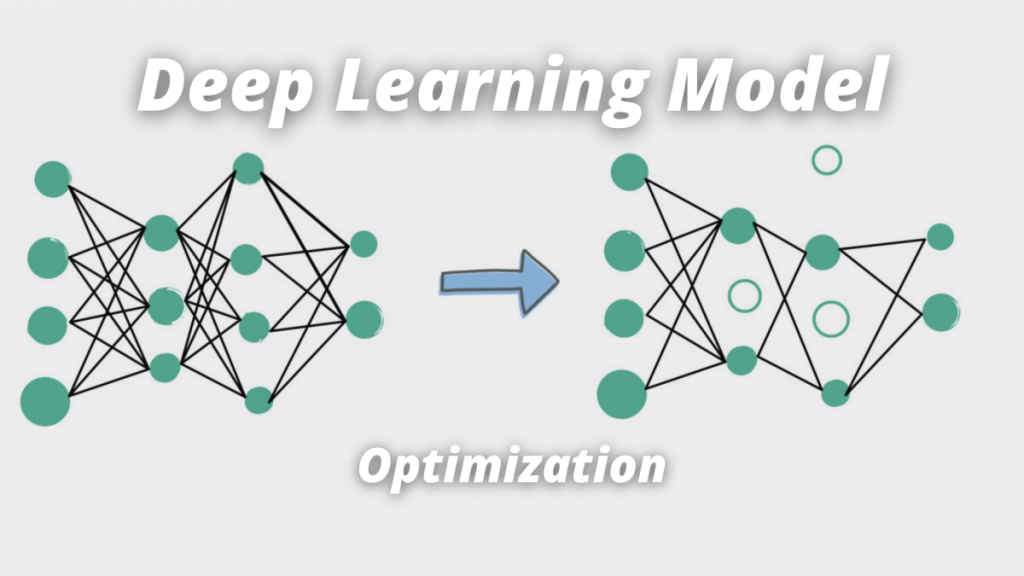

Before we dive into evaluation methods, let’s briefly discuss what deep learning models are. Deep learning is a subset of machine learning that involves training artificial neural networks to recognize patterns in data. These networks consist of layers of interconnected nodes that process information and make predictions.

Some common types of deep learning models include convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative adversarial networks (GANs). Each has its own architecture and typical applications: CNNs are widely used for image data, RNNs for sequential data such as text and time series, and GANs for generating new data that resembles the training set.

Evaluating Model Performance

Now that we understand what deep learning models are, let’s discuss how we evaluate their performance. Evaluation is a crucial step in the deep learning process, as it allows us to determine whether a model is accurate and reliable on data it did not see during training.

Accuracy Metrics

One of the most common ways to evaluate a deep learning classifier is to compute metrics that compare its predictions to the actual outcomes. The simplest is accuracy, the proportion of all predictions that are correct. Accuracy alone can be misleading when one class is much more frequent than the others, so precision, recall, and F1 score are usually reported alongside it.

Precision is the proportion of positive predictions that are correct: true positives divided by the sum of true positives and false positives. Recall is the proportion of actual positive outcomes that the model finds: true positives divided by the sum of true positives and false negatives. F1 score is the harmonic mean of precision and recall, and is often used as a single balanced measure of a model’s performance.
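To make these definitions concrete, here is a minimal sketch in plain Python. The function name `precision_recall_f1`, the toy labels, and the choice to return 0.0 when a denominator is zero are assumptions made for illustration, not part of any particular library.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    # Count the three outcomes these metrics depend on.
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # Assumption: report 0.0 when a ratio is undefined (zero denominator).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


# Toy labels (illustrative only): 3 true positives, 1 false positive, 1 false negative.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

In this toy example the model makes four positive predictions, three of which are correct, and finds three of the four actual positives, so precision and recall both come out to 0.75.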

Cross-Validation Techniques

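Another method used to evaluate deep learning models is cross-validation. Cross-validation involves splitting the data into multiple subsets (folds), training the model on all but one of them, and evaluating it on the fold that was held out. The process is repeated so that each fold serves once as the evaluation set, and the scores are averaged. This tests the model’s performance on different parts of the data and helps reveal overfitting, which shows up as a large gap between the training score and the held-out score.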

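Some common types of cross-validation techniques include k-fold cross-validation, leave-one-out cross-validation, and stratified cross-validation. K-fold splits the data into k folds of roughly equal size, leave-one-out is the extreme case in which every fold contains a single example, and stratified cross-validation keeps the class proportions roughly the same in every fold. Each technique has its own advantages and disadvantages, and the choice often depends on the size of the dataset, the class balance, and how expensive the model is to train.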
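The k-fold procedure can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions: `k_fold_indices`, `cross_validate`, and `majority_baseline` are hypothetical names, and a trivial majority-class baseline stands in for a real deep learning model so that the example runs without any external library.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly
    folds = [indices[i::k] for i in range(k)]  # k roughly equal folds
    for i in range(k):
        val_idx = folds[i]
        # Train on every fold except the one held out for validation.
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, val_idx


def cross_validate(n_samples, k, train_and_score):
    """Run train_and_score on every fold and return (mean score, per-fold scores)."""
    scores = [train_and_score(tr, va) for tr, va in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores), scores


# Toy labels (illustrative only).
labels = [0, 0, 0, 1, 1, 0, 1, 0, 0, 1]


def majority_baseline(train_idx, val_idx):
    """Stand-in for a model: predict the most common training label."""
    train_labels = [labels[i] for i in train_idx]
    majority = max(set(train_labels), key=train_labels.count)
    return sum(labels[i] == majority for i in val_idx) / len(val_idx)


mean_score, fold_scores = cross_validate(len(labels), 5, majority_baseline)
print(mean_score, fold_scores)
```

With a real network, `train_and_score` would train a fresh model on the training indices and return its metric on the validation indices. Because that means training the model k times, cross-validation can be expensive for large deep learning models.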

Other Evaluation Methods

In addition to accuracy metrics and cross-validation, there are other methods used to evaluate deep learning models. These include confusion matrices, receiver operating characteristic (ROC) curves, and precision-recall curves.

Confusion matrices summarize the model’s performance in a table showing the number of true positives, true negatives, false positives, and false negatives. A ROC curve plots the model’s true positive rate against its false positive rate as the decision threshold varies. A precision-recall curve plots precision against recall (recall is another name for the true positive rate) over the same range of thresholds.
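As a rough sketch of how these tools relate, the plain Python below counts the four confusion-matrix cells for a binary classifier and then recomputes them at several thresholds to get points on the ROC and precision-recall curves. The function names, the toy scores, and the convention of reporting precision as 1.0 when there are no positive predictions are assumptions made for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels encoded as 0 and 1."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 0 and p == 0:
            tn += 1
        else:  # t == 1 and p == 0, given the 0/1 encoding assumed above
            fn += 1
    return tp, fp, tn, fn


def curve_points(y_true, scores, thresholds):
    """Compute one ROC point and one precision-recall point per threshold."""
    points = []
    for thr in thresholds:
        # Predict positive when the score reaches the threshold.
        y_pred = [1 if s >= thr else 0 for s in scores]
        tp, fp, tn, fn = confusion_counts(y_true, y_pred)
        tpr = tp / (tp + fn) if (tp + fn) else 0.0  # recall
        fpr = fp / (fp + tn) if (fp + tn) else 0.0
        # Assumption: precision is taken as 1.0 when nothing is predicted positive.
        precision = tp / (tp + fp) if (tp + fp) else 1.0
        points.append({"threshold": thr, "fpr": fpr, "tpr": tpr, "precision": precision})
    return points


# Toy labels and predicted scores (illustrative only).
y_true = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.3, 0.7, 0.1]

points = curve_points(y_true, scores, [0.0, 0.5, 1.0])
for point in points:
    print(point)
```

Plotting `fpr` against `tpr` for many thresholds traces the ROC curve, while plotting `tpr` (recall) against `precision` traces the precision-recall curve.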

Conclusion

In conclusion, evaluating deep learning models is a crucial step in the machine learning process. By combining metrics such as precision, recall, and F1 score with cross-validation and tools such as confusion matrices and ROC curves, we can determine whether a model is accurate and reliable. So the next time you encounter a deep learning model, take a closer look at how it was evaluated to understand its true performance.

Email- contact@devopsschool.com