What is DataOps?

DataOps stands for Data Operations.

DataOps is a process-oriented, automated, and collaborative data management approach for designing, implementing, and managing data workflows and a distributed data architecture.

DataOps aims to:

- Deliver high value

- Manage risks

According to Gartner, “DataOps is a collaborative data management practice focused on improving the communication, integration and automation of data flows between data managers and data consumers across an organization”.

Chris Bergh is often described as the founder of DataOps.

How DataOps Works: Architecture

DataOps architecture and processes bring new business insights by enabling the rapid development and deployment of innovative, high-quality data analytics pipelines.

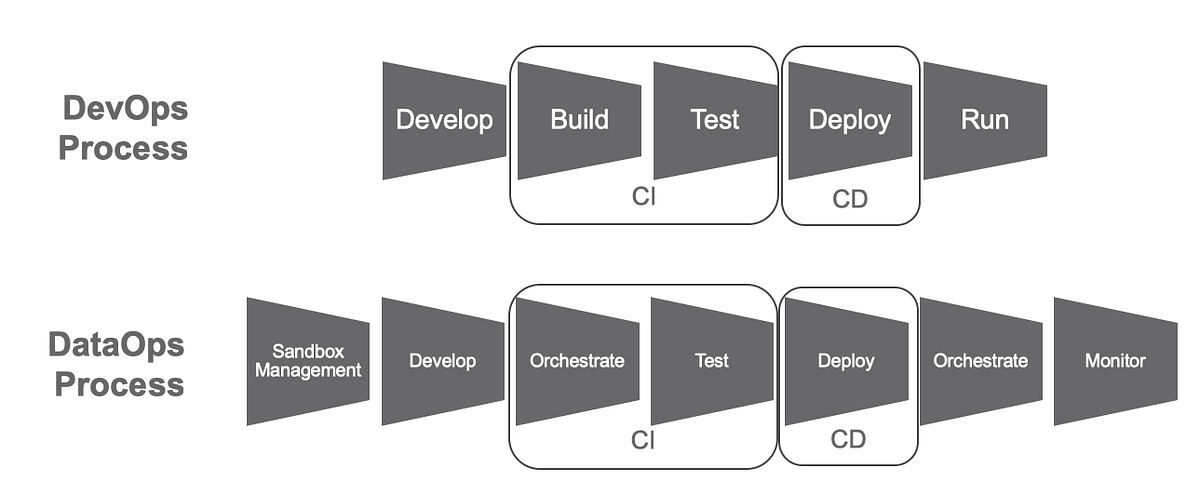

The aim of DataOps is to combine DevOps and Agile methodologies to manage data in alignment with business goals.

DataOps uses statistical process control (SPC) to monitor and control the data analytics pipeline. With SPC in place, the data flowing through an operational system is constantly monitored and verified to be working. If an anomaly occurs, the data analytics team can be notified through an automated alert.
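To make the SPC idea concrete, here is a minimal sketch in Python. It assumes the monitored metric is a per-run row count (a hypothetical choice) and uses a simple three-sigma rule: control limits are the mean of known-good baseline runs plus or minus three sample standard deviations. This is one common form of control limit, not the only one; dedicated SPC tooling may use moving ranges or additional run rules. The `alert` callback stands in for whatever notification channel a team actually uses.

```python
import statistics
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class ControlLimits:
    center: float
    lower: float
    upper: float


def compute_limits(baseline: Sequence[float], k: float = 3.0) -> ControlLimits:
    """Control limits = baseline mean +/- k sample standard deviations."""
    center = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)  # needs at least two baseline points
    return ControlLimits(center, center - k * sigma, center + k * sigma)


def monitor(metric: str, values: Sequence[float], limits: ControlLimits,
            alert: Callable[[str], None] = print) -> List[Tuple[int, float]]:
    """Check each new run against the limits; alert on every out-of-control point."""
    anomalies = []
    for run, value in enumerate(values):
        if not (limits.lower <= value <= limits.upper):
            anomalies.append((run, value))
            alert(f"ALERT {metric}: run {run} value {value} outside "
                  f"[{limits.lower:.1f}, {limits.upper:.1f}]")
    return anomalies


if __name__ == "__main__":
    # Hypothetical row counts from known-good pipeline runs.
    baseline = [1000, 1020, 990, 1010, 1005, 995, 1015, 985]
    limits = compute_limits(baseline)
    # The second run loads far fewer rows than usual and triggers an alert.
    monitor("orders.row_count", [1008, 640, 1012], limits)
```

With this baseline the limits come out at roughly 965.8 to 1039.2, so only the run with 640 rows is flagged.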

Breakdown of the DataOps Architecture

The DataOps architecture includes support for environment creation and management. This allows separate development, test, and production environments, which in turn support orchestration, monitoring, and test automation. The software automates impact review and new-analytics deployment so that changes can be examined and published continuously. In each environment, agents operate on behalf of the DataOps Platform to manage code and configuration, execute tasks, and return test results, logs, and runtime information. This allows the architecture to work across heterogeneous tools and systems. The DataOps Platform also combines several other functions that support the goals of rapid deployment and high quality with governance:
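The environment-and-agent arrangement described above can be sketched as follows. This is a hypothetical illustration, not any DataOps Platform's API: `EnvironmentAgent` and `promote` are invented names, and each agent simply records the deployed version, runs the supplied tests against its environment's configuration, and returns a small report with results and a log line. A change moves from dev to test to prod only while every environment's tests pass.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical test interface: receives an environment's config, returns True on pass.
Test = Callable[[Dict[str, str]], bool]


@dataclass
class EnvironmentAgent:
    """Hypothetical agent that works inside one environment on behalf of the platform."""
    name: str
    config: Dict[str, str] = field(default_factory=dict)
    deployed_version: str = "none"

    def deploy(self, version: str) -> None:
        self.deployed_version = version

    def run_tests(self, tests: List[Test]) -> Dict[str, object]:
        results = [test(self.config) for test in tests]
        return {
            "env": self.name,
            "version": self.deployed_version,
            "passed": all(results),
            "log": f"{sum(results)}/{len(results)} tests passed",
        }


def promote(version: str, agents: List[EnvironmentAgent],
            tests: List[Test]) -> List[Dict[str, object]]:
    """Deploy through the agents in order; stop at the first environment whose tests fail."""
    reports = []
    for agent in agents:
        agent.deploy(version)
        report = agent.run_tests(tests)
        reports.append(report)
        if not report["passed"]:
            break  # never promote past a failing environment
    return reports


if __name__ == "__main__":
    dev = EnvironmentAgent("dev", {"db_url": "sqlite://dev"})
    test_env = EnvironmentAgent("test", {})  # misconfigured: no db_url
    prod = EnvironmentAgent("prod", {"db_url": "sqlite://prod"})
    for r in promote("v2", [dev, test_env, prod], [lambda c: "db_url" in c]):
        print(r)
```

In this usage example the misconfigured test environment fails its check, so production keeps its previous version. Ordering the environments in a plain list is a simplifying choice; a real platform would also handle rollback and approvals.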

- Storage/Revision Control: Version control manages changes to artifacts; it is essential for governance and iterative development. (Examples: Git, Docker Hub)

- History and Metadata: Manage system and activity logs. (Example: MongoDB)

- Authorization and Permissions: Control access to environments. (Example: Auth0)

- Environment Secrets: Role-based access to tools and resources within environments. (Example: Vault)

- DataOps Metrics and Reports: Internal analytics provide a big-picture assessment of the state of the analytics and data team. We call this the CDO Dashboard. (Example: Tableau)

- Automated Deployment: Moving code and configuration from one environment (e.g., a test environment) to a production environment. (Examples: Jenkins, CircleCI)

- Environment Creation and Management: Treat your infrastructure as code so that you can create workspaces for your team with all the hardware, software, and test data sets they need. (Examples: Chef, Puppet, etc.)

- Orchestrate, Test, Monitor: As your pipelines run, orchestrate all the tools involved, test and monitor the data, and alert if something goes wrong. (Examples: Airflow, Great Expectations, Grafana, etc.)
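The "Orchestrate, Test, Monitor" function can be illustrated with a tiny orchestrator. This is a hypothetical sketch in plain Python (3.9+ for `graphlib`), not the API of Airflow, Great Expectations, or Grafana: steps run in dependency order, an optional data test runs after each step, and a failed test raises an alert and halts the pipeline so bad data never reaches later steps. The step names and the "no missing values" test are assumptions made for the example.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set, Tuple

Data = Dict[str, object]


def run_pipeline(steps: Dict[str, Callable[[Data], Data]],
                 deps: Dict[str, Set[str]],
                 checks: Dict[str, Callable[[Data], bool]],
                 alert: Callable[[str], None] = print) -> Tuple[List[str], bool]:
    """Run steps in dependency order, testing after each; halt and alert on failure.

    deps maps each step to the set of steps that must finish before it.
    Returns (steps that completed and passed their test, overall success).
    """
    data: Data = {}
    completed: List[str] = []
    for name in TopologicalSorter(deps).static_order():
        data = steps[name](data)
        check = checks.get(name)
        if check is not None and not check(data):
            alert(f"ALERT: test after step '{name}' failed; halting pipeline")
            return completed, False
        completed.append(name)
    return completed, True


if __name__ == "__main__":
    steps = {
        "extract": lambda d: {**d, "rows": [3, 1, None, 2]},
        "transform": lambda d: {**d, "rows": [r for r in d["rows"] if r is not None]},
        "load": lambda d: {**d, "loaded": len(d["rows"])},
    }
    deps = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
    # Data test: no missing values may survive the transform step.
    checks = {"transform": lambda d: all(r is not None for r in d["rows"])}
    print(run_pipeline(steps, deps, checks))
```

If the transform step were broken so that it no longer dropped missing values, the check would fail, the alert would fire, and `load` would never run.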

Multi-Location DataOps Data Architecture

Companies are rapidly moving their work from on-premises systems to the cloud, and many enterprises are choosing to use multiple cloud providers as well. As a result, your data analytics workloads can span multiple physical locations and multiple teams, while your customers care only about the result of that coordination. How can you do DataOps across those locations and teams without ending up with a “Data Ooooops”? Think of a “hub and spoke” model for your DataOps data architecture. As shown in figure 4, the DataOps Platform is the hub for your distributed sites engaged in development and operations. Testing is also coordinated between the sites.
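The hub-and-spoke model can be sketched as a hub that dispatches the same task to an agent at every site and aggregates the results, which is also how a coordinated cross-site test might work. This is a hypothetical illustration: `Hub`, `SiteAgent`, and the site names are invented, and each agent's `run` callable stands in for whatever actually executes work at an on-premises or cloud location.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class SiteAgent:
    """Hypothetical spoke: runs work at one location and reports back to the hub."""
    site: str
    run: Callable[[str], bool]  # task name -> success flag


class Hub:
    """Hypothetical hub that dispatches tasks to every site and aggregates results."""

    def __init__(self, agents: List[SiteAgent]) -> None:
        self.agents: Dict[str, SiteAgent] = {a.site: a for a in agents}

    def dispatch(self, task: str) -> Dict[str, bool]:
        """Send one task to every spoke and collect each site's result."""
        return {site: agent.run(task) for site, agent in self.agents.items()}

    def coordinated_test(self, test_task: str) -> Tuple[bool, List[str]]:
        """Run a test at all sites; pass only if every site passes."""
        results = self.dispatch(test_task)
        failed = sorted(site for site, ok in results.items() if not ok)
        return not failed, failed


if __name__ == "__main__":
    hub = Hub([
        SiteAgent("on-prem", lambda task: True),
        SiteAgent("cloud-a", lambda task: True),
        SiteAgent("cloud-b", lambda task: task != "row_count_check"),  # simulated failure
    ])
    print(hub.coordinated_test("row_count_check"))
```

Keeping the pass/fail decision at the hub means a single failing site blocks the result for everyone, which matches the idea that customers only see the combined outcome.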

Building DataOps Into an Existing Data Architecture

Whether your current data architecture is on-premises, in the cloud, or both, and whether you have a standard environment or live in a multi-tool world, you can evolve your system to incorporate DataOps functionality. You can build a DataOps Platform yourself or leverage solutions from the vibrant and growing DataOps ecosystem. DataOps can help you architect your data operations pipeline to support rapid development and deployment of new analytics, robust quality, and high levels of staff productivity.

Best institute for learning DataOps

In my opinion, the best institute is DevOpsSchool. Why do I say this? Because in a very short time this institute has built a tremendous track record of successfully training many participants, whether students, individual professionals, or entire companies. Its trainers have 15+ years of IT experience and are highly skilled in their domains. The institute's USP is that it provides live, instructor-led online training with many benefits that support learning. So if you are looking for a specific institute for training and certification, this is the one to choose.

I’m a DevOps/SRE/DevSecOps/Cloud expert passionate about sharing knowledge and experiences. I work at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I reviewed, and SEO strategies at Wizbrand.

Please find my social handles below:

Rajesh Kumar Personal Website

Rajesh Kumar at YOUTUBE

Rajesh Kumar at INSTAGRAM

Rajesh Kumar at X

Rajesh Kumar at FACEBOOK

Rajesh Kumar at LINKEDIN

Rajesh Kumar at PINTEREST

Rajesh Kumar at QUORA

Rajesh Kumar at WIZBRAND

Starting: 1st of Every Month

+91 8409492687

Contact@DevOpsSchool.com
