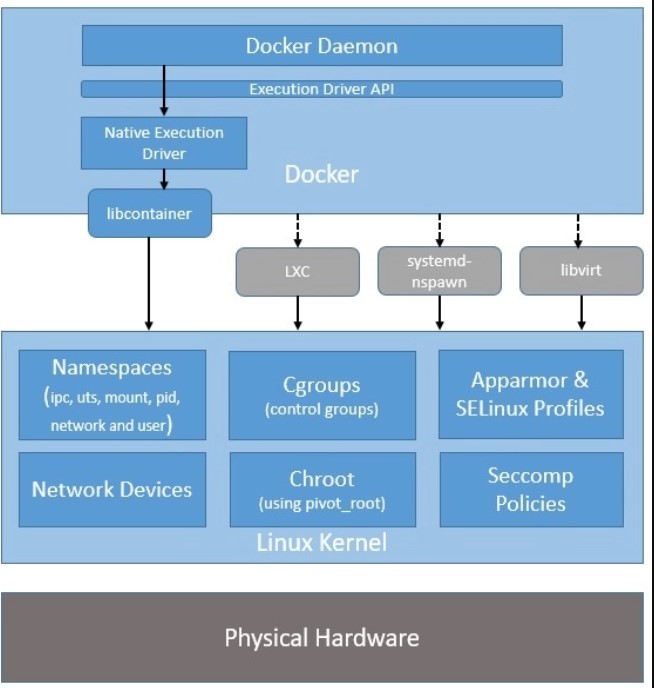

Docker architecture consists of several components that work together to provide a platform for building, shipping, and running containers. These components include:

- Docker Engine: This is the core component of Docker, and it is responsible for building and running containers. The Docker Engine provides a REST API for interacting with containers, as well as a command-line interface for managing containers.

- Docker Images: A Docker image is a read-only template, built up from layers, from which new containers are launched. Images are stored in a registry, such as Docker Hub or a private registry, and can be pulled to a local machine for use.

- Docker Registries: Docker registries are where Docker images are stored and distributed. Docker Hub is a public registry that is free to use, but organizations can also set up their own private registries to store and distribute images.

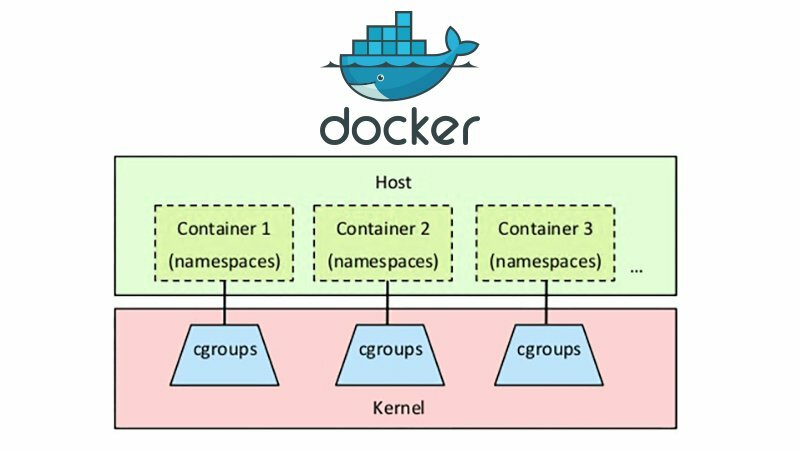

- Docker Containers: Docker containers are isolated environments that run applications. Containers are built from images and can be launched on any host that has the Docker Engine installed.

- Docker Compose: Docker Compose is a tool for defining and running multi-container applications using a YAML file. It allows developers to define the services that make up an application, as well as the relationships between those services, and then start and stop the entire application with a single command.

- Docker Swarm: Docker Swarm is Docker's native clustering solution, providing high availability and scalability for containers. Swarm allows administrators to manage a group of Docker nodes as a single virtual host, making it easier to deploy and manage containers at scale.

In summary, Docker architecture consists of several components, including the Docker Engine, Docker Images, Docker Registries, Docker Containers, Docker Compose, and Docker Swarm. These components work together to provide a platform for building, shipping, and running containers.
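To make the Docker Compose item above more concrete, here is a minimal sketch of a two-service application. The service names (web, cache), the images, and the published port are assumptions chosen for illustration, and the file is only validated if the Compose plugin happens to be installed.

```shell
#!/bin/sh
set -eu

# Hypothetical project directory; any empty directory works.
project_dir=$(mktemp -d)

# A two-service application: a web server that depends on a cache.
# Service names, images, and the published port are illustrative only.
cat > "$project_dir/compose.yaml" <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
    depends_on:
      - cache
  cache:
    image: redis:alpine
EOF

# Validate the file only when the Compose plugin is available.
if command -v docker >/dev/null 2>&1 && docker compose version >/dev/null 2>&1; then
    docker compose -f "$project_dir/compose.yaml" config --quiet
    echo "compose file is valid: $project_dir/compose.yaml"
else
    echo "docker compose not found; wrote $project_dir/compose.yaml only"
fi
```

With the file in place, `docker compose -f <file> up -d` starts both services and `docker compose -f <file> down` stops and removes them.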

What is Docker Workflow?

The Docker workflow involves several steps to build, package, and run applications as containers. The basic steps are as follows:

- Writing Dockerfiles: A Dockerfile is a text file that contains the instructions for building a Docker image. It specifies the base image to use, the application code to include, and the environment variables to set.

- Building Docker Images: Docker images are built from Dockerfiles using the “docker build” command. The build process follows the instructions in the Dockerfile to create a new image that includes the application code and its dependencies.

- Storing Docker Images: Docker images can be stored in a Docker registry, such as Docker Hub or a private registry. Images are stored as layers, so layers can be shared between images and new images can be built on top of existing ones.

- Running Docker Containers: Docker containers are launched from Docker images using the “docker run” command. Containers are isolated environments that run applications, and they can be launched on any host that has the Docker Engine installed.

- Managing Docker Containers: Docker provides several tools for managing containers, including the “docker ps” command to list running containers, the “docker stop” command to stop containers, and the “docker rm” command to remove containers.

- Deploying Docker Containers: Docker containers can be deployed to a variety of environments, including physical servers, virtual machines, cloud platforms, and more. Docker Compose and Docker Swarm are two tools that can be used to deploy and manage containers at scale.

In summary, the Docker workflow involves several steps, including writing Dockerfiles, building Docker images, storing Docker images, running Docker containers, managing Docker containers, and deploying Docker containers. The Docker platform provides a set of tools for building, packaging, and running applications as containers, making it easier to deploy and manage applications at scale.
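The steps above can be sketched as a short shell session. This is only an illustration under assumptions: the image tag, container name, and Dockerfile contents are made up for the example, and the Docker commands run only if a daemon is reachable. The storing step is left as a comment because pushing requires a registry login.

```shell
#!/bin/sh
set -eu

# Hypothetical build context and names; adjust to your project.
context_dir=$(mktemp -d)
image_tag="hello-workflow:demo"
container_name="hello-workflow"

# 1. Write a Dockerfile: base image, an environment variable, and a command.
cat > "$context_dir/Dockerfile" <<'EOF'
FROM alpine:3.19
ENV GREETING="hello from a container"
CMD ["sh", "-c", "echo $GREETING; sleep 30"]
EOF

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    # 2. Build the image from the Dockerfile in the context directory.
    docker build -t "$image_tag" "$context_dir"
    # 3. Storing would be a "docker push" to a registry (needs a login; skipped).
    # 4. Run a container from the image in the background.
    docker run -d --name "$container_name" "$image_tag"
    # 5. Manage it: list, stop, and remove.
    docker ps --filter "name=$container_name"
    docker stop "$container_name"
    docker rm "$container_name"
else
    echo "Docker daemon not reachable; wrote $context_dir/Dockerfile only"
fi
```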

Docker CLI (docker)

/usr/bin/docker

“Docker” is often used to refer to the whole set of Docker tools, and in the beginning it was a monolith. Today docker-cli is only responsible for user-friendly communication with Docker.

So commands like docker build ... and docker run ... are handled by the Docker CLI and result in calls to the dockerd API.

Dockerd

/usr/bin/dockerd

The Docker daemon, dockerd, listens for Docker API requests and manages the host’s container life cycles by utilizing containerd.

dockerd can listen for Docker Engine API requests on three types of socket: unix, tcp, and fd. By default, a unix domain socket is created at /var/run/docker.sock, which requires either root permission or docker group membership. On systemd-based systems, you can communicate with the daemon via systemd socket activation by using dockerd -H fd://.
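As a rough illustration of what the CLI does under the hood, the Engine API can be queried directly over the default unix socket with curl. This is a sketch, not the CLI's actual implementation: the helper name engine_get and the DOCKER_SOCK override are made up for this example, while /_ping and /version are Engine API endpoints.

```shell
#!/bin/sh
set -eu

# Default socket path named in the text. DOCKER_SOCK is a variable invented
# for this sketch, not a Docker setting.
sock="${DOCKER_SOCK:-/var/run/docker.sock}"

# Send a GET request to the Engine API over the unix socket.
# The host part of the URL is a placeholder; the socket decides the target.
engine_get() {
    curl --silent --show-error --unix-socket "$sock" "http://localhost$1"
}

if [ -S "$sock" ] && command -v curl >/dev/null 2>&1; then
    # Liveness check, then version details as JSON.
    engine_get /_ping || echo "request failed (root or docker group membership is required)"
    echo
    engine_get /version || echo "request failed (root or docker group membership is required)"
    echo
else
    echo "no Docker socket at $sock (or curl missing); nothing to query"
fi
```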

There are many configuration options for the daemon, which are worth checking if you work with docker (dockerd).

My impression is that dockerd is here to serve all the features of Docker (or Docker EE) platform, while actual container life-cycle management is “outsourced” to containerd.

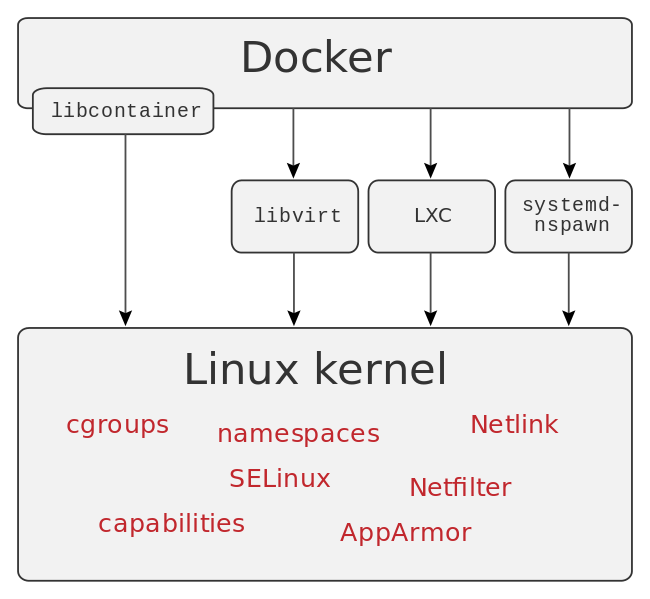

Containerd

/usr/bin/docker-containerd

containerd was introduced in Docker 1.11 and has since taken over the main responsibility for managing the container life cycle. containerd is the executor for containers, but its scope is wider than just executing them. It also takes care of:

- Image push and pull

- Managing storage

- Executing containers, of course, by calling runc with the right parameters

- Managing network primitives for interfaces

- Managing network namespaces, so that containers can join existing namespaces

containerd fully leverages the OCI runtime specification [1], the image format specifications, and the OCI reference implementation (runc). Because of its massive adoption, containerd is the industry standard for implementing OCI. It is currently available for Linux and Windows.

As shown in the picture above, containerd includes a daemon that exposes a gRPC API over a local UNIX socket. The API is a low-level one, designed for higher layers to wrap and extend. containerd uses runc to run containers according to the OCI specification.

containerd is based on the Docker Engine’s core container runtime, to benefit from its maturity and existing contributors. However, containerd is designed to be embedded into a larger system rather than used directly by developers or end users.

Well, now other vendors can use containers without having to deal with docker-related parts.

Let’s go through some subsystems of containerd…

RunC

/usr/bin/docker-runc

runc (the OCI runtime) can be seen as a component of containerd.

runc is a command-line client for running applications packaged according to the OCI format and is a compliant implementation of the OCI spec.

Containers are configured using bundles. A bundle for a container is a directory that includes a specification file named “config.json” and a root filesystem. The root filesystem contains the contents of the container.

Assuming you have an OCI bundle, you can execute the container:

    # run as root
    cd /mycontainer
    runc run mycontainerid
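If you do not have a bundle yet, one way to create it is sketched below: export the filesystem of an existing image into rootfs and let runc generate a default config.json. The temporary bundle directory and the busybox image are assumptions for this example, and each step runs only if the required tool is present.

```shell
#!/bin/sh
set -eu

# Hypothetical bundle location; the text above uses /mycontainer.
bundle=$(mktemp -d)
mkdir -p "$bundle/rootfs"

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    # Fill the root filesystem from the busybox image.
    cid=$(docker create busybox)
    docker export "$cid" | tar -C "$bundle/rootfs" -xf -
    docker rm "$cid" >/dev/null
fi

if command -v runc >/dev/null 2>&1; then
    # Generate a default config.json next to rootfs/.
    (cd "$bundle" && runc spec)
    echo "bundle ready; as root: cd $bundle && runc run mycontainerid"
else
    echo "runc not found; created $bundle/rootfs only"
fi
```

The generated config.json starts a shell with a terminal attached, so the final runc run step is meant to be executed interactively.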

containerd-ctr

/usr/bin/docker-containerd-ctr

(docker-)containerd-ctr is a barebone CLI (ctr) designed specifically for development and debugging, for direct communication with containerd. It is included in the containerd releases, and is therefore less interesting for Docker users.

containerd-shim

/usr/bin/docker-containerd-shim

The shim allows for daemon-less containers. According to Michael Crosby, it basically sits as the parent of the container’s process to facilitate a few things:

- First, it allows the runtimes, i.e. runc, to exit after it starts the container. This way we don’t have to have the long-running runtime processes for containers.

- Second, it keeps the STDIO and other fds open for the container in case containerd and/or docker both die. If the shim was not running, then the parent side of the pipes or the TTY master would be closed and the container would exit.

- Finally, it allows the container’s exit status to be reported back to a higher-level tool like docker without having to be the actual parent of the container’s process and do a wait4.

How it all works together

We can do an experiment. First we check what Docker processes are running right after Docker installation.

    ps fxa | grep docker -A 3
    # prints:
    2239 ? Ssl 0:27 /usr/bin/dockerd -H fd://
    2397 ? Ssl 0:18 \_ docker-containerd -l unix:///var/run/docker/libcontainerd/docker-containerd.sock ...
    ...

Well, at this point we see that dockerd is started and that containerd is running as its child process. As described, dockerd needs containerd. Next we start a container:

    docker run -d alpine sleep 60

Now we should see it in the process list for the next 60 seconds. Let’s check again:

    ps fxa | grep dockerd -A 3
    # prints:
    2239 ? Ssl 0:28 /usr/bin/dockerd -H fd://
    2397 ? Ssl 0:19 \_ docker-containerd -l unix:///var/run/docker/libcontainerd/docker-containerd.sock ...
    15476 ? Sl 0:00 \_ docker-containerd-shim 3da7... /var/run/docker/libcontainerd/3da7.. docker-runc
    15494 ? Ss 0:00 \_ sleep 60

Now we see the whole process chain:

dockerd –> containerd –> containerd-shim –> “sleep 60” (desired process in the container).

We do not see runc in the chain because containerd-shim takes over after runc has started the container. We also know that, in theory, containerd-shim can survive a crash of containerd, but in the current Docker version this is not activated by default.

However, it’s a pretty long chain, with the possible disadvantages that such chains have.
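The same chain can be read programmatically by walking the parent links of a process. The sketch below is Linux-only and reads /proc directly; the function name process_chain is made up for this example. It traces the current shell by default, and for a container you would pass the PID reported by `docker inspect --format '{{.State.Pid}}' <container>` (run as root to see all ancestors).

```shell
#!/bin/sh

# Print the chain of parent processes for a PID, one "pid name" pair per line,
# starting at the given process and walking up until there is no parent left.
process_chain() {
    pid=$1
    while [ -n "$pid" ] && [ "$pid" -gt 0 ] && [ -r "/proc/$pid/status" ]; do
        name=""
        ppid=""
        # /proc/<pid>/status holds "Name:" and "PPid:" lines among others.
        while read -r key value _; do
            case $key in
                Name:) name=$value ;;
                PPid:) ppid=$value ;;
            esac
        done < "/proc/$pid/status"
        printf '%s %s\n' "$pid" "$name"
        pid=$ppid
    done
}

# Example: trace the shell that runs this script.
process_chain "$$"
```

For the sleep process from the experiment, the output would be expected to list the shim, containerd, and dockerd above it, in that order.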

How it all works in Kubernetes

You might imagine that Kubernetes does not need the Docker-specific parts. As of now, that is exactly the case…

Kubernetes “speaks” with containerd directly, as depicted in the picture. If interested, check how it was in between.
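One way to check which runtime a cluster actually uses is to read the container runtime version that each node reports. The sketch below assumes kubectl is installed and a cluster is reachable, and otherwise only demonstrates the parsing; the helper name runtime_name and the sample value are made up for this example.

```shell
#!/bin/sh
set -eu

# Extract the runtime name from a value such as "containerd://1.7.2".
runtime_name() {
    printf '%s\n' "${1%%://*}"
}

if command -v kubectl >/dev/null 2>&1 && kubectl cluster-info >/dev/null 2>&1; then
    # One line per node: "<node name> <runtime>://<version>".
    kubectl get nodes \
        -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.nodeInfo.containerRuntimeVersion}{"\n"}{end}' |
    while read -r node runtime; do
        echo "$node runs on $(runtime_name "$runtime")"
    done
else
    echo "no reachable cluster; sample value parses as: $(runtime_name 'containerd://1.7.2')"
fi
```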

I hope this might help all Docker users. Give me a hint if something is not precise.

[1] The OCI Runtime Specification outlines how to run a container’s “filesystem bundle” that is unpacked on disk. At a high level, an OCI implementation would download an OCI Image (OCI Image Specification) and then unpack that image into an OCI Runtime filesystem bundle. At this point, the OCI Runtime Bundle would be run by an OCI Runtime.

Reference

- https://alexander.holbreich.org/docker-components-explained/

I’m a DevOps/SRE/DevSecOps/Cloud Expert passionate about sharing knowledge and experiences. I am working at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I reviewed , and SEO strategies at Wizbrand.

