The Kubernetes scheduler can be constrained to place a pod on particular nodes in a few different ways. In your Pod specification, you can declare that a Pod should be dedicated to specific nodes using any of the following:

- Node Selector

- Node Affinity

- Pod Affinity aka inter-pod affinity/anti-affinity

- Taints and Tolerations

In this post, I discuss Node Selector and Node Affinity.

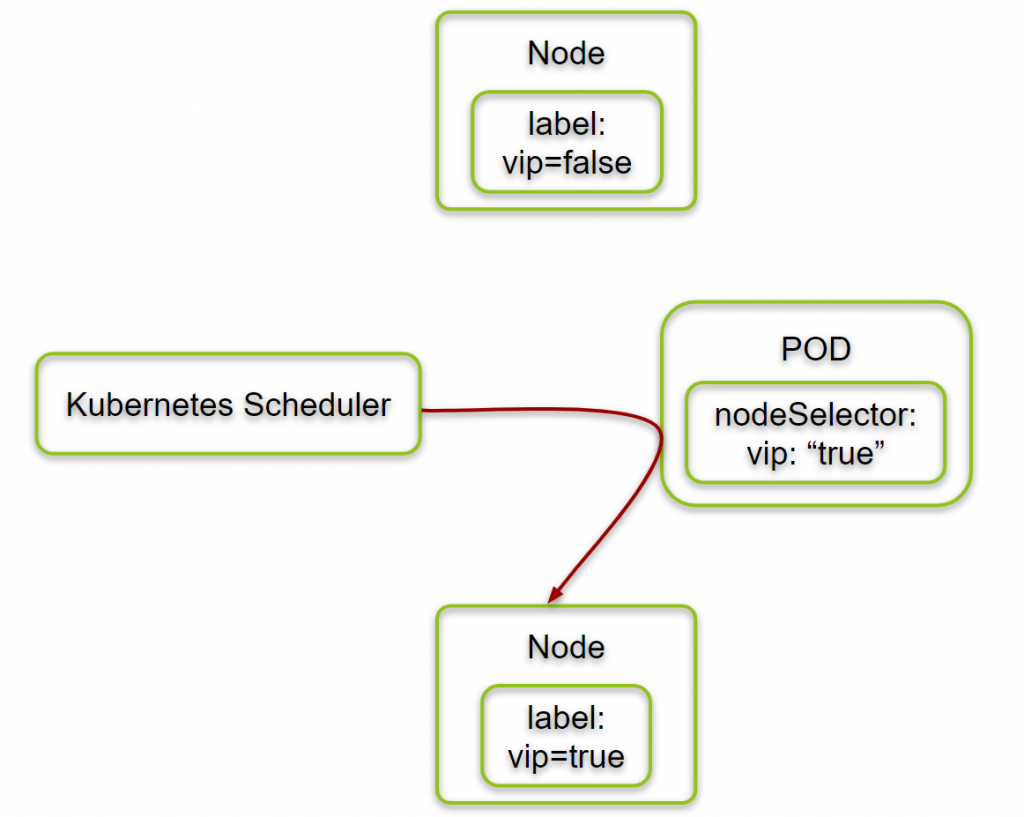

nodeSelector

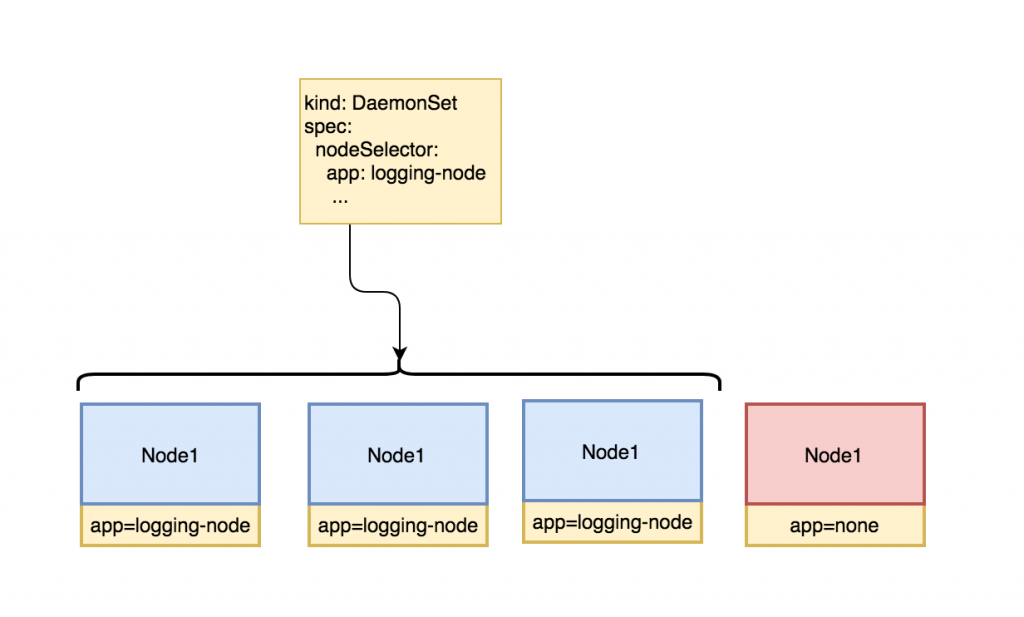

nodeSelector is the simplest recommended form of node selection constraint. nodeSelector is a field of PodSpec. It specifies a map of key-value pairs. For the pod to be eligible to run on a node, the node must have each of the indicated key-value pairs as labels (it can have additional labels as well).

Interlude: built-in node labels

In addition to labels you attach, nodes come pre-populated with a standard set of labels:

- kubernetes.io/hostname

- failure-domain.beta.kubernetes.io/zone (deprecated in favor of topology.kubernetes.io/zone)

- failure-domain.beta.kubernetes.io/region (deprecated in favor of topology.kubernetes.io/region)

- topology.kubernetes.io/zone

- topology.kubernetes.io/region

- beta.kubernetes.io/instance-type (deprecated in favor of node.kubernetes.io/instance-type)

- node.kubernetes.io/instance-type

- kubernetes.io/os

- kubernetes.io/arch
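As a minimal sketch of how the built-in labels can be used, the pod below asks for a Linux node with an amd64 CPU through nodeSelector. The pod name `linux-amd64-only` and the container name `app` are hypothetical and chosen only for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: linux-amd64-only        # hypothetical name
spec:
  containers:
  - name: app                   # hypothetical name
    image: nginx
  # Both labels are set on every node by the kubelet, so no manual
  # labelling is needed. The node must match both entries.
  nodeSelector:
    kubernetes.io/os: linux
    kubernetes.io/arch: amd64
```

Because nodeSelector entries are combined with a logical AND, a node that runs Linux on a different architecture would not be eligible.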

The affinity feature consists of two types of affinity:

- node affinity

- inter-pod affinity/anti-affinity

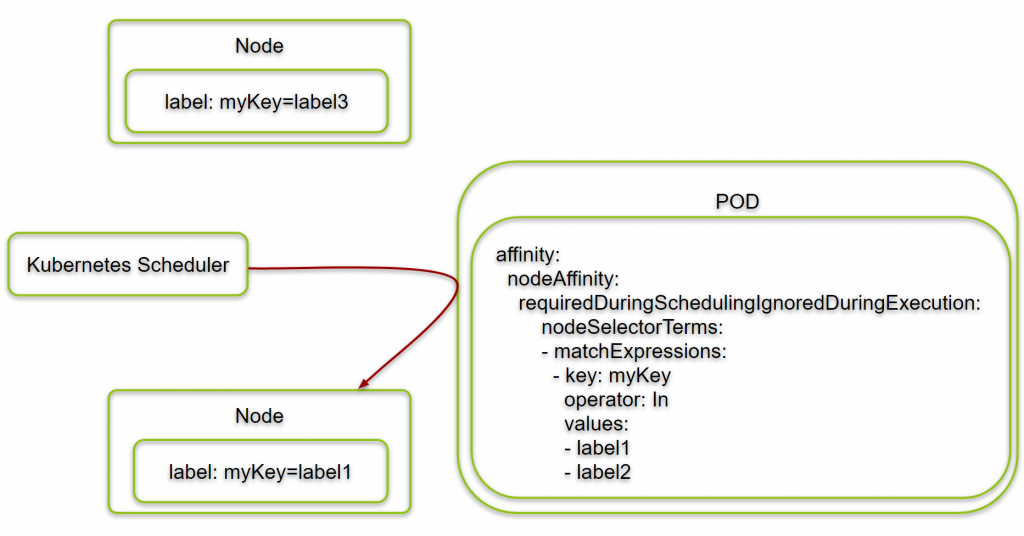

nodeAffinity

Kubernetes also offers more nuanced ways of expressing placement rules: nodeAffinity and podAffinity.

nodeSelector provides a very simple way to constrain pods to nodes with particular labels. Node affinity is conceptually similar to nodeSelector, but it gives you a more expressive way to constrain pods to nodes with particular labels.

The affinity/anti-affinity feature greatly expands the types of constraints you can express. The key enhancements are:

- The affinity/anti-affinity language is more expressive. It offers more matching rules than exact matches combined with a logical AND;

- you can indicate that the rule is “soft” or a “preference” rather than a hard requirement, so if the scheduler can’t satisfy it, the pod will still be scheduled;

- you can constrain against labels on other pods running on the node (or other topological domain), rather than against labels on the node itself, which allows rules about which pods can and cannot be co-located.
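To make the first enhancement concrete, here is a small sketch that uses operators other than an exact match. The pod name and the labels `disktype`, `example.com/maintenance`, and `example.com/cpu-cores` are hypothetical; they stand in for whatever labels your nodes actually carry.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity-operators-demo          # hypothetical name
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        # Expressions inside one term are ANDed together;
        # separate terms in nodeSelectorTerms are ORed.
        - matchExpressions:
          - key: disktype                  # hypothetical label
            operator: In                   # any one of the listed values
            values: ["ssd", "nvme"]
          - key: example.com/maintenance   # hypothetical label
            operator: DoesNotExist         # the node must not carry this key
          - key: example.com/cpu-cores     # hypothetical label
            operator: Gt                   # value is compared as an integer
            values: ["8"]
  containers:
  - name: app
    image: nginx
```

Node affinity accepts the operators In, NotIn, Exists, DoesNotExist, Gt, and Lt, none of which can be expressed with a plain nodeSelector map.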

The affinity feature consists of two types of affinity:

- node affinity – Node affinity is like the existing nodeSelector (but with the first two benefits listed above)

- inter-pod affinity/anti-affinity – Inter-pod affinity/anti-affinity constrains against pod labels rather than node labels, as described in the third item listed above, in addition to having the first two benefits.

These are fields under the Pod spec (spec.affinity) and use built-in or user-defined labels to dictate where pods are scheduled. Affinity differs from nodeSelector in the following ways:

- Schedule a pod based on which other pods are or are not running on a node.

- Request, without requiring, that a pod run on a node.

- Specify a set of allowable values instead of a single value requirement.

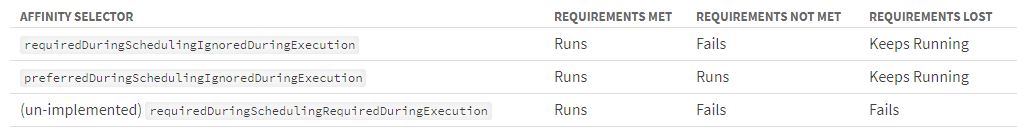

Types of node affinity

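There are currently two types of node affinity:

- requiredDuringSchedulingIgnoredDuringExecution: a hard rule. The pod is scheduled only on a node that satisfies it.

- preferredDuringSchedulingIgnoredDuringExecution: a soft rule. The scheduler tries to satisfy it but still schedules the pod if it cannot.

In both names, IgnoredDuringExecution means that if a node's labels change after the pod has been scheduled, the pod keeps running on that node.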
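The sketch below combines one hard rule with two weighted soft rules, to illustrate how the two types interact. The pod name, the `disktype` label, and the zone value `zone-a` are hypothetical placeholders.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: weighted-preference-demo       # hypothetical name
spec:
  affinity:
    nodeAffinity:
      # Hard rule: only Linux nodes are candidates at all.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/os
            operator: In
            values: ["linux"]
      # Soft rules: each weight (1-100) is added to a node's score
      # when that node satisfies the preference.
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 80
        preference:
          matchExpressions:
          - key: disktype              # hypothetical label
            operator: In
            values: ["ssd"]
      - weight: 20
        preference:
          matchExpressions:
          - key: topology.kubernetes.io/zone
            operator: In
            values: ["zone-a"]         # hypothetical zone name
  containers:
  - name: app
    image: nginx
```

Among the nodes that pass the hard rule, a node matching both preferences scores highest, but the pod is still scheduled if no node matches either preference.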

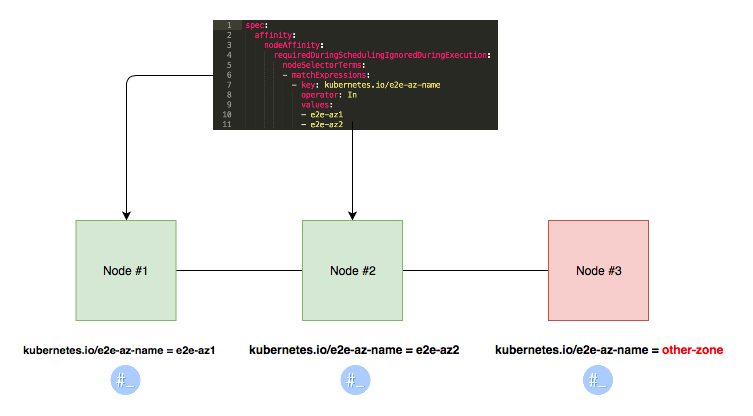

nodeAffinity example

Let's take an example of deploying an nginx pod and an httpd pod with a more complicated set of requirements:

- nginx cannot run on the same node as httpd.

- httpd should run on a node with the x-web:yes label, but can run anywhere if no such node is available.

- nginx must run on a node with the y-web:yes label, and scheduling should fail if no such node exists.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: with-node-affinity
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/e2e-az-name
            operator: In
            values:
            - e2e-az1
            - e2e-az2
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: another-node-label-key
            operator: In
            values:
            - another-node-label-value
  containers:
  - name: with-node-affinity
    image: k8s.gcr.io/pause:2.0
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    nginx: "yes"          # label values must be strings, so quote yes
spec:
  affinity:
    nodeAffinity:
      # Hard rule: nginx stays Pending unless a node has y-web=yes (or true).
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: y-web
            operator: In
            values: ["yes", "true"]
    podAntiAffinity:
      # Hard rule: never share a node with a pod that carries the httpd label.
      # Exists takes no values; topologyKey is mandatory for pod (anti-)affinity.
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: httpd
            operator: Exists
        topologyKey: kubernetes.io/hostname
  containers:
  - name: nginx
    image: nginx
---
apiVersion: v1
kind: Pod
metadata:
  name: httpd
  labels:
    httpd: "yes"          # the label that the nginx anti-affinity rule matches
spec:
  affinity:
    nodeAffinity:
      # Soft rule: prefer nodes with x-web=yes (or true), but run anywhere.
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: x-web
            operator: In
            values: ["yes", "true"]
  containers:
  - name: httpd
    image: httpd
```

```yaml
# nodeSelector: the node must carry every listed key-value pair as a label.
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    env: test
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  nodeSelector:
    key1: value1
    key2: value2
...
---
# nodeSelector with a single user-defined label.
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    env: test
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  nodeSelector:
    disktype: ssd
...
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    env: test
spec:
  containers:
  - name: nginx
    image: nginx
  nodeSelector:
    beta.kubernetes.io/instance-type: m3.medium
    # nodeSelector values are strings, so a boolean-looking value is quoted.
    example.com/load-balancer: "true"
---
apiVersion: v1
kind: Pod
metadata:
  name: httpd
  labels:
    env: test
spec:
  containers:
  - name: httpd
    image: httpd
  nodeSelector:
    failure-domain.beta.kubernetes.io/region: us-west-2
```

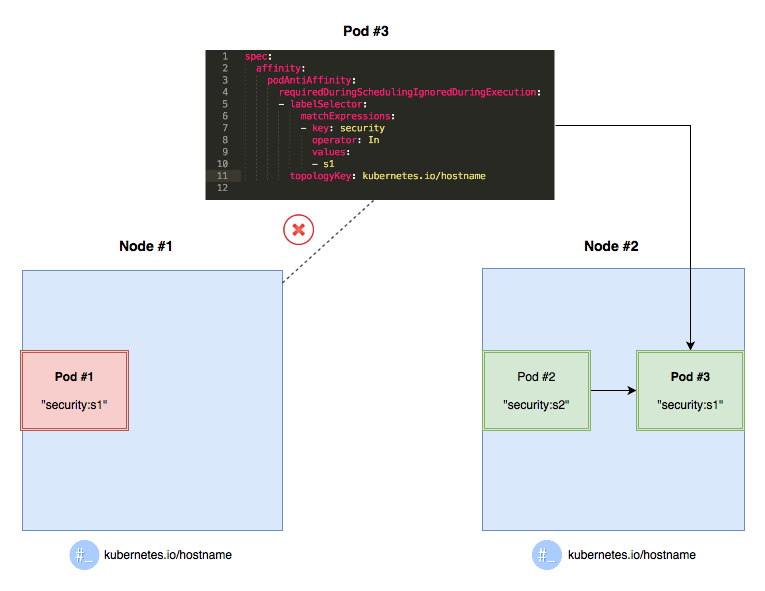

inter-pod affinity/anti-affinity

Inter-pod affinity and anti-affinity allow you to constrain which nodes your pod is eligible to be scheduled on, based on the labels of pods that are already running on the node rather than on the labels of the node itself.

An example of a pod that uses pod affinity:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: with-pod-affinity
spec:
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: security
            operator: In
            values:
            - S1
        topologyKey: failure-domain.beta.kubernetes.io/zone
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: security
              operator: In
              values:
              - S2
          topologyKey: failure-domain.beta.kubernetes.io/zone
  containers:
  - name: with-pod-affinity
    image: k8s.gcr.io/pause:2.0
```
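A common use of anti-affinity is to keep replicas of the same workload on different nodes. The following is a minimal sketch of that pattern, assuming a hypothetical Deployment named `web` whose pods carry the label `app: web`; it is an illustration and not part of the examples above.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                          # hypothetical name
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web                     # hypothetical label
    spec:
      affinity:
        podAntiAffinity:
          # Hard rule: do not place this pod in a topology domain that
          # already runs a pod labelled app=web.
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values: ["web"]
            # One domain per node, so at most one replica lands on each node.
            topologyKey: kubernetes.io/hostname
      containers:
      - name: web
        image: nginx
```

Because the rule is required rather than preferred, a cluster with fewer than three schedulable nodes would leave the extra replicas Pending. Using preferredDuringSchedulingIgnoredDuringExecution instead would relax that to a best-effort spread.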


