Large Language Models (LLMs) as of January 2025

By early 2025, many advanced Large Language Models (LLMs) have been released, each with features suited to particular applications. Below is an overview of some of the most notable ones:

1. GPT-4

- Developer: OpenAI

- Key Features: A multimodal model that accepts both text and images as input. OpenAI has not disclosed its parameter count; unconfirmed reports put it above one trillion.

2. Claude

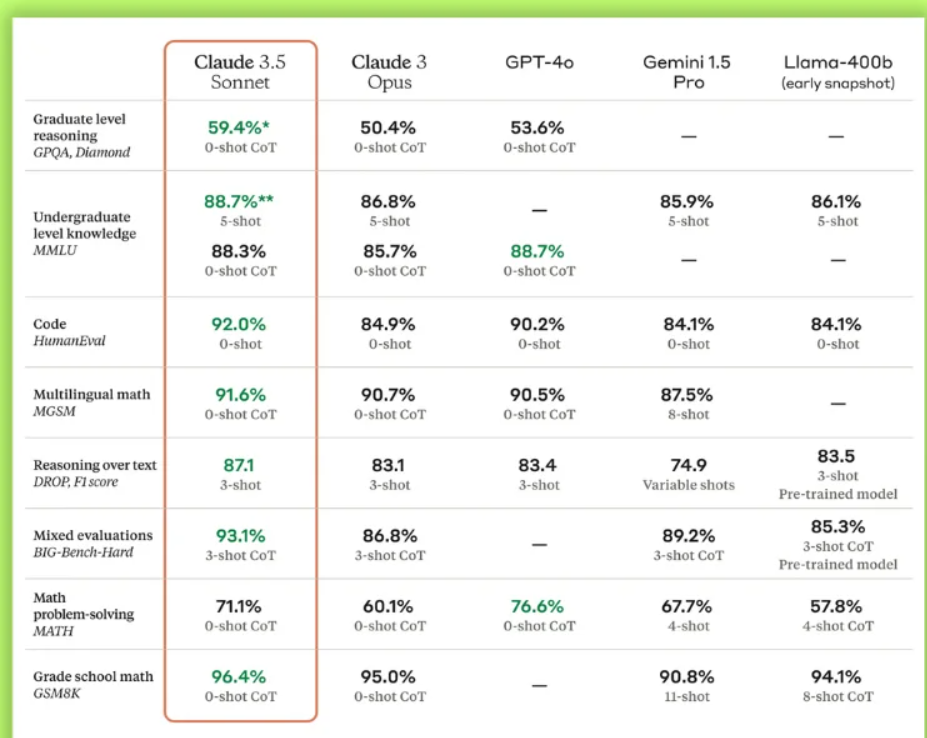

- Developer: Anthropic

- Key Features: Known for its advanced conversational abilities and safety features, making it suitable for tasks ranging from dialogue systems to coding.

3. Gemini

- Developer: Google AI

- Key Features: A multimodal model family that powers Google's assistant of the same name (the assistant was previously called Bard). It is strong at text generation, language translation, and coding tasks, and the assistant can draw on real-time data.

4. LLaMA (Large Language Model Meta AI)

- Developer: Meta

- Key Features: Openly available weights, with Llama 3.1 scaling up to 405 billion parameters and Llama 3.2 adding image input in its 11B and 90B vision models; designed for a variety of text and image applications.

5. Mistral Large 2

- Developer: Mistral AI

- Key Features: Offers 123 billion parameters with an extended context window of 128,000 tokens, optimized for computational efficiency and document processing.

6. Vicuna

- Developer: LMSYS

- Key Features: Built on LLaMA, this model is fine-tuned with conversational data, improving its performance in real-world applications.

7. OLMo 2

- Developer: Allen Institute for AI (Ai2)

- Key Features: Available in 7B and 13B parameter versions, particularly strong in question answering and summarization tasks.

8. StableLM

- Developer: Stability AI

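- Key Features: Open-source, with released models ranging from roughly 1.6B to 12B parameters (much larger sizes were planned but, as far as is publicly known, not released); recognized for its flexibility and broad applicability.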

9. Cohere

- Developer: Cohere Inc.

- Key Features: The Command model family, offered mainly through an API, with weights for some models (such as Command R) published for research use. Trained on a diverse dataset and strong at multilingual tasks.

10. GLM-130B

- Developer: Tsinghua University

- Key Features: A powerful model designed for various natural language processing tasks.

11. DeepSeek

- Developer: DeepSeek

- Key Features: Gained recognition for efficient open-weight models; DeepSeek-V3 (December 2024) is a mixture-of-experts model with 671 billion total parameters.

12. Qwen

- Developer: Alibaba Cloud

- Key Features: Delivers strong performance across a wide range of NLP tasks.

13. Phi-1

- Developer: Microsoft

- Key Features: A compact model with 1.3 billion parameters, highly effective in Python coding tasks.

14. Gemma

- Developer: Google (Open Source)

- Key Features: First offered in 2B and 7B parameter variants (Gemma 2 added 9B and 27B), providing efficient performance for local applications.
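Parameter counts like those in the list above translate fairly directly into the memory needed just to hold a model's weights, which is often the first thing to check before running a model locally. The sketch below is a back-of-the-envelope estimate only: it assumes weights dominate memory and ignores activations, the KV cache, and framework overhead. The function name `weight_memory_gb` and the precision table are illustrative and do not come from any library.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: only the weights are counted; activations, KV cache,
# and framework overhead are ignored, so real usage will be higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 uses the same 2 bytes per parameter
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return the approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Parameter counts taken from the list above.
    models = {
        "Phi-1": 1.3e9,
        "Gemma 7B": 7e9,
        "OLMo 2 13B": 13e9,
        "Mistral Large 2": 123e9,
    }
    for name, n in models.items():
        fp16 = weight_memory_gb(n, "fp16")
        int4 = weight_memory_gb(n, "int4")
        print(f"{name:16s} fp16 ~{fp16:7.1f} GB   int4 ~{int4:6.1f} GB")
```

By this estimate a 7B model needs about 14 GB at 16-bit precision, while a 123B model needs well over 200 GB, which is why the smaller models in this list are the ones usually recommended for local use.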

Conclusion

The LLM landscape by January 2025 highlights rapid innovation, showcasing models with varied capabilities from multimodal processing to domain-specific optimizations. These advancements reflect the growing diversity in AI technologies, offering solutions for a wide range of industries and applications.

Comparison of Large Language Models (LLMs) as of January 2025

Here’s a comparative table of notable large language models (LLMs) available by January 2025, highlighting their key features, parameters, and capabilities:

| Model Name | Developer | Parameters | Multimodal Capabilities | Key Features | Use Cases |
|---|---|---|---|---|---|
| GPT-4 | OpenAI | Undisclosed | Yes | Advanced reasoning, strong performance across benchmarks | General AI applications, coding |
| Claude | Anthropic | Undisclosed | Yes (image input) | Strong conversational abilities, safety-focused | Customer support, content generation |
| Gemini | Google AI | Undisclosed | Yes | Real-time data access, strong reasoning capabilities | Research, content creation |
| LLaMA 3.1 / 3.2 | Meta | Up to 405 Billion (3.1); up to 90 Billion (3.2) | Yes (3.2 vision models) | Openly available weights, versatile for various applications | Education, marketing |
| Mistral Large 2 | Mistral AI | 123 Billion | No | High efficiency, excels in coding tasks | Software development |
| Vicuna | LMSYS | Up to 33 Billion | No | Fine-tuned for conversational context | Chatbots, personal assistants |
| OLMo 2 | Allen Institute | 7B / 13B | No | Strong in question answering and summarization | Educational tools |
| StableLM | Stability AI | Roughly 1.6B to 12B (released) | No | Open-source and versatile | Various NLP tasks |
| GLM-130B | Tsinghua University | 130 Billion | No | Strong natural language processing capabilities | Research and development |
| DeepSeek | DeepSeek | 671 Billion total (DeepSeek-V3, mixture-of-experts) | No (separate DeepSeek-VL models) | Efficient open-weight language models | General AI applications |
| Qwen | Alibaba Cloud | Up to 72 Billion (Qwen2.5) | No (separate Qwen-VL models) | Competitive performance in NLP tasks | Various applications |

This table summarizes the key aspects of each model, providing a clear overview of their capabilities and potential applications in various fields.
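A comparison like this is easier to query when held as structured data. The following is a minimal sketch, not a definitive catalog: the `ModelEntry` class and `shortlist` function are hypothetical names introduced here, and only a few rows are included. GPT-4's size is recorded as `None` because OpenAI has not published it.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelEntry:
    name: str
    developer: str
    params_b: Optional[float]  # largest listed size in billions; None if undisclosed
    multimodal: bool


# A small, illustrative subset of the comparison table.
CATALOG = [
    ModelEntry("GPT-4", "OpenAI", None, True),
    ModelEntry("Mistral Large 2", "Mistral", 123, False),
    ModelEntry("OLMo 2", "Allen Institute", 13, False),
    ModelEntry("GLM-130B", "Tsinghua University", 130, False),
]


def shortlist(
    catalog: List[ModelEntry],
    max_params_b: Optional[float] = None,
    need_multimodal: bool = False,
) -> List[str]:
    """Return the names of models that satisfy the given constraints."""
    result = []
    for m in catalog:
        if need_multimodal and not m.multimodal:
            continue
        if max_params_b is not None:
            # An undisclosed size cannot be checked against a budget, so skip it.
            if m.params_b is None or m.params_b > max_params_b:
                continue
        result.append(m.name)
    return result


if __name__ == "__main__":
    print(shortlist(CATALOG, max_params_b=125))     # ['Mistral Large 2', 'OLMo 2']
    print(shortlist(CATALOG, need_multimodal=True))  # ['GPT-4']
```

Treating an undisclosed size as "cannot satisfy a size budget" is a deliberate design choice in this sketch; a different application might prefer to keep such models and flag them instead.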

Insights:

- Multimodal Capabilities: Models like GPT-4 and Gemini excel with text and image processing, making them versatile for creative and analytical tasks.

- Open-Source Advantages: LLaMA, StableLM, and Gemma offer accessibility for developers to build custom applications.

- Coding Specialization: Phi-1 (Microsoft) and Claude (Anthropic) stand out in programming-related tasks.

- Efficiency Models: Mistral Large 2 and StableLM provide options for computational efficiency and long-context tasks.

- Multilingual Excellence: Cohere and StableLM are strong contenders for multilingual NLP tasks.

Taken together, these models reflect the diversity and rapid advancement of LLMs, serving a broad range of industries and applications as of January 2025.

I’m a DevOps/SRE/DevSecOps/Cloud Expert passionate about sharing knowledge and experiences. I work at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I reviewed, and SEO strategies at Wizbrand.

