What is Apache Kafka?

Introduction:

Apache Kafka is an open-source distributed event streaming platform, originally developed by LinkedIn and later donated to the Apache Software Foundation. It has become one of the most popular tools for building real-time data pipelines and streaming applications. Kafka is designed to handle a continuous flow of data, making it ideal for scenarios that require the processing of real-time data streams.

Understanding Event Streaming:

At its core, Kafka enables the creation and handling of event streams. An event can be anything that happens within your system, such as a user logging into an application, a new transaction being recorded, or sensor data being generated. Kafka allows you to capture these events, store them in a durable and fault-tolerant manner, and process them in real-time or at a later time.

Key Concepts:

• Producer: An application that sends data (messages) to Kafka. A producer can publish data to one or more Kafka topics.

• Consumer: An application that reads data from Kafka. Consumers subscribe to topics and process the data in real-time.

• Topic: A category or feed name to which records are sent by producers. Topics in Kafka are partitioned and replicated to provide scalability and fault tolerance.

• Broker: A Kafka server that stores data and serves it to consumers. Multiple brokers work together to form a Kafka cluster.

• Partition: A topic can be divided into partitions to allow parallel processing by multiple consumers. Each partition is an ordered sequence of records, and Kafka ensures that all records within a partition are delivered in order.

Use Cases:

Kafka is widely used for:

• Real-time Analytics: Processing data in real-time to generate insights.

• Log Aggregation: Collecting logs from various sources and processing them.

• Event Sourcing: Capturing state changes as events to rebuild the state of an application.

• Data Integration: Moving data between systems in real-time.

Advantages:

• High Throughput: Kafka can handle large volumes of data with low latency.

• Scalability: Kafka’s distributed architecture allows it to scale horizontally.

• Durability: Data is written to disk and replicated across brokers, ensuring persistence.

• Fault Tolerance: Kafka automatically handles broker failures and ensures data availability.

Conclusion:

Apache Kafka is a powerful tool for building modern data architectures that require real-time data processing and integration. It provides the foundation for creating scalable, fault-tolerant, and durable data pipelines that can handle massive amounts of data efficiently.

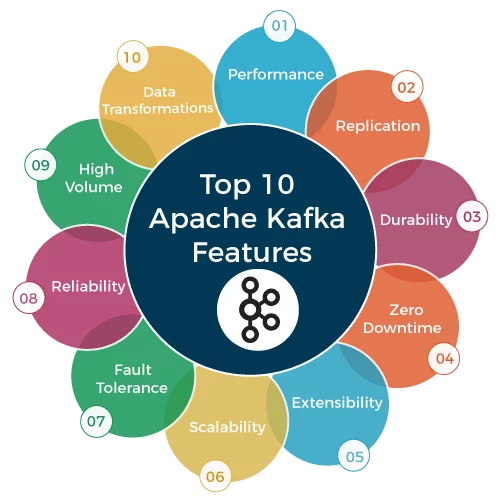

Features of Apache Kafka

Apache Kafka offers a rich set of features that make it an ideal choice for building real-time data streaming and processing systems. Below is a detailed exploration of its key features:

- Distributed Architecture:

Kafka is inherently distributed: it runs across multiple servers (brokers) that work together to form a cluster. This architecture allows Kafka to handle large volumes of data and provides fault tolerance. It also means Kafka can scale horizontally by adding more brokers to the cluster.

- High Throughput:

Kafka is designed for high throughput, and suitably sized clusters can handle millions of messages per second. It achieves this by writing data to disk sequentially and by using I/O techniques such as zero-copy transfer. This makes Kafka suitable for applications with heavy data flow requirements, such as log aggregation, real-time analytics, and event sourcing.

- Scalability:

Kafka’s architecture allows it to scale both in terms of data ingestion and data processing. Topics in Kafka are divided into partitions, and each partition can be processed independently. This partitioning enables parallel processing by multiple consumers, which can be scaled out across different machines. Additionally, Kafka clusters can scale horizontally by adding more brokers.

- Fault Tolerance:

Kafka ensures fault tolerance by replicating data across multiple brokers. Each partition in a Kafka topic has one leader replica and, depending on the replication factor, one or more follower replicas. By default the leader handles all reads and writes for the partition, while the followers copy its data. If the leader’s broker fails, an in-sync follower is automatically promoted to leader, so the partition stays available. Records that were acknowledged by all in-sync replicas (producer setting acks=all) survive such a failover.

- Durability:

Kafka provides durability through its log-based storage mechanism. Data in Kafka is written to disk and replicated across multiple brokers, so the failure of a single broker does not lose data that has already been replicated. Kafka allows users to configure the retention period for data, meaning data can be stored for as long as needed. This makes Kafka a reliable choice for systems that require long-term storage of data.

- Real-Time Stream Processing:

Kafka is not just a message broker; it also supports real-time stream processing. Through integrations with stream processing frameworks like Apache Storm and Apache Flink, or with Kafka Streams (a stream processing library that ships with Kafka), you can build complex data processing pipelines that operate on the data as it flows through Kafka.

- Log Compaction:

Kafka supports log compaction, a feature that retains at least the latest value for each key in the log and discards older values for that key. This is particularly useful in scenarios where the latest state of an entity needs to be maintained, such as in event sourcing or change data capture systems.

- Exactly-Once Semantics:

Kafka provides exactly-once semantics (EOS) for stream processing, built on idempotent producers and transactions. With EOS enabled, the results of processing each record are written to Kafka exactly once even if a failure forces a retry, so no output is lost or duplicated. Side effects outside Kafka, such as writes to an external database, are not covered automatically. This is crucial for applications that require high accuracy, such as financial systems.

- Flexible and Extensible:

Kafka is highly flexible and can be extended to meet various use cases. It supports custom serializers and deserializers, allowing you to work with any data format. Additionally, Kafka Connect provides a framework for integrating Kafka with external systems like databases, key-value stores, and data lakes.

- Ecosystem and Community:

Kafka has a large and active community, with a rich ecosystem of tools and libraries. The Kafka ecosystem includes tools for monitoring, managing, and integrating Kafka with other systems, such as Kafka Connect for data integration and Confluent Platform for enterprise-grade features.
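To make the log compaction feature described above more concrete, here is a minimal sketch in plain shell. It is only a model of the idea, not how Kafka implements it: the function name compact_log and the "key=value" line format are made up for illustration.

```shell
#!/usr/bin/env bash
# Simplified model of log compaction: keep only the newest record per key.
# Input: one record per line in the hypothetical form "key=value", oldest first.
# Output: the surviving records in their original relative order.
compact_log() {
  awk -F'=' '
    { last[$1] = NR; line[NR] = $0 }   # remember the last line number seen for each key
    END {
      for (i = 1; i <= NR; i++) {
        split(line[i], kv, "=")
        if (last[kv[1]] == i) print line[i]   # emit a record only if it is the latest for its key
      }
    }'
}

# Three records, two of them for the same key.
printf '%s\n' \
  'user1=alice@old.example' \
  'user2=bob@example.com' \
  'user1=alice@new.example' | compact_log
```

Running the sketch prints the user2 record followed by the newer user1 record. In a real cluster, compaction runs in the background on older log segments, so older values for a key can remain visible for a while before they are cleaned up.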

Apache Kafka Terminology

To effectively work with Apache Kafka, it’s important to understand the key terminologies used within the platform. Here is a comprehensive guide to Kafka’s terminology:

- Topic:

A topic in Kafka is a category or feed name to which records (messages) are published. Topics are logical channels that allow producers to write data and consumers to read it. Each topic can have multiple partitions.

- Partition:

A partition is a division of a Kafka topic. Partitions allow topics to be split into multiple segments, enabling parallel processing. Each partition is an ordered, immutable sequence of records that is continually appended to. Kafka guarantees the order of records within a partition.

- Producer:

A producer is an application that sends records to a Kafka topic. Producers can publish data to one or more topics and can choose to send data to specific partitions within a topic based on custom logic or let Kafka handle the distribution automatically.

- Consumer:

A consumer is an application that reads records from Kafka topics. Consumers subscribe to one or more topics and process the data in real-time. Consumers usually belong to a consumer group, which allows the partitions of a topic to be distributed across multiple consumers for parallel processing.

- Consumer Group:

A consumer group is a group of consumers that work together to process records from one or more topics. Each partition in a topic is assigned to only one consumer within a consumer group, so each record is handled by a single member of the group rather than by all of them. This is not an exactly-once guarantee: after a failure or rebalance, records whose offsets were not yet committed may be delivered again. If a consumer fails, the partitions it was processing are reassigned to other consumers in the group.

- Broker:

A broker is a Kafka server that stores data and serves client requests. A Kafka cluster is made up of multiple brokers, each of which can handle data for multiple partitions. Brokers coordinate with each other to ensure data replication and fault tolerance.

- Cluster:

A Kafka cluster is a group of brokers working together to manage data streams. The cluster is responsible for distributing data across brokers, managing data replication, and ensuring fault tolerance.

- Leader and Follower:

In Kafka, each partition has a designated leader broker and, when the replication factor is greater than one, one or more follower brokers. By default the leader handles all read and write requests for the partition, while followers replicate the data. If the leader fails, one of the in-sync followers is automatically promoted to leader.

- Replica:

A replica is a copy of a partition’s data that is stored on a different broker. Replicas are used to provide fault tolerance and ensure that data is not lost in the event of a broker failure.

- Zookeeper:

Apache Zookeeper is a coordination service that Zookeeper-based Kafka deployments use to manage the state of the cluster. It is responsible for controller election, configuration management, and maintaining metadata about the brokers and topics. Newer Kafka releases can instead run in KRaft mode, where the brokers manage this metadata themselves, and Kafka 4.0 removed the Zookeeper dependency.

- Log:

In Kafka, a log is an ordered, append-only sequence of records. Each partition in a topic is represented as a log, where new records are appended to the end. Kafka’s log-based storage provides durability and guarantees the order of records within a partition.

- Offset:

An offset is a sequential number that uniquely identifies each record within a partition. Kafka assigns an incremental offset to each record as it is added to a partition. Consumers use offsets to keep track of the records they have already processed.

- Retention:

Kafka topics can be configured with a retention policy that determines how long records are kept before they are deleted. Retention can be based on time (e.g., keep records

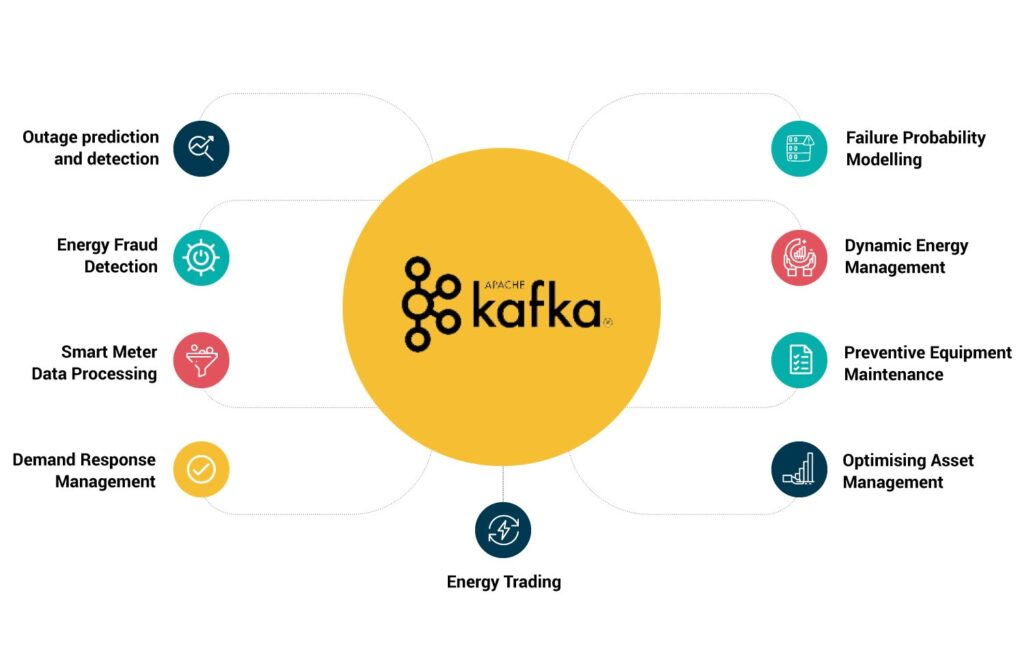

Top 10 Use Cases of Apache Kafka

Apache Kafka is a versatile platform that supports a wide range of use cases across various industries. Here are the top 10 use cases where Kafka shines:

Real-Time Data Streaming and Analytics:

Kafka is widely used for real-time data streaming and analytics. Organizations use Kafka to collect, process, and analyze data as it is generated. This enables businesses to make quick, data-driven decisions. For example, in financial services, Kafka can stream stock prices, trading volumes, and other market data in real-time for instant analysis and decision-making.

Log Aggregation:

Kafka is often used to collect and aggregate log data from multiple sources, such as servers, applications, and services. By streaming logs to Kafka, organizations can centralize their log data and make it available for monitoring, searching, and analysis. Tools like Elasticsearch and Splunk can be used alongside Kafka to provide powerful log analysis capabilities.

Event Sourcing:

Event sourcing is a design pattern where state changes in an application are stored as a sequence of events. Kafka is an excellent choice for event sourcing because it can store and replay events in the exact order they occurred. This allows applications to rebuild their state by processing the events in sequence. Event sourcing is particularly useful in scenarios where auditability and historical data reconstruction are critical, such as in financial applications.

Data Integration and ETL Pipelines:

Kafka acts as a real-time data pipeline that can integrate data from various sources and transform it as it flows through the system. By using Kafka Connect, organizations can easily connect Kafka with databases, data warehouses, and other systems. Kafka can be used for Extract, Transform, Load (ETL) processes, enabling the movement and transformation of data in real-time without the need for batch processing.

Microservices Communication:

In a microservices architecture, services often need to communicate with each other in a reliable and scalable manner. Kafka provides a robust messaging backbone that enables microservices to exchange data asynchronously. By decoupling services through Kafka, organizations can build scalable, resilient microservices architectures where services can independently evolve and scale.

Internet of Things (IoT):

Kafka is well-suited for IoT use cases, where data from sensors and devices needs to be collected, processed, and analyzed in real-time. Kafka can handle the high volume of data generated by IoT devices and provide a scalable platform for processing and analyzing this data. For example, Kafka can be used to collect data from connected vehicles, smart meters, or industrial machinery and stream it to analytics platforms for real-time insights.

Stream Processing:

Kafka is often used as a backbone for stream processing applications, where data streams need to be processed in real-time. Kafka Streams, a native stream processing library within Kafka, allows developers to build complex stream processing applications directly on top of Kafka. This is useful for applications such as fraud detection, real-time monitoring, and anomaly detection.

Metrics Collection and Monitoring:

Organizations use Kafka to collect and aggregate metrics from various systems, such as servers, applications, and network devices. Kafka can handle the high volume of metrics data and make it available for real-time monitoring and alerting. Tools like Prometheus, Grafana, and Elasticsearch can be integrated with Kafka to provide powerful monitoring and visualization capabilities.

Data Lakes and Data Warehousing:

Kafka is often used to feed data lakes and data warehouses with real-time data streams. By streaming data into a data lake or warehouse, organizations can ensure that their data is always up-to-date and available for analysis. Kafka Connect provides out-of-the-box connectors for popular data lakes and warehouses, making it easy to integrate Kafka with existing data infrastructure.

Multi-Cloud and Hybrid Cloud Data Integration:

Kafka is increasingly used to integrate data across multi-cloud and hybrid cloud environments. Organizations that operate in multiple cloud environments or have a combination of on-premises and cloud infrastructure use Kafka to stream data between different environments in real-time. This enables seamless data integration and movement across different platforms.

How Apache Kafka Works and Its Architecture

Introduction:

Apache Kafka is designed to provide a unified, high-throughput, low-latency platform for handling real-time data feeds. Understanding how Kafka works and its architecture is crucial for effectively implementing it in your organization. Kafka’s architecture is based on a distributed system of brokers, producers, consumers, topics, and partitions.

Kafka Components and Architecture:

- Topics:

Kafka organizes data into topics. A topic is a logical channel to which producers write data and from which consumers read data. Topics can be thought of as a feed name or category to which messages are published. Kafka topics are split into partitions, which allow Kafka to scale horizontally.

- Partitions:

Each Kafka topic is divided into partitions, which are ordered, immutable sequences of records (messages). Partitions are a key concept in Kafka’s scalability and fault tolerance. Each partition can reside on a different broker in the Kafka cluster, enabling parallel processing by multiple consumers.

- Brokers:

A Kafka broker is a server that stores data and serves client requests. Kafka brokers form a Kafka cluster. Each broker in the cluster is responsible for a subset of partitions. Kafka brokers work together to manage the storage and retrieval of data, replication, and fault tolerance.

- Producers:

Producers are client applications that publish (write) data to Kafka topics. Producers can send data to specific partitions within a topic or allow Kafka to automatically distribute the data across partitions. Producers are designed to be scalable and can handle high throughput.

- Consumers:

Consumers are client applications that subscribe to Kafka topics and process the data in real-time. Consumers can belong to a consumer group, where each partition is assigned to only one consumer within the group. This lets different consumers process different partitions in parallel.

- Consumer Groups:

Consumer groups allow Kafka to distribute the processing of data across multiple consumers. Each consumer in a group processes data from a unique set of partitions. If a consumer fails, Kafka automatically redistributes the partitions to the remaining consumers in the group. This ensures fault tolerance and load balancing.

- Zookeeper:

Apache Zookeeper is a distributed coordination service that Zookeeper-based Kafka deployments use to manage the state of the Kafka cluster. Zookeeper is responsible for maintaining metadata about brokers, topics, and partitions. It also supports controller election and coordinates tasks like broker registration and configuration management. Newer Kafka releases can instead run in KRaft mode, where the brokers manage this metadata themselves, and Kafka 4.0 removed the Zookeeper dependency.

- Kafka Log:

Kafka uses a log-based storage mechanism where each partition is an ordered, append-only sequence of records. Producers append new records to the end of the log, and consumers read records in order. Kafka’s log storage provides durability and preserves the order of records within each partition.

- Replication:

Kafka provides fault tolerance through replication. Each partition has a designated leader broker and, when the replication factor is greater than one, one or more follower brokers that replicate the data. By default the leader handles all read and write requests for the partition, while the followers act as backups. If the leader fails, one of the in-sync followers is automatically promoted to leader.

- Offsets:

Kafka assigns a unique offset to each record within a partition. An offset is a sequential number that represents the position of a record in the partition. Consumers use offsets to keep track of which records they have already processed. Kafka allows consumers to commit their offsets, ensuring that they can resume processing from the correct position in case of a failure.
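The way offsets let a consumer resume after a restart can be illustrated with a toy model in shell. This is a sketch under simplifying assumptions, not Kafka code: a plain file stands in for one partition, a second file stands in for the committed offset, and the file names and the consume function are hypothetical. In a real cluster, committed offsets are stored in Kafka itself, in the internal __consumer_offsets topic.

```shell
#!/usr/bin/env bash
# Toy model of one partition: line N of the log file holds the record at offset N-1.
# All file names are hypothetical and exist only for this illustration.
workdir="$(mktemp -d)"
log_file="$workdir/partition-0.log"
offset_file="$workdir/committed-offset"

printf '%s\n' 'login' 'purchase' 'logout' > "$log_file"
echo 0 > "$offset_file"   # nothing consumed yet

# Read up to $1 records starting at the committed offset, then commit the new position.
consume() {
  local max="$1" committed next end
  committed="$(cat "$offset_file")"
  # tail -n +K starts at line K, so offset 0 maps to line 1.
  tail -n "+$((committed + 1))" "$log_file" | head -n "$max"
  next=$((committed + max))
  end=$(( $(wc -l < "$log_file") ))
  if [ "$next" -gt "$end" ]; then next="$end"; fi   # never commit past the end of the log
  echo "$next" > "$offset_file"
}

consume 2   # reads offsets 0 and 1, then commits offset 2
consume 2   # resumes at offset 2, reads the last record, then commits offset 3
```

The committed value is the offset of the next record to read, which is also the convention Kafka uses. Because the position lives outside the consumer process, a restarted consumer picks up where the previous one left off.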

How Kafka Handles Data Flow:

1. Data Ingestion: Producers publish records to Kafka topics. The records are distributed across partitions within the topic based on a partitioning strategy.

2. Data Storage: The records are stored in partitions on the brokers. Kafka ensures that data is replicated across multiple brokers to provide fault tolerance.

3. Data Consumption: Consumers subscribe to topics and read records from the partitions. Kafka tracks the offsets to ensure that records are consumed in order.

Kafka’s Distributed Nature:

Kafka’s distributed architecture allows it to scale horizontally by adding more brokers to the cluster. The partitioning of topics enables parallel processing, while replication ensures fault tolerance and data durability. Zookeeper manages the coordination and state of the cluster, allowing Kafka to handle large volumes of data with high reliability.
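The partitioning strategy mentioned under Data Ingestion can be pictured as hashing the record key and taking the result modulo the number of partitions. The following is a minimal sketch of that idea, not Kafka's implementation: the function name partition_for_key is made up for illustration, and cksum stands in for the murmur2 hash that Kafka's default partitioner applies to keyed records.

```shell
#!/usr/bin/env bash
# Map a record key to a partition: hash(key) mod partition_count.
# cksum (a CRC) is used only because it is widely available;
# Kafka's own default partitioner uses a murmur2 hash of the key bytes.
partition_for_key() {
  local key="$1" partitions="$2" hash
  hash="$(printf '%s' "$key" | cksum | cut -d' ' -f1)"
  echo $(( hash % partitions ))
}

# The same key always maps to the same partition of a 3-partition topic.
for key in user-1 user-2 user-3 user-1; do
  echo "$key -> partition $(partition_for_key "$key" 3)"
done
```

Because the mapping depends only on the key and the partition count, all records with the same key land in the same partition and keep their relative order. Changing the number of partitions changes the mapping, which is one reason partition counts are usually chosen with some care up front.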

How to Install Apache Kafka on Linux

# Step 1: Update the package index and install Java

sudo apt-get update

sudo apt-get install default-jdk -y

# Verify Java installation

java -version

# Step 2: Download Apache Kafka

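# Older releases such as 3.0.0 are served from the Apache archive rather than downloads.apache.org.

wget https://archive.apache.org/dist/kafka/3.0.0/kafka_2.13-3.0.0.tgz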

# Step 3: Extract the downloaded Kafka package

tar -xzf kafka_2.13-3.0.0.tgz

cd kafka_2.13-3.0.0

# Step 4: Start Zookeeper

# Kafka uses Zookeeper to manage the cluster, so we need to start it first.

bin/zookeeper-server-start.sh config/zookeeper.properties &

# Step 5: Start Kafka Broker

bin/kafka-server-start.sh config/server.properties &

# Step 6: Create a Kafka Topic

# This command creates a topic named 'test-topic' with a replication factor of 1 and 1 partition.

bin/kafka-topics.sh --create --topic test-topic --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1

# Step 7: List Kafka Topics

# Verify that the 'test-topic' has been created.

bin/kafka-topics.sh --list --bootstrap-server localhost:9092

# Step 8: Send Messages to the Topic

# Open a producer console to start sending messages to the 'test-topic'.

bin/kafka-console-producer.sh --topic test-topic --bootstrap-server localhost:9092

# Type some messages in the producer console (each message on a new line) and press Enter.

# Step 9: Consume Messages from the Topic

# Open a consumer console to start reading messages from the 'test-topic'.

bin/kafka-console-consumer.sh --topic test-topic --bootstrap-server localhost:9092 --from-beginning

# Step 10: Stop Kafka Broker and Zookeeper

# To stop the Kafka broker and Zookeeper after use, run the following commands.

bin/kafka-server-stop.sh

bin/zookeeper-server-stop.sh

Explanation:

1. Java Installation: Java is required to run Kafka, so it’s installed first.

2. Downloading Kafka: The Kafka binary is downloaded from the official Apache website and extracted.

3. Starting Zookeeper and Kafka: Zookeeper is started first, followed by the Kafka broker.

4. Creating and Listing Topics: A Kafka topic is created and verified by listing all topics.

5. Producing and Consuming Messages: Messages are produced to the topic and then consumed using the Kafka console producer and consumer.

6. Stopping Services: Finally, the Kafka broker and Zookeeper are stopped.
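Once the steps above work, a few follow-up commands help connect the installation to the concepts of partitions, replicas, and consumer groups. The sketch below wraps them in small shell functions. It assumes the current directory is the extracted Kafka folder and that the broker listens on localhost:9092; the function names, the run helper, the DRY_RUN switch, and the group name demo-group are all made up for this example.

```shell
#!/usr/bin/env bash
# Follow-up commands for the 'test-topic' created in the steps above.
# DRY_RUN=1 (the default in this sketch) only prints each command;
# set DRY_RUN=0 to execute them against a running broker.
BOOTSTRAP="localhost:9092"
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Show partition count, leader, replicas, and in-sync replicas for a topic.
describe_topic() {
  run bin/kafka-topics.sh --describe --topic "$1" --bootstrap-server "$BOOTSTRAP"
}

# Read a topic as a member of a named consumer group.
consume_in_group() {
  run bin/kafka-console-consumer.sh --topic "$1" --group "$2" --bootstrap-server "$BOOTSTRAP"
}

# Show the committed offset and lag per partition for a consumer group.
describe_group() {
  run bin/kafka-consumer-groups.sh --describe --group "$1" --bootstrap-server "$BOOTSTRAP"
}

describe_topic test-topic
consume_in_group test-topic demo-group
describe_group demo-group
```

With DRY_RUN=0, the console consumer keeps running until it is interrupted with Ctrl+C, so in practice it is easier to run it in a separate terminal and call describe_group from another one.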
