K3s is a lightweight, open-source Kubernetes distribution created by Rancher Labs. It is a CNCF-certified Kubernetes distribution, it supports highly available deployments, and it is intended for production use. It ships as a single small binary with low resource requirements.

K3s is designed to be easy to install and operate in resource-constrained environments, and it is optimized for edge computing and IoT (Internet of Things) scenarios.

K3s packages upstream Kubernetes, so it provides the same core capabilities, such as automatic scaling, self-healing, and automatic rollouts and rollbacks. However, it is designed to be more resource-efficient and to have a smaller footprint than traditional Kubernetes distributions. This makes it well suited to small devices such as the Raspberry Pi, and to sites with limited infrastructure such as remote or branch offices.

K3s also bundles several components and defaults that a vanilla Kubernetes installation leaves you to set up yourself, such as:

- An embedded SQLite datastore, used by default for cluster state on a single server, which eliminates the need for a separate etcd cluster

- A built-in service load balancer (ServiceLB) and a bundled Traefik ingress controller, which eliminate the need to install either one separately

- A bundled CoreDNS deployment, so the standard Kubernetes service discovery and in-cluster load balancing work out of the box without a separate service discovery solution
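The datastore behind a K3s server is chosen when the server starts. The sketch below is a hypothetical helper, `k3s_server_cmd`, that only prints the command it would run, so it is safe to try on a machine without K3s. `--cluster-init` and `--datastore-endpoint` are K3s server flags; the function name, the mode names, and the example connection string are assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical helper: prints (does not run) a k3s server command
# for the chosen datastore mode.
k3s_server_cmd() {
    mode="$1"
    endpoint="${2:-}"
    case "$mode" in
        sqlite)
            # Default: a single server using the embedded SQLite datastore.
            echo "k3s server"
            ;;
        etcd)
            # Embedded etcd, initialised on the first server of a cluster.
            echo "k3s server --cluster-init"
            ;;
        external)
            # External database; the endpoint must be supplied by the caller.
            if [ -z "$endpoint" ]; then
                echo "external mode needs a datastore endpoint" >&2
                return 1
            fi
            echo "k3s server --datastore-endpoint=$endpoint"
            ;;
        *)
            echo "unknown mode: $mode" >&2
            return 1
            ;;
    esac
}

k3s_server_cmd sqlite
k3s_server_cmd etcd
# Placeholder connection string, not a real database.
k3s_server_cmd external "postgres://user:pass@db.example.com:5432/k3s"
```

Printing the command rather than executing it keeps the choice visible and reviewable before anything touches the node.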

K3s was developed by Rancher Labs and is available under the Apache 2.0 license. It can be used to deploy and manage containerized applications on edge devices, in remote locations, and on IoT devices, which makes it a good fit for organizations that need to run Kubernetes where resources are limited.

Does K3s run the Kubernetes components in containers or on the node?

K3s runs the core Kubernetes components directly on the node, not in containers. The API server, controller manager, scheduler, kubelet, and kube-proxy are all bundled into the single k3s binary and run together as one process. This is a large part of why K3s has low resource overhead: there is no separate container or process for each control-plane component.

What does run in containers are the packaged add-ons, such as CoreDNS, the Traefik ingress controller, the ServiceLB load balancer, the local-path storage provisioner, and metrics-server. K3s deploys these as ordinary pods through its embedded containerd runtime.

K3s has no flag that moves a core component into or out of a container. Instead, the role a node plays depends on which subcommand you start.

For example, to run the control plane on a node (together with a kubelet, by default), you would run the following command:

$ k3s server

To join a worker node that runs only the kubelet, kube-proxy, and container runtime, you would run the following command:

$ k3s agent --server https://<server-ip>:6443 --token <node-token>

You can also skip packaged add-ons you do not want, or pass extra arguments through to an embedded component.

For example, to leave out the bundled Traefik ingress controller and set an API server argument, you would run the following command:

$ k3s server --disable traefik --kube-apiserver-arg <key>=<value>

Which option should you choose?

The best way to run K3s depends on your specific needs and requirements. If you want the simplest and most efficient setup, keep the defaults. If you need a different ingress controller or load balancer, disable the packaged one and deploy your own. If you need more control over the configuration of a Kubernetes component, pass arguments through with flags such as --kube-apiserver-arg. If you need a specific Kubernetes version, install the K3s release built on that version, because the component versions are fixed by the k3s binary.
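K3s can also read its server options from a configuration file whose keys mirror the command-line flags, which is often easier to review than a long command line. The following is a minimal sketch, assuming you want to skip the packaged Traefik and ServiceLB add-ons and pass one argument to the embedded API server. It writes to a scratch directory so it is safe to run anywhere; on a real server the file normally lives at `/etc/rancher/k3s/config.yaml`. The `CONFIG_DIR` variable and the chosen API server argument are illustrative assumptions.

```shell
#!/bin/sh
set -eu

# Assumption: write into a scratch directory so the sketch is safe to run.
# On a real server the file would be /etc/rancher/k3s/config.yaml.
CONFIG_DIR="${CONFIG_DIR:-$(mktemp -d)}"
CONFIG_FILE="$CONFIG_DIR/config.yaml"

cat > "$CONFIG_FILE" <<'EOF'
# Skip two of the packaged add-ons (equivalent to repeating --disable)
disable:
  - traefik
  - servicelb
# Extra argument passed through to the embedded kube-apiserver
# (illustrative value; equivalent to --kube-apiserver-arg)
kube-apiserver-arg:
  - "audit-log-maxage=30"
EOF

echo "wrote $CONFIG_FILE"
cat "$CONFIG_FILE"
```

Keeping these settings in a file means the same choices apply every time the service restarts, without editing the service's command line.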

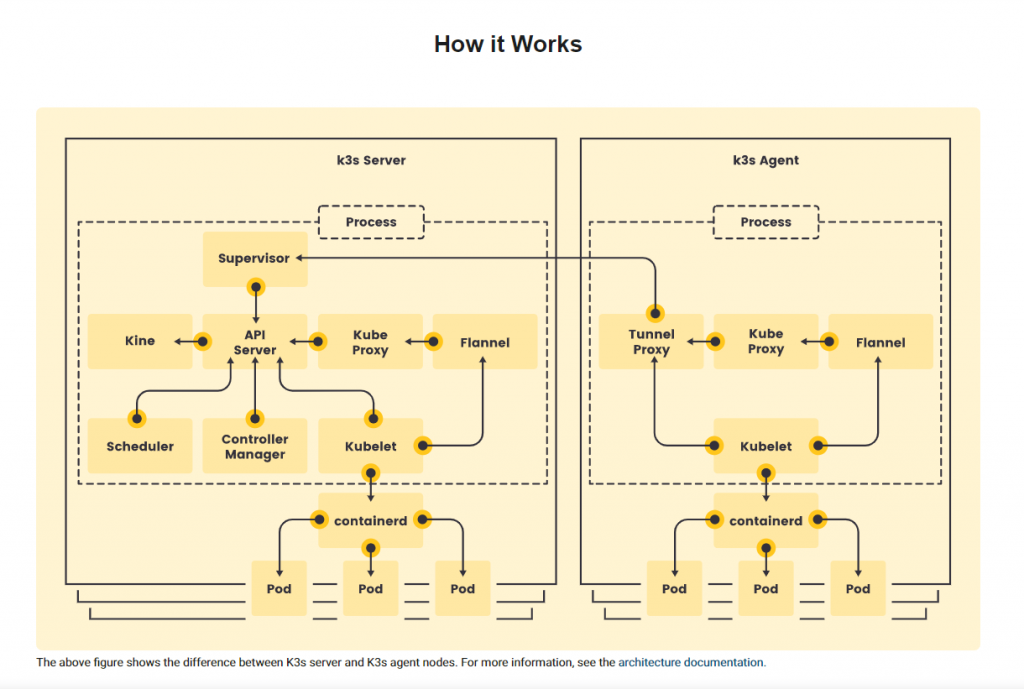

How K3s works

10 use cases of K3s

K3s is a lightweight Kubernetes distribution that is easy to install and manage. It is designed for resource-constrained environments, such as edge computing and IoT devices.

Here are 10 use cases of K3s:

- Edge computing: K3s can be used to run Kubernetes applications at the edge. This can be useful for applications that need to be low-latency and highly available, such as real-time analytics and machine learning applications.

- IoT devices: K3s can be used to run Kubernetes applications on IoT devices. This can be useful for applications that need to collect and process data from IoT devices, such as smart home and industrial automation applications.

- Development and testing: K3s can be used to run Kubernetes development and testing environments. This can be useful for developers to test their applications on a Kubernetes cluster before deploying them to production.

- Continuous integration and continuous delivery (CI/CD): K3s can be used to run Kubernetes CI/CD pipelines. This can be useful for automating the building, testing, and deployment of Kubernetes applications.

- Bare-metal servers: K3s can be used to run Kubernetes on bare-metal servers. This can be useful for organizations that want to have more control over their Kubernetes environment.

- Virtual machines: K3s can be used to run Kubernetes on virtual machines. This can be useful for organizations that want to run Kubernetes in a cloud environment.

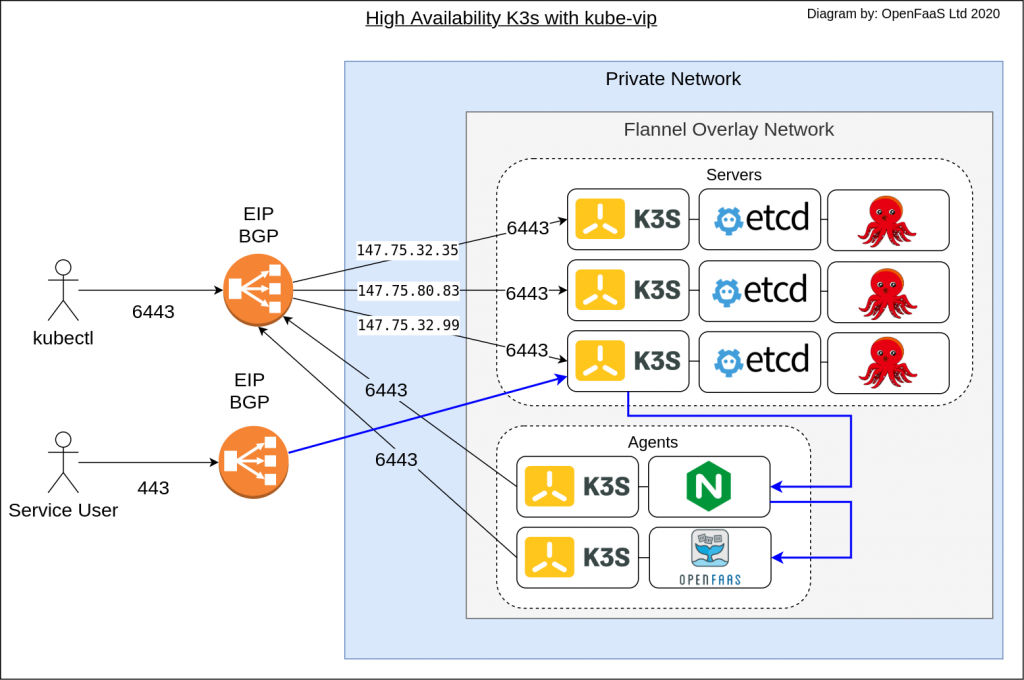

- High-availability clusters: K3s can be used to run Kubernetes high-availability clusters. This can be useful for organizations that need to ensure that their Kubernetes applications are always available.

- Disaster recovery: K3s can be used to run Kubernetes disaster recovery clusters. This can be useful for organizations that need to be able to quickly recover their Kubernetes applications in the event of a disaster.

- Air-gapped environments: K3s can be used to run Kubernetes in air-gapped environments. This can be useful for organizations that need to run Kubernetes in environments that are not connected to the internet.

- Education and research: K3s can be used to run Kubernetes for education and research purposes. This can be useful for students and researchers to learn about and experiment with Kubernetes.
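For the air-gapped case in the list above, the usual approach is to copy the K3s binary and the image tarball onto the host ahead of time, then run the install script with downloading turned off. The sketch below stages placeholder files under a scratch root so that it can run without touching a real system. The `stage_airgap` function, the scratch paths, and the tarball file name are assumptions for illustration; check the file names against the release assets you actually download.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: stage_airgap ROOT BINARY IMAGES_TARBALL
# Copies the files to the locations K3s expects, relative to ROOT.
stage_airgap() {
    root="$1"
    binary="$2"
    images="$3"
    mkdir -p "$root/usr/local/bin" "$root/var/lib/rancher/k3s/agent/images"
    cp "$binary" "$root/usr/local/bin/k3s"
    chmod +x "$root/usr/local/bin/k3s"
    cp "$images" "$root/var/lib/rancher/k3s/agent/images/"
}

# Demo with placeholder files; real files come from a K3s release download.
work="$(mktemp -d)"
printf 'placeholder binary\n' > "$work/k3s"
printf 'placeholder images\n' > "$work/k3s-airgap-images-amd64.tar"

stage_airgap "$work/root" "$work/k3s" "$work/k3s-airgap-images-amd64.tar"

# Show what was staged.
find "$work/root" -type f | sort

# On the real host (with ROOT set to /), the install script is then run offline:
echo "next: INSTALL_K3S_SKIP_DOWNLOAD=true ./install.sh"
```

Passing a root prefix lets you rehearse the layout in a temporary directory before repeating it against `/` on the disconnected host.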

I’m Rajesh Kumar, a DevOps/SRE/DevSecOps/Cloud expert passionate about sharing knowledge and experiences. I work at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I reviewed, and SEO strategies at Wizbrand.
