History & Origin of TensorFlow

TensorFlow was developed by the Google Brain team for internal Google use in research and production. The initial version was released under the Apache License 2.0 in 2015. Google released the updated version of TensorFlow, named TensorFlow 2.0, in September 2019.

TensorFlow is an open source framework developed by Google researchers to run machine learning, deep learning and other statistical and predictive analytics workloads. Like similar platforms, it’s designed to streamline the process of developing and executing advanced analytics applications for users such as data scientists, statisticians and predictive modelers.

TensorFlow represents computations as a graph. Each node in the graph is an operation, and the edges that connect the nodes carry multidimensional arrays (vectors, matrices and higher-dimensional arrays) known as tensors. Because TensorFlow programs use a dataflow architecture that works with generalized intermediate results of the computations, they are well suited to very large-scale parallel processing, with neural networks being a common example.

The framework includes sets of both high-level and low-level APIs. Google recommends using the high-level ones when possible to simplify data pipeline development and application programming. However, knowing how to use the low-level APIs — called TensorFlow Core — can be valuable for experimentation and debugging of applications, the company says; it also gives users a “mental model” of the machine learning technology’s inner workings, in Google’s words.

TensorFlow applications can run on conventional CPUs, on higher-performance graphics processing units (GPUs) and on Google’s own tensor processing units (TPUs), which are custom devices expressly designed to speed up TensorFlow jobs. Google’s first TPUs, detailed publicly in 2016, were used internally in conjunction with TensorFlow to power some of the company’s applications and online services, including its RankBrain search algorithm and Street View mapping technology.

In early 2018, Google furthered its external TensorFlow efforts by making the second generation of TPUs available to Google Cloud Platform users for training and running their own machine learning models. Cloud TPU usage is billed on a per-second basis; the service initially launched as a beta program with only “limited quantities” of the devices available for use, according to Google.

TensorFlow origins and releases

TensorFlow followed in the footsteps of a closed-source Google framework called DistBelief, which the company used internally to carry out unsupervised feature learning and deep learning applications based on extremely large neural networks and the backpropagation algorithm.

DistBelief, which Google first disclosed in detail in 2012, was a testbed for implementations of deep learning that included advanced image and speech recognition, natural language processing, recommendation engines and predictive analytics.

TensorFlow differs from DistBelief in a number of ways. Because TensorFlow was designed to be able to work separately from Google’s own computing infrastructure, its code was more easily portable for outside uses. It’s also a more general machine learning framework that isn’t as tightly focused on neural networks as DistBelief was. Moreover, it’s designed to support faster configuration and to run against the high-level APIs.

Google released TensorFlow as an open source technology in 2015 under an Apache 2.0 license. Since then, the framework has gained a variety of adherents beyond Google. For example, TensorFlow tooling is supported as add-on modules to machine learning and AI development suites from IBM, Microsoft and others.

In early 2017, TensorFlow reached Release 1.0.0 status. That version added a specialized debugger, a domain-specific compiler for TensorFlow graphs, Docker container images for version 3 of the Python programming language and an experimental Java API. Four more releases followed during the course of 2017; a TensorFlow Lite version optimized for use on mobile and embedded devices was also introduced as a developer preview. As of February 2018, TensorFlow was up to Release 1.6.0.

Applications of TensorFlow

For the most part, TensorFlow applications are advanced and large-scale AI undertakings in the realms of machine learning and deep learning. In powering Google’s RankBrain machine learning system, TensorFlow has been employed to improve the information retrieval capabilities of the company’s flagship search engine.

Google has also used the framework for applications that include automatic email response generation, image classification and optical character recognition, as well as a drug-discovery application that the company worked on with researchers from Stanford University.

What is TensorFlow?

TensorFlow.js is a library for machine learning in JavaScript.

Develop ML models in JavaScript, and use ML directly in the browser or in Node.js.

TensorFlow is a free and open-source software library for machine learning and artificial intelligence, backed by a large open-source community and ecosystem that includes many openly released TensorFlow models. The TensorFlow distributed execution engine abstracts away the many supported devices and provides a high-performance core implemented in C++ for the TensorFlow platform. TensorFlow allows developers to create a graph of computations to perform. Each node in the graph represents a mathematical operation, and each connection represents data.

How TensorFlow works (TensorFlow architecture)

TensorFlow allows developers to create dataflow graphs—structures that describe how data moves through a graph, or a series of processing nodes. Each node in the graph represents a mathematical operation, and each connection or edge between nodes is a multidimensional data array, or tensor.
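A minimal plain-Python sketch of this idea follows. It does not use TensorFlow at all: the `Node` class and the `constant`, `add`, `matmul` and `evaluate` helpers are hypothetical names chosen for illustration. Nodes hold operations, edges are references to input nodes, and nothing is computed until the graph is evaluated, at which point each node runs after its inputs and every intermediate result is cached.

```python
from typing import Callable, Dict, List, Optional


class Node:
    """One operation in a dataflow graph; `inputs` are its incoming edges."""

    def __init__(self, op: Callable, inputs: List["Node"], name: str):
        self.op = op
        self.inputs = inputs
        self.name = name  # assumed unique; used as the cache key


def constant(value, name: str) -> Node:
    # Source node with no inputs: it simply emits its value.
    return Node(lambda: value, [], name)


def add(a: Node, b: Node, name: str) -> Node:
    # Element-wise sum of two equally shaped 2-D arrays (nested lists).
    return Node(lambda x, y: [[p + q for p, q in zip(rx, ry)]
                              for rx, ry in zip(x, y)], [a, b], name)


def matmul(a: Node, b: Node, name: str) -> Node:
    # Matrix product of two 2-D arrays (nested lists).
    def op(x, y):
        cols = list(zip(*y))
        return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in x]
    return Node(op, [a, b], name)


def evaluate(node: Node, cache: Optional[Dict[str, object]] = None):
    """Evaluate a node after its inputs (depth-first), caching each result by name."""
    if cache is None:
        cache = {}
    if node.name not in cache:
        args = [evaluate(n, cache) for n in node.inputs]
        cache[node.name] = node.op(*args)
    return cache[node.name]


# Build y = x @ w + b as a graph first, then run it.
x = constant([[1.0, 2.0]], "x")      # shape (1, 2)
w = constant([[3.0], [4.0]], "w")    # shape (2, 1)
b = constant([[0.5]], "b")           # shape (1, 1)
y = add(matmul(x, w, "xw"), b, "y")

print(evaluate(y))  # [[11.5]]
```

Because `x`, `w` and `b` do not depend on each other, a real runtime could compute them in parallel or on different devices; that independence between nodes is what makes the dataflow model attractive for parallel execution.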

TensorFlow provides all of this for the programmer by way of the Python language. Python is easy to learn and work with, and provides convenient ways to express how high-level abstractions can be coupled together. Nodes and tensors in TensorFlow are Python objects, and TensorFlow applications are themselves Python applications.

The actual math operations, however, are not performed in Python. The libraries of transformations that are available through TensorFlow are written as high-performance C++ binaries. Python just directs traffic between the pieces, and provides high-level programming abstractions to hook them together.

TensorFlow applications can be run on almost any target that’s convenient: a local machine, a cluster in the cloud, iOS and Android devices, CPUs or GPUs. If you use Google’s own cloud, you can run TensorFlow on Google’s custom Tensor Processing Unit (TPU) silicon for further acceleration. The resulting models created by TensorFlow, though, can be deployed on almost any device where they will be used to serve predictions.

TensorFlow 2.0, released in September 2019, revamped the framework in many ways based on user feedback, to make it easier to work with (e.g., by using the relatively simple Keras API for model training) and more performant. Distributed training is easier to run thanks to a new API, and support for TensorFlow Lite makes it possible to deploy models on a greater variety of platforms. However, code written for earlier versions of TensorFlow must be rewritten, sometimes only slightly and sometimes significantly, to take maximum advantage of new TensorFlow 2.0 features.

Run existing models

Use off-the-shelf JavaScript models or convert Python TensorFlow models to run in the browser or under Node.js.

Retrain existing models

Retrain pre-existing ML models using your own data.

Develop ML with JavaScript

Build and train models directly in JavaScript using flexible and intuitive APIs.

The TensorFlow library combines various APIs for building deep learning architectures such as CNNs (convolutional neural networks) and RNNs (recurrent neural networks). TensorFlow’s architecture is based on graph computation, which lets developers visualize the structure of a neural network with TensorBoard.

Why Is TensorFlow Architecture in Demand?

There is a strong trend among machine learning developers toward TensorFlow, a tool that supports much of the essential work of developing models and managing training data sets in ML. The demand is also visible in the number of big brands switching to TensorFlow for machine learning. The question is, why TensorFlow? Many developers consider it the best library because it is built to be convenient for everyone. Combining TensorFlow with DevOps practices also helps in detecting errors.

Use Cases of TensorFlow

Learn how TensorFlow solves real, everyday machine learning problems.

Explore how companies from a wide variety of industries implement ML to solve their biggest problems. From healthcare to social networks and even e-commerce, ML can be integrated into your industry and company.

The ubiquity, openness, and large community have pushed TensorFlow into the enterprise for solving real-world applications such as analyzing images, generating data, natural language processing, intelligent chatbots, robotics, and more. For corporations of all types and sizes, the use cases that fit well with TensorFlow include:

- Speech recognition

- Image recognition

- Object tagging in videos

- Self-driving cars

- Sentiment analysis

- Detection of flaws

- Text summarization

- Mobile image and video processing

- Air, land and sea drones

Features and Advantages of Using TensorFlow

Advantages of TensorFlow

1. Open-source platform

Because it is an open-source platform, TensorFlow is available to all users and is ready for building any kind of system on top of it.

2. Data visualization

TensorFlow provides a better way of visualizing data with its graphical approach. It also allows easy debugging of nodes with the help of TensorBoard, which saves the effort of inspecting the whole code and makes it easier to understand and troubleshoot the neural network.

3. Keras friendly

TensorFlow is compatible with Keras, which lets users write high-level sections of functionality in Keras. TensorFlow’s own implementation of Keras (tf.keras) also supports TensorFlow-specific functionality such as tf.data pipelines, Estimators, and eager execution.

The Keras functional API supports a variety of model topologies with different combinations of inputs, outputs, and layers.

4. Scalable

Almost every operation can be performed using this platform. Because TensorFlow can be deployed on almost any machine and represents models as graphs, users can develop many kinds of systems with it.

As a result, companies such as Airbnb, Dropbox, Intel, and Snapchat have used TensorFlow in their systems.

5. Compatible

It can be used from many languages, such as Python, C++, and JavaScript, with official or community-maintained bindings for others such as C#, Ruby, and Swift. This allows users to work in an environment they are comfortable in.

6. Parallelism

TensorFlow is also used as a hardware acceleration library because its models parallelize well. It uses different distribution strategies for GPU and CPU systems.

A user can choose to run code on either architecture, depending on the modeling requirements. If no device is specified, TensorFlow places operations on a GPU when one is available. This process reduces memory allocation to an extent.

7. Architectural support

TensorFlow also has its own hardware architecture, the TPU, which can perform many machine learning computations faster than GPUs and CPUs. Models built for TPUs can be deployed on the cloud at a lower cost and executed faster.

8. Graphical support

Deep learning uses TensorFlow for its development as it allows building neural networks with the help of graphs that represent operations as nodes.

TensorFlow is used in multiple domains such as image recognition, voice detection, motion detection, and time series, so it suits a wide range of user requirements.

Now that we have seen the advantages of TensorFlow, let us look at some of its disadvantages.

Disadvantages of TensorFlow

1. Frequent updates

TensorFlow releases updates every 2-3 months, which increases the overhead for users, who must install each update and integrate it with their existing systems.

2. Inconsistent

TensorFlow provides homonyms that share similar names but have different implementations, which makes them confusing to remember and use. For example, tf.nn.conv2d, tf.nn.convolution, tf.layers.conv2d, and tf.layers.Conv2D have different meanings, which makes TensorFlow’s usability inconsistent.

3. Architectural limitation

The first-generation TPU could only execute a model (inference), not train it. Later TPU generations, such as the Cloud TPUs offered on Google Cloud, support training as well.

4. Dependency

Although TensorFlow reduces the length of code and makes machine learning easier to access, it adds a level of complexity to its use: all code must run on a platform that supports TensorFlow, which increases the dependencies needed for execution.

5. Symbolic loops

TensorFlow lags in providing symbolic loops for sequences of indefinite length. It works for sequences of definite length, which keeps it usable. This is one reason it is referred to as a low-level API.

6. GPU Support

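Historically, TensorFlow’s official GPU support has targeted mainly NVIDIA GPUs (through CUDA), and Python has had the most complete support for GPU programming. Other GPU vendors and languages have had little or no official support.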

7. Slow speed

TensorFlow can be slower than some of its competitors, and some users find it less usable than other frameworks.

8. Support for Windows

TensorFlow does not provide as many features for Windows users as it does for Linux users. Still, Windows users can install TensorFlow using the Anaconda prompt or the pip package.

Features of TensorFlow

Let us learn about some exciting TensorFlow features:

1. Open-source Library

It is an open-source library that allows rapid and easier calculations in machine learning. It makes it easier to move algorithms from one TensorFlow tool to another.

With the help of Python, it provides the front-end API for developing various machine learning and deep learning algorithms.

2. Easy to run

We can execute TensorFlow applications on various platforms such as Android, the cloud, and iOS, and on various architectures such as CPUs and GPUs. This allows it to be executed on various embedded platforms.

Google has also designed its own hardware for training neural models, known as Cloud TPUs (Tensor Processing Units).

3. Fast Debugging

It lets you inspect each node, i.e., each operation, individually as it is evaluated. TensorBoard works with the graph to visualize its behavior in a dashboard. TensorFlow’s computational graph methods support an easy-to-execute paradigm.

4. Effective

It works with multi-dimensional arrays through the tensor data structure, which represents the edges in the data flow graph. Each tensor is characterized by three properties: rank, data type, and shape.
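In TensorFlow itself these properties are exposed as `tensor.shape`, `tensor.dtype` and `tf.rank(tensor)`. To show what the three properties mean without requiring TensorFlow, here is a minimal plain-Python sketch that reads them off a nested list; the `shape_of`, `element_type` and `describe` helpers are hypothetical and assume rectangular (non-ragged) input.

```python
def shape_of(value) -> tuple:
    """Shape of a rectangular nested list, read along the first element of each level."""
    shape = []
    while isinstance(value, list):
        shape.append(len(value))
        value = value[0] if value else None
    return tuple(shape)


def element_type(value) -> str:
    """Name of the Python type of the innermost elements ('NoneType' if empty)."""
    while isinstance(value, list):
        value = value[0] if value else None
    return type(value).__name__


def describe(value) -> dict:
    shape = shape_of(value)
    # Rank is simply the number of dimensions, i.e. the length of the shape.
    return {"rank": len(shape), "type": element_type(value), "shape": shape}


print(describe(7.0))                       # {'rank': 0, 'type': 'float', 'shape': ()}
print(describe([1, 2, 3]))                 # {'rank': 1, 'type': 'int', 'shape': (3,)}
print(describe([[1.0, 2.0], [3.0, 4.0]]))  # {'rank': 2, 'type': 'float', 'shape': (2, 2)}
```

A scalar is a rank-0 tensor with an empty shape, a vector is rank 1, and a matrix is rank 2; higher ranks simply add more nesting levels.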

5. Scalable

It can be used to predict stock prices, product demand, and so on by training the same models on different data sets. It also supports synchronous and asynchronous learning techniques and data ingestion, and its graph-based approach enables parallelism in distributed execution.

6. Easy Experimentation

TensorFlow feature columns act as a bridge between raw data and Estimators: they transform raw data into a form that neural networks can understand and use for training. This adds agility to the model and enables fast insights during development.
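Feature columns are TensorFlow’s own API (for example `tf.feature_column.categorical_column_with_vocabulary_list` wrapped in `tf.feature_column.indicator_column`). The plain-Python sketch below only illustrates the kind of transformation they perform: a raw categorical value becomes a one-hot vector, and a numeric value passes through unchanged. The `make_features` helper, the field names and the vocabulary are hypothetical.

```python
from typing import Dict, List

# Hypothetical vocabulary for a categorical "color" feature.
COLOR_VOCAB = ["red", "green", "blue"]


def one_hot(value: str, vocab: List[str]) -> List[float]:
    """Map a raw string to a one-hot vector; unknown values become all zeros."""
    return [1.0 if value == v else 0.0 for v in vocab]


def make_features(raw: Dict[str, object]) -> List[float]:
    """Turn one raw record into the flat numeric vector a network can consume."""
    numeric = [float(raw["price"])]                        # numeric column: pass through
    categorical = one_hot(str(raw["color"]), COLOR_VOCAB)  # categorical column: one-hot
    return numeric + categorical


print(make_features({"price": 9.5, "color": "green"}))  # [9.5, 0.0, 1.0, 0.0]
```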

7. Abstraction

TensorFlow provides a level of abstraction that reduces code length and cuts development time. Users can focus on the logic rather than on the details of how input is passed to functions, and can choose the model that best fits the system’s requirements.

Best Alternatives to TensorFlow

Top Alternatives to TensorFlow

- MATLAB.

- IBM Watson Studio.

- Google Cloud AI Platform.

- Amazon SageMaker.

- Google Cloud AutoML.

- RapidMiner.

- Azure Machine Learning Studio.

- RStudio.

Best Resources, Tutorials and Guides for TensorFlow


Interview Questions and Answers for TensorFlow

1. What is TensorFlow?

Answer: TensorFlow is one of the most widely used libraries for machine learning. Released in 2015 by the Google Brain team, it provides an easy-to-use low-level toolkit that can handle complex mathematical operations and learning architectures.

2. What is the meaning of TensorBoard?

Answer: TensorBoard is a Graphical User Interface (GUI) provided by TensorFlow to help users visualize graphs, plots, and other metrics easily without having to write a lot of code. TensorBoard offers many advantages in terms of readability, ease of use, and performance metrics.

3. What are tensors?

Answer: Tensors are similar to arrays in programming languages, but they can have any number of dimensions. A tensor can be considered a generalization of matrices to an n-dimensional array. TensorFlow provides methods for building functions of tensors and computing their derivatives easily; this automatic differentiation is what sets TensorFlow tensors apart from NumPy arrays.
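The derivative computation mentioned above is automatic differentiation, which TensorFlow 2 exposes through `tf.GradientTape`. The sketch below is not TensorFlow code: it is a minimal, scalar-only, plain-Python illustration of reverse-mode automatic differentiation, the general technique, using a hypothetical `Var` class that records each operation and then propagates gradients backward.

```python
class Var:
    """A scalar that records how it was computed, so gradients can flow back."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (input Var, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        # d(a + b)/da = 1 and d(a + b)/db = 1
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        # d(a * b)/da = b and d(a * b)/db = a
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    def backward(self):
        """Reverse-mode pass: visit nodes in reverse topological order."""
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for parent, _ in v.parents:
                    visit(parent)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            for parent, local in v.parents:
                parent.grad += local * v.grad  # chain rule, accumulated over paths


# f(x, y) = x * y + x, so df/dx = y + 1 and df/dy = x
x, y = Var(3.0), Var(4.0)
f = x * y + x
f.backward()
print(f.value, x.grad, y.grad)  # 15.0 5.0 3.0
```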

4. Are there any limitations to using TensorFlow?

Answer: Even though TensorFlow provides numerous advantages, it has a few caveats in the current versions:

- No support for OpenCL (Open Computing Language) yet

- GPU memory conflicts when used with Theano

- Can be overwhelming for beginners to get started


5. What are some of the advantages of using TensorFlow?

Answer: TensorFlow has numerous advantages, which is why it is one of the most widely used frameworks for machine learning. Some of its advantages are given below:

- Platform independence

- Usage of GPU for distributed computing

- Auto-differentiation capability

- Open-source and large community

- Highly customizable based on requirements

- Support for asynchronous computations

6. What is a graph explorer in TensorFlow?

Answer: A graph explorer is used to visualize a graph on TensorBoard. It is also used to inspect the operations of a model in TensorFlow. To easily understand the flow in a graph, it is recommended to use the graph visualizer in TensorBoard.

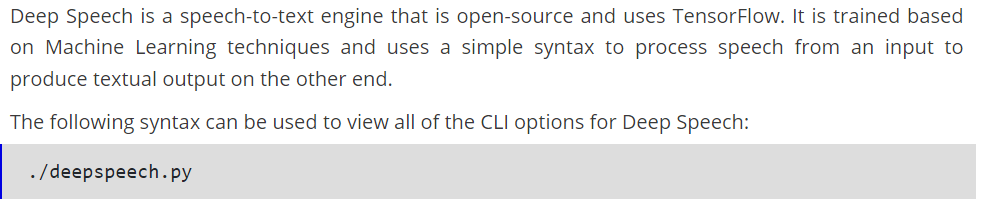

7. What is the meaning of Deep Speech?

Answer:

8. What are the types of tensors available in TensorFlow?

Answer: There are three main types of tensors:

- Constant tensors

- Variable tensors

- Placeholder tensors (TensorFlow 1.x; available in 2.x only through tf.compat.v1)

9. How is variable lifetime tracked in TensorFlow?

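Answer: In TensorFlow 1.x, a variable’s lifetime begins when it is initialized by running its tf.Variable.initializer operation in a session.

Later, after use, the session can be closed with the tf.Session.close method, which releases the variable’s resources. In TensorFlow 2.x there are no sessions; a tf.Variable is a Python object, and it is deallocated once no references to it remain.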

10. What is the use of a histogram dashboard in TensorFlow?

Answer: The histogram dashboard displays, in a simple visual way, how the statistical distribution of a tensor changes over time. Each histogram chart shows a slice of data representing the values the tensor held at a particular step.
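TensorBoard builds these charts itself from logged summaries (for example, values written with `tf.summary.histogram`). As a rough illustration of what one slice contains, the plain-Python sketch below buckets a tensor’s values at a single step into equal-width bins. The `histogram` helper and the bin count are hypothetical choices and do not reproduce TensorBoard’s actual bucketing scheme.

```python
from typing import List, Tuple


def histogram(values: List[float], bins: int = 4) -> List[Tuple[float, float, int]]:
    """Count non-empty `values` into `bins` equal-width buckets spanning [min, max]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal
    counts = [0] * bins
    for v in values:
        idx = min(int((v - lo) / width), bins - 1)  # the max value goes in the last bin
        counts[idx] += 1
    return [(lo + i * width, lo + (i + 1) * width, c) for i, c in enumerate(counts)]


# Values of a (flattened) weight tensor at one training step.
step_values = [0.0, 0.1, 0.2, 0.5, 0.6, 0.9, 1.0, 1.0]
for left, right, count in histogram(step_values):
    print(f"[{left:.2f}, {right:.2f}]: {count}")
```

Logging one such slice per training step and stacking them over time is what lets the dashboard show how a distribution drifts as training progresses.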

11. How can data be loaded into TensorFlow?

Answer: There are two ways that you can use to load data into TensorFlow before training Machine Learning algorithms:

- Loading data into memory: The data is loaded into memory as a single array. This is the easiest way to load data.

- TensorFlow data pipeline: TensorFlow’s built-in APIs (such as tf.data) are used to load the data and feed it to the algorithm.
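In TensorFlow the pipeline approach is usually built with the `tf.data` API, for example `tf.data.Dataset.from_tensor_slices(...).batch(...)`. The plain-Python sketch below contrasts the two approaches without TensorFlow: the first holds every example in one in-memory list, while the second is a hypothetical generator-based pipeline that reads and batches lazily, so only one batch is in memory at a time. `read_records` stands in for a real data source and is an assumption.

```python
import itertools
from typing import Iterable, Iterator, List


def read_records(n: int) -> Iterator[int]:
    """Stand-in for reading examples from disk one at a time (hypothetical source)."""
    for i in range(n):
        yield i


# Approach 1: load everything into memory as a single array (simple, memory-hungry).
all_data = list(read_records(10))


# Approach 2: a lazy pipeline that yields fixed-size batches on demand.
def batched(records: Iterable[int], batch_size: int) -> Iterator[List[int]]:
    it = iter(records)
    while True:
        batch = list(itertools.islice(it, batch_size))
        if not batch:
            return
        yield batch


for batch in batched(read_records(10), batch_size=4):
    print(batch)  # [0, 1, 2, 3], then [4, 5, 6, 7], then [8, 9]
```

Like `Dataset.batch` with its default `drop_remainder=False`, this sketch keeps the final, smaller batch.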

12. What are the types of dashboards supported by TensorFlow?

Answer: TensorFlow supports a variety of dashboards that are used to perform numerous tasks on TensorBoard easily:

- Scalar dashboard

- Image dashboard

- Graph dashboard

- Text dashboard

- Distribution dashboard

- Histogram dashboard

13. How is audio stored in the audio dashboard?

Answer: The audio dashboard primarily lets users embed playable audio widgets for audio stored in summary files. tf.summary.audio writes the audio data to these files, and the dashboard uses the tagging system to embed the latest audio for each tag, according to the storage policies.

14. What is the simple working of an algorithm in TensorFlow?

Answer: There are five main steps that govern the working of the majority of algorithms in TensorFlow. They are as follows:

- Data import or data generation, alongside setting up a data pipeline

- Feeding the data through the computational graph

- Defining a loss function to evaluate the output

- Backpropagation to update the model’s parameters

- Iterating until output criteria are met
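As a rough illustration of these five steps, the plain-Python sketch below fits a one-variable linear model y = w * x + b with gradient descent. It deliberately avoids TensorFlow, where the gradients would come from automatic differentiation instead of the hand-derived formulas used here; the data, learning rate, and stopping threshold are illustrative assumptions.

```python
# Step 1: data generation. Points on the line y = 2x + 1 (noise-free for clarity).
xs = [float(i) for i in range(10)]
ys = [2.0 * x + 1.0 for x in xs]

w, b = 0.0, 0.0   # model parameters, deliberately initialized away from the answer
lr = 0.01         # learning rate (assumed; small enough to converge for this data)
n = len(xs)

# Step 5: iterate until the output criterion is met (or a step budget runs out).
for step in range(10_000):
    # Step 2: push the inputs through the model's computation.
    preds = [w * x + b for x in xs]
    # Step 3: loss function, here mean squared error.
    loss = sum((p - t) ** 2 for p, t in zip(preds, ys)) / n
    if loss < 1e-8:
        break
    # Step 4: gradients of the loss with respect to w and b (derived by hand),
    # then a gradient-descent update of the parameters.
    grad_w = sum(2.0 * (p - t) * x for p, t, x in zip(preds, ys, xs)) / n
    grad_b = sum(2.0 * (p - t) for p, t in zip(preds, ys)) / n
    w -= lr * grad_w
    b -= lr * grad_b

print(f"w={w:.3f}, b={b:.3f}, loss={loss:.2e}, steps={step}")
```

Note that backpropagation changes the model’s parameters (`w` and `b`), never the training data itself.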

15. Can TensorFlow be deployed onto a container architecture?

Answer: Yes, TensorFlow can easily be used with containerization tools like Docker. Containers are commonly used alongside TensorFlow to deploy models, for example a text classification model built with convolutional neural networks.

16. What are some of the components needed to deploy a Lite model file?

Answer: In TensorFlow, three main components are used to deploy a Lite model:

- Java API: Used as a wrapper around the C++ API for Android

- C++ API: Used to load the TensorFlow Lite model and call the interpreter

- Interpreter: Used to handle kernel loading and the execution of the model

17. What are the methods that can be used to handle overfitting in TensorFlow?

Answer: Three common methods can be used to handle overfitting when using TensorFlow:

- Batch normalization

- Regularization

- Dropout
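In Keras, dropout is available as the `tf.keras.layers.Dropout` layer. The plain-Python sketch below shows the "inverted dropout" idea behind such layers: during training each activation is zeroed with probability `rate` and the survivors are scaled by 1 / (1 - rate), so the expected value of each unit is unchanged; at inference time the input passes through untouched. The `dropout` function and the fixed seed are illustrative assumptions, not TensorFlow code.

```python
import random
from typing import List, Optional


def dropout(xs: List[float], rate: float, training: bool,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout on a flat list of activations."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(xs)  # inference (or no dropout): pass values through unchanged
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - rate)  # keeps the expected value of each unit unchanged
    # Each unit is dropped independently with probability `rate`.
    return [0.0 if rng.random() < rate else x * scale for x in xs]


activations = [1.0] * 8
print(dropout(activations, rate=0.5, training=True, rng=random.Random(0)))
print(dropout(activations, rate=0.5, training=False))  # [1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
```

Because a different random subset of units is dropped at each training step, the network cannot rely on any single unit, which is why dropout reduces overfitting.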

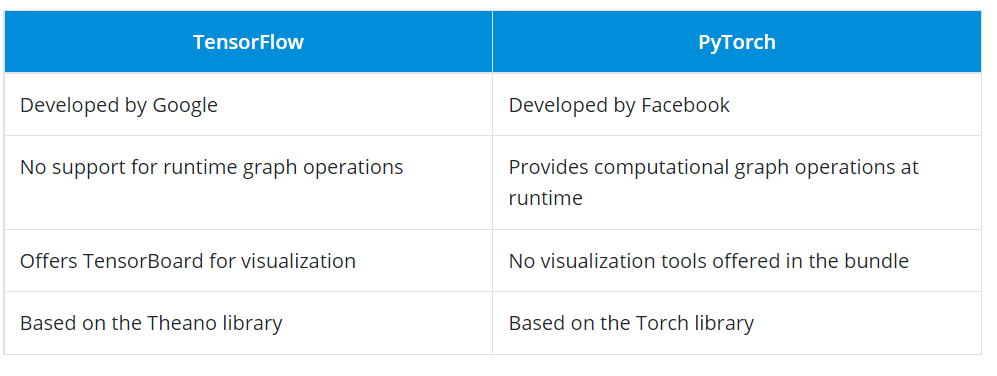

18. Differentiate between TensorFlow and PyTorch.

Answer:

19. What is TensorFlow.js?

Answer: TensorFlow.js is a library that lets users run machine learning models in the browser. Its high-level JavaScript APIs support several backends, such as WebGL, which uses the GPU (if available) to accelerate computation. Models can be imported, retrained, and executed using just a browser.

20. What are the languages that are supported in TensorFlow?

Answer: TensorFlow supports a wide variety of languages for programmers to write the code in. The preferred language presently is Python.

However, experimental support is being implemented for other languages, such as Go, Java, and C++. Also, language bindings for Ruby, Scala, and Julia are being developed by the open-source community.

I’m a DevOps/SRE/DevSecOps/Cloud expert passionate about sharing knowledge and experiences. I work at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I reviewed, and SEO strategies at Wizbrand.

Please find my social handles below:

Rajesh Kumar Personal Website

Rajesh Kumar at YOUTUBE

Rajesh Kumar at INSTAGRAM

Rajesh Kumar at X

Rajesh Kumar at FACEBOOK

Rajesh Kumar at LINKEDIN

Rajesh Kumar at PINTEREST

Rajesh Kumar at QUORA

Rajesh Kumar at WIZBRAND

Starting: 1st of Every Month

+91 8409492687

Contact@DevOpsSchool.com