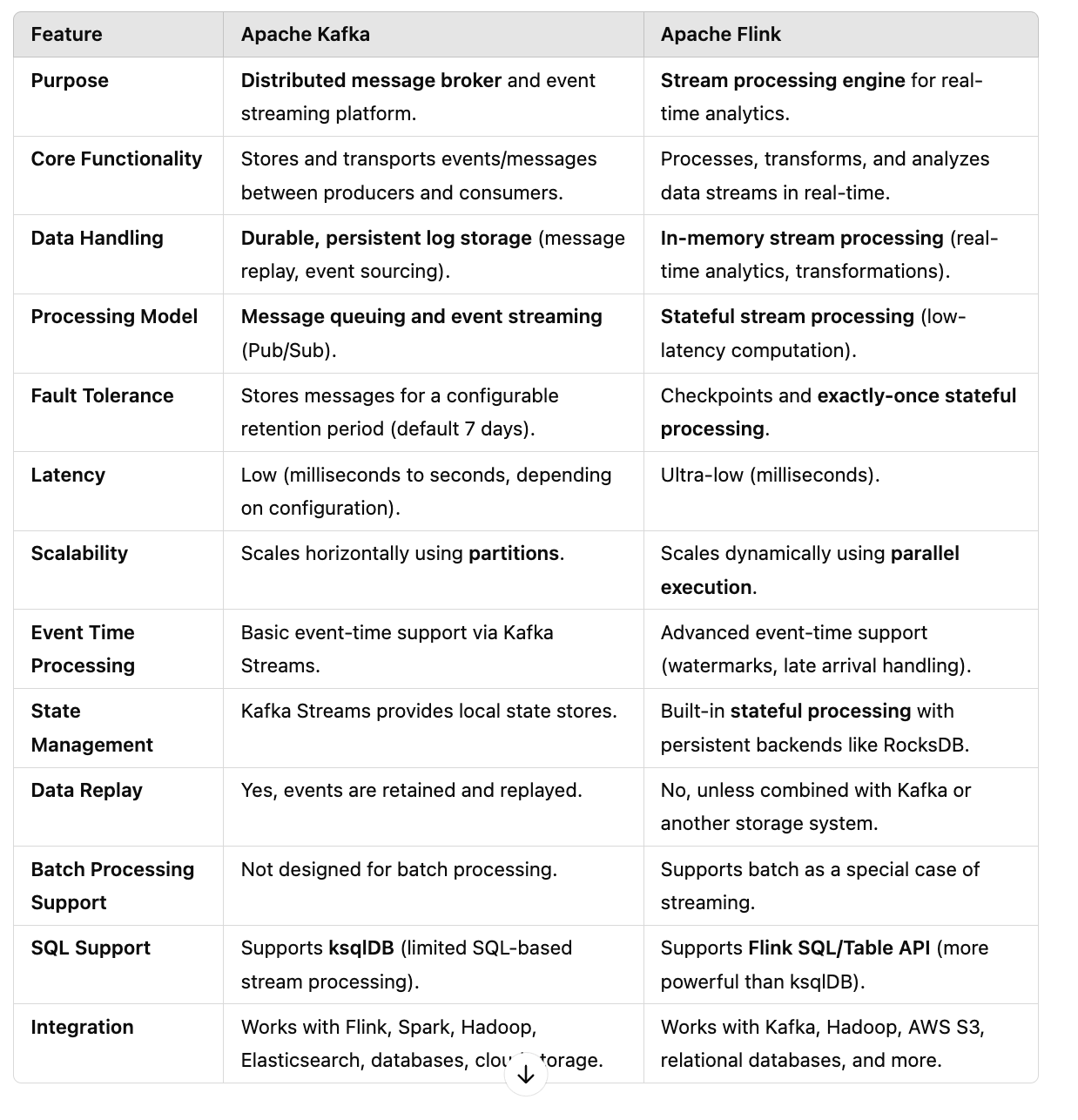

Major Differences Between Apache Kafka and Apache Flink

Apache Kafka and Apache Flink are both used in real-time data processing, but they serve different purposes in the data pipeline.

| Feature | Apache Kafka | Apache Flink |

|---------|---------------|--------------|

| Purpose | Distributed message broker and event streaming platform. | Stream processing engine for real-time analytics. |

| Core Functionality | Stores and transports events/messages between producers and consumers. | Processes, transforms, and analyzes data streams in real-time. |

| Data Handling | Durable, persistent log storage (message replay, event sourcing). | In-memory stream processing (real-time analytics, transformations). |

| Processing Model | Message queuing and event streaming (Pub/Sub). | Stateful stream processing (low-latency computation). |

| Fault Tolerance | Stores messages for a configurable retention period (default 7 days). | Checkpoints and exactly-once stateful processing. |

| Latency | Low (milliseconds to seconds, depending on configuration). | Ultra-low (milliseconds). |

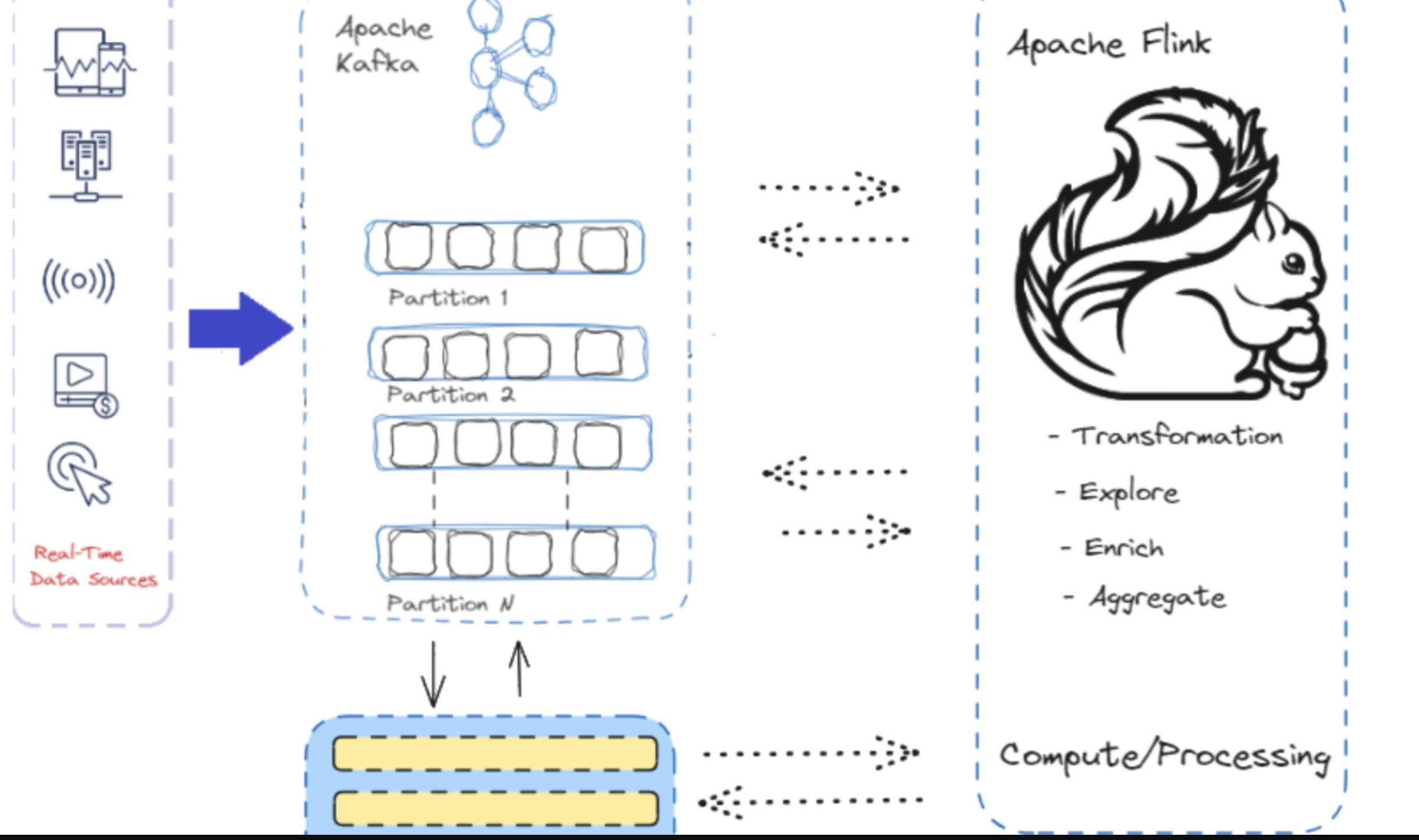

| Scalability | Scales horizontally using partitions. | Scales dynamically using parallel execution. |

| Event Time Processing | Basic event-time support via Kafka Streams. | Advanced event-time support (watermarks, late arrival handling). |

| State Management | Kafka Streams provides local state stores. | Built-in stateful processing with persistent backends like RocksDB. |

| Data Replay | Yes, events are retained and replayed. | No, unless combined with Kafka or another storage system. |

| Batch Processing Support | Not designed for batch processing. | Supports batch as a special case of streaming. |

| SQL Support | Supports ksqlDB (limited SQL-based stream processing). | Supports Flink SQL/Table API (more powerful than ksqlDB). |

| Integration | Works with Flink, Spark, Hadoop, Elasticsearch, databases, cloud storage. | Works with Kafka, Hadoop, AWS S3, relational databases, and more. |

When to Use Kafka vs Flink

When to Use Apache Kafka

- As a message broker to transport real-time events between systems.

- For event sourcing where events must be stored, replayed, and persisted.

- For decoupling microservices, allowing asynchronous communication.

- For log aggregation and audit trails (e.g., logging systems, monitoring data).

- For streaming ETL pipelines, ingesting data from multiple sources and forwarding it to databases or processing engines.

- For event-driven architectures, where producers publish events and multiple consumers process them.
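The properties these use cases rely on (an append-only log, per-consumer offsets, and replay) can be illustrated with a toy in-memory model. This is a minimal sketch, not the Kafka client API: the `ToyLog` class, its method names, and the group names are all hypothetical, and a real topic would be partitioned, replicated, and persisted to disk.

```python
from collections import defaultdict


class ToyLog:
    """In-memory stand-in for a single topic partition (not the Kafka API)."""

    def __init__(self):
        self._records = []                  # append-only list; index == offset
        self._committed = defaultdict(int)  # consumer group -> next offset to read

    def append(self, record):
        """Producer side: append a record and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        """Consumer side: return unread records for a group and advance its offset."""
        start = self._committed[group]
        batch = self._records[start:start + max_records]
        self._committed[group] = start + len(batch)
        return batch

    def seek(self, group, offset):
        """Move a group's position so that earlier records can be replayed."""
        self._committed[group] = offset


log = ToyLog()
for event in ["order_created", "order_paid", "order_shipped"]:
    log.append(event)

billing_first = log.poll("billing")   # each group tracks its own position,
audit_first = log.poll("audit")       # so both groups see every event
log.seek("billing", 0)                # rewind one group to the beginning
billing_replay = log.poll("billing")  # the same events are delivered again
```

Reading does not remove records, which is why several consumers can process the same events independently and why a consumer can be rewound to reprocess history.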

When Not to Use Kafka

- For real-time analytics and stream processing on its own (the Kafka broker doesn't process data; it stores and transports it).

- For complex event-time windowing (Kafka Streams is limited compared to Flink).

- When stateful transformations are required (Kafka Streams supports this but is less powerful than Flink).

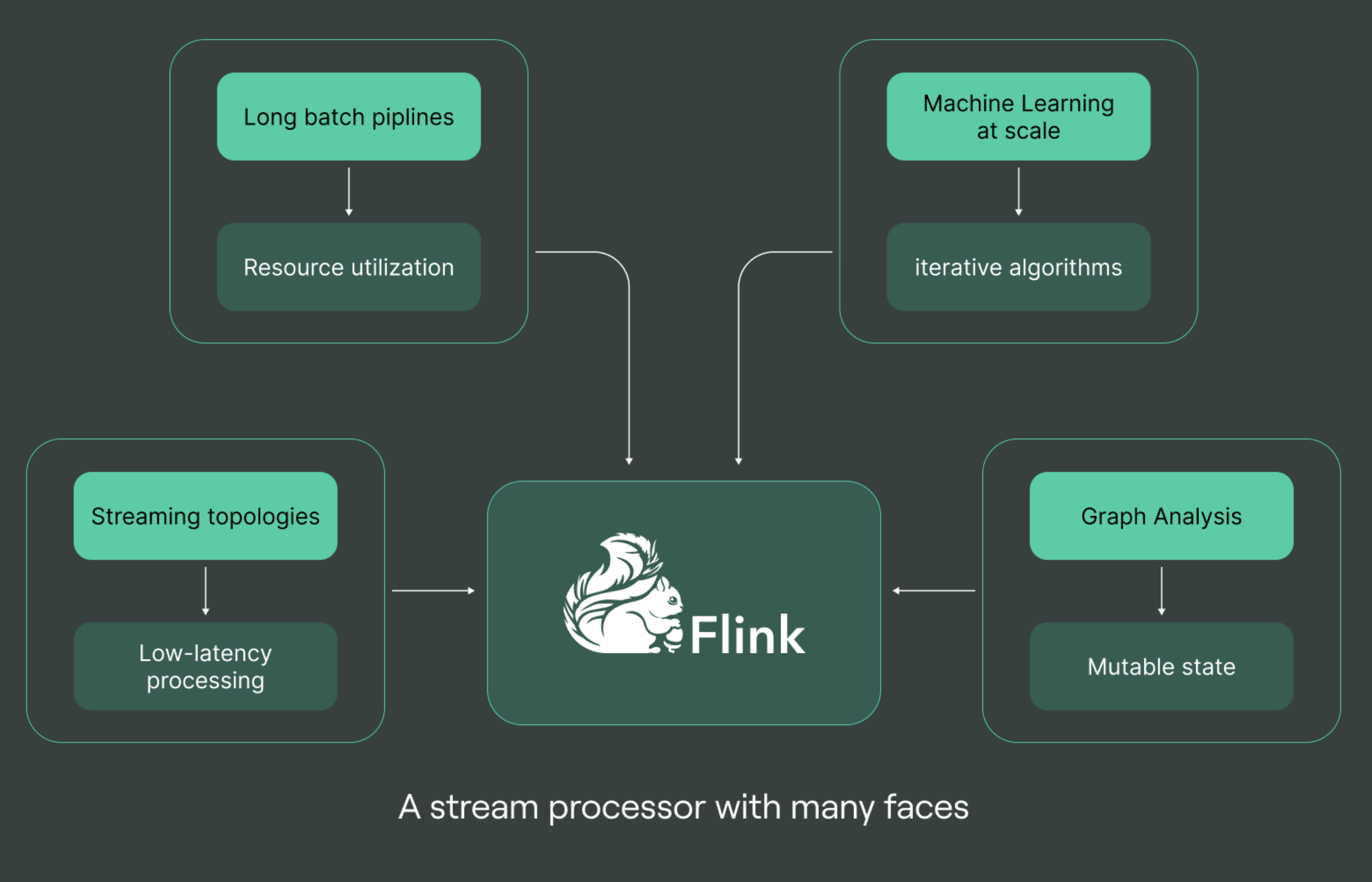

When to Use Apache Flink

- For real-time analytics, such as fraud detection, anomaly detection, and live dashboards.

- For event-time processing, handling late-arriving data with watermarks.

- For complex streaming transformations, such as windowing, joins, aggregations, and stateful computations.

- For machine learning on streaming data, such as real-time recommendations.

- For stream-based ETL, where data needs filtering, deduplication, and enrichment before reaching the destination.
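To make event-time processing and watermarks more concrete, here is a minimal sketch in plain Python. It is not the Flink API: the function name, the window size, and the out-of-orderness bound are illustrative assumptions. The watermark simply trails the largest timestamp seen so far by a fixed amount, a window fires once the watermark passes its end, and anything arriving for an already-fired window is reported as late.

```python
from collections import defaultdict

WINDOW_SIZE = 10       # seconds per tumbling window (illustrative value)
MAX_OUT_OF_ORDER = 5   # how far the watermark trails the largest timestamp seen


def tumbling_window_counts(events):
    """Count events per event-time window.

    `events` is an iterable of (event_time_seconds, key) in arrival order.
    Returns (results, late): results are (window_start, count) pairs in the
    order the windows fired; late holds events whose window had already fired.
    """
    open_windows = defaultdict(int)   # window start -> count so far
    results, late = [], []
    max_ts = float("-inf")
    watermark = float("-inf")

    for ts, key in events:
        window_start = ts - ts % WINDOW_SIZE
        if window_start + WINDOW_SIZE <= watermark:
            late.append((ts, key))    # the window for this event already fired
            continue
        open_windows[window_start] += 1
        max_ts = max(max_ts, ts)
        watermark = max_ts - MAX_OUT_OF_ORDER
        # Fire every window whose end is at or before the watermark.
        for start in sorted(open_windows):
            if start + WINDOW_SIZE <= watermark:
                results.append((start, open_windows.pop(start)))

    # End of input: flush whatever is still open.
    for start in sorted(open_windows):
        results.append((start, open_windows[start]))
    return results, late


arrivals = [(1, "a"), (4, "a"), (12, "a"), (8, "a"), (16, "a"), (3, "a"), (21, "a")]
results, late = tumbling_window_counts(arrivals)
```

In this run the event with timestamp 8 arrives out of order but is still counted in the first window, because the watermark has not yet passed 10. The event with timestamp 3 arrives after that window has fired and is treated as late.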

When Not to Use Flink

- As a message broker or event store (Flink does not store events; it processes them).

- For simple data transport (Kafka is a better fit for message passing).

- For high-throughput durable storage (Flink works best when paired with Kafka for data ingestion).

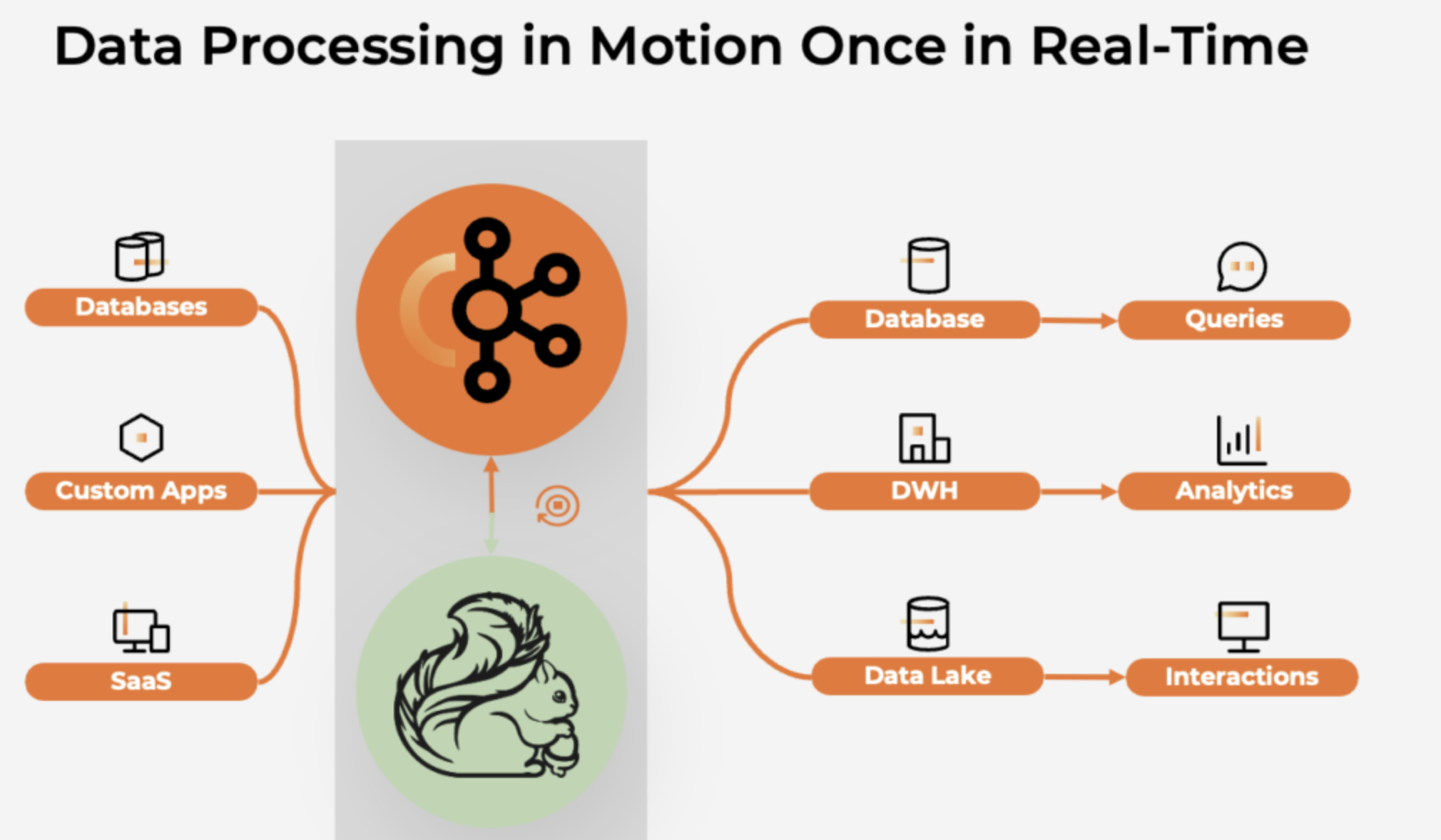

Kafka and Flink Together

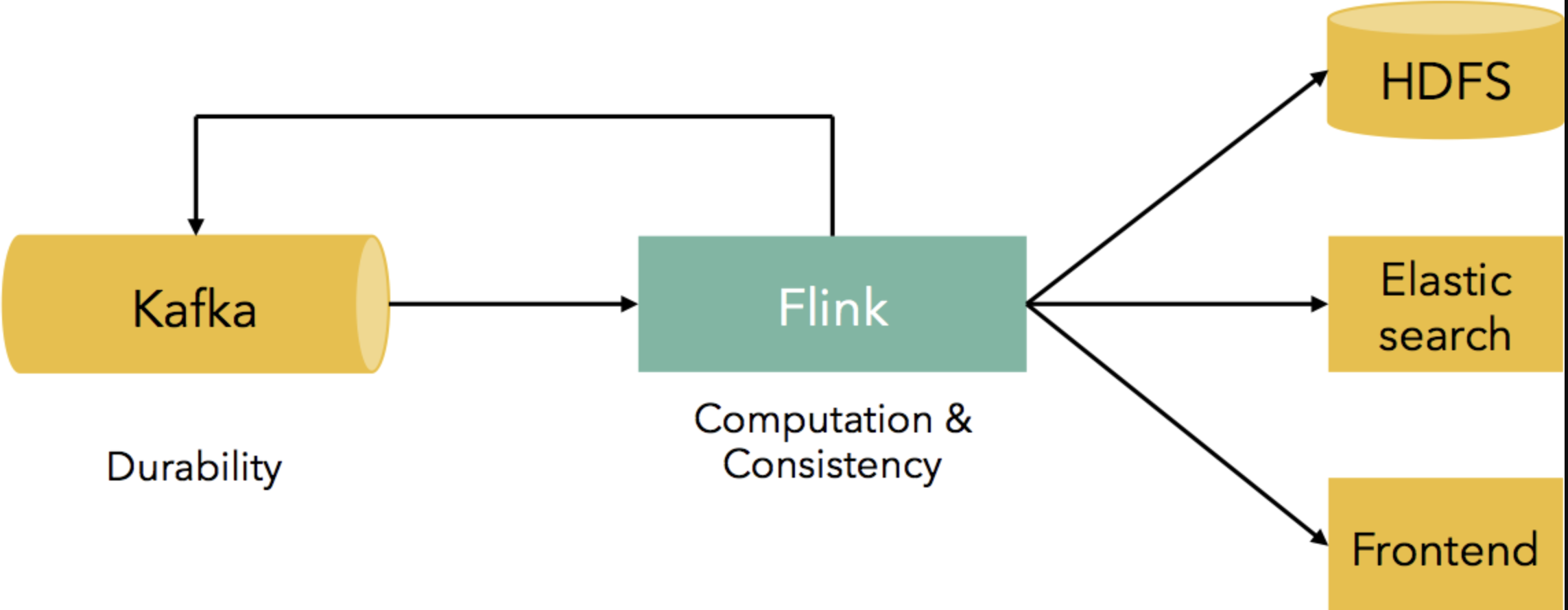

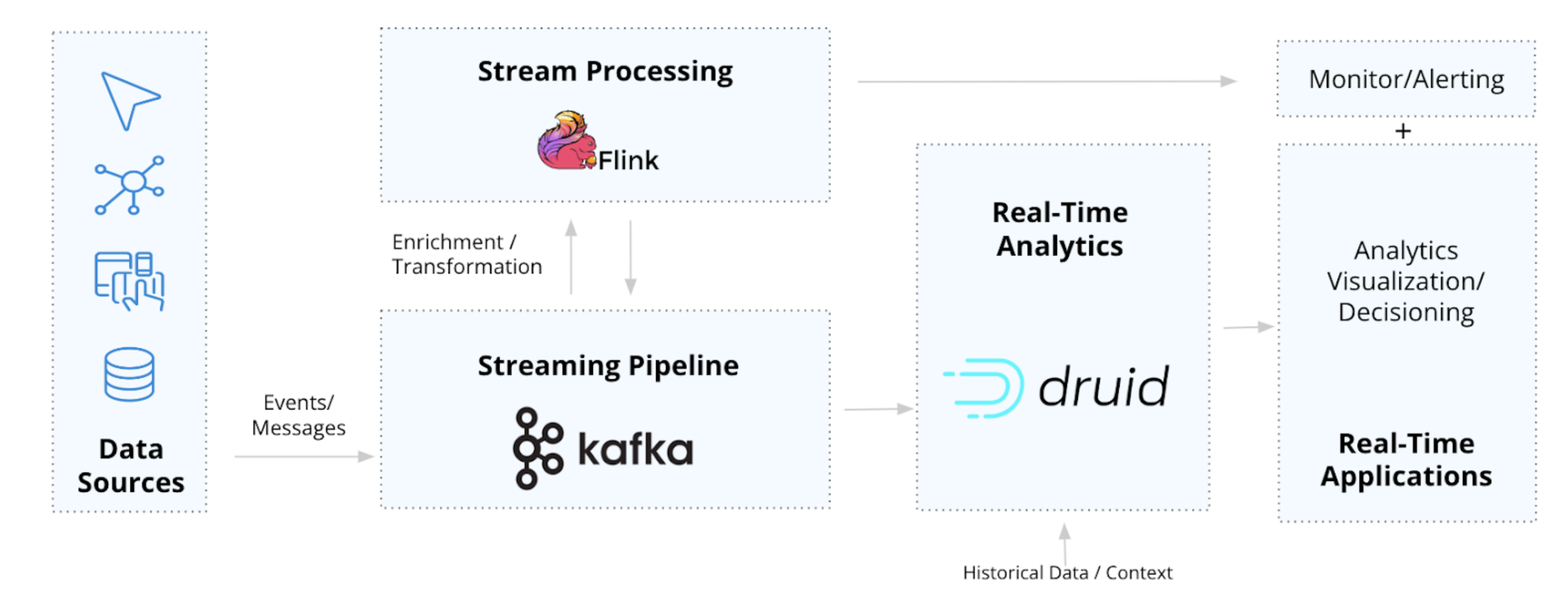

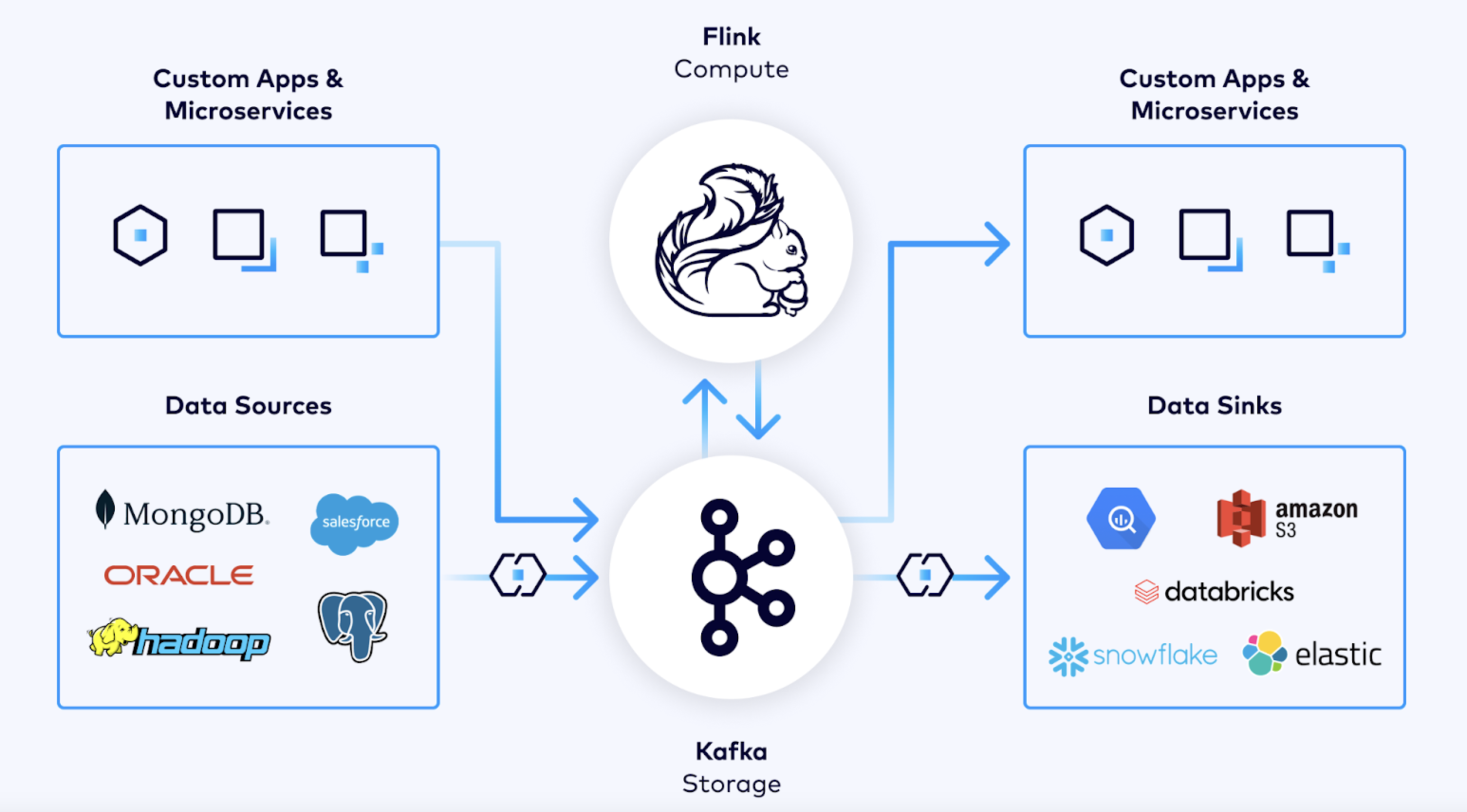

Many real-world architectures use Kafka and Flink together:

- Kafka acts as the event store and message broker.

- Flink consumes data from Kafka, processes it, and sends results to databases, dashboards, or other services.
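One reason this pairing works well is that a replayable log lets the processor recover from failure by restoring its last checkpoint and re-reading from the offset stored with it. The sketch below models that idea in plain Python under simplifying assumptions: the log is a list, the checkpoint is a dict standing in for durable storage, and `process`, `CrashError`, and `crash_at` are hypothetical names rather than Flink or Kafka APIs.

```python
class CrashError(Exception):
    """Simulated worker failure."""


def process(log, checkpoint, checkpoint_every=2, crash_at=None):
    """Sum per-key amounts from `log`, resuming from `checkpoint`.

    `checkpoint` is {"offset": next offset to read, "state": per-key totals}.
    It is updated in place, which stands in for durable checkpoint storage.
    """
    offset = checkpoint["offset"]
    state = dict(checkpoint["state"])   # working copy; lost if the worker crashes
    while offset < len(log):
        if offset == crash_at:
            raise CrashError(f"crashed before processing offset {offset}")
        key, amount = log[offset]
        state[key] = state.get(key, 0) + amount
        offset += 1
        if offset % checkpoint_every == 0:
            # Snapshot offset and state together so they stay consistent.
            checkpoint["offset"] = offset
            checkpoint["state"] = dict(state)
    checkpoint["offset"] = offset
    checkpoint["state"] = dict(state)
    return state


log = [("alice", 10), ("bob", 5), ("alice", 7), ("bob", 1), ("alice", 3)]
checkpoint = {"offset": 0, "state": {}}

try:
    process(log, checkpoint, crash_at=3)   # first attempt fails part-way through
except CrashError:
    pass

checkpoint_after_crash = {"offset": checkpoint["offset"],
                          "state": dict(checkpoint["state"])}
totals = process(log, checkpoint)          # restart from the checkpointed offset
```

The record at offset 2 is read twice (once before the crash, once after the restart), but because state is rolled back to the same point as the offset, it is counted only once in the final totals.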

Example Use Case

Fraud Detection System

- Kafka collects and stores transactions from a banking system.

- Flink continuously processes the transactions, checking for fraud patterns.

- If fraud is detected, Flink sends alerts to a database or notification system.

- Kafka stores all transactions so that past events can be analyzed later.
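The processing step in this flow can be sketched as a stateful, per-account check over an ordered transaction stream. This is a plain-Python illustration, not Flink code: the two rules, their thresholds, and the field names (`account`, `ts`, `amount`) are hypothetical, and the list `transaction_log` stands in for a Kafka topic.

```python
from collections import defaultdict, deque

MAX_TXNS_PER_WINDOW = 3   # hypothetical rule: more than 3 transactions...
WINDOW_SECONDS = 60       # ...from one account within 60 seconds
LARGE_AMOUNT = 10_000     # hypothetical rule: any single large transaction


def detect_fraud(transactions):
    """Yield (account, timestamp, reason) alerts for an ordered transaction stream.

    Each transaction is a dict with "account", "ts" (seconds), and "amount".
    """
    recent = defaultdict(deque)   # keyed state: account -> timestamps in the window
    for txn in transactions:
        account, ts = txn["account"], txn["ts"]
        if txn["amount"] >= LARGE_AMOUNT:
            yield (account, ts, "large_amount")
        window = recent[account]
        window.append(ts)
        while window and window[0] <= ts - WINDOW_SECONDS:
            window.popleft()      # evict timestamps that fell out of the window
        if len(window) > MAX_TXNS_PER_WINDOW:
            yield (account, ts, "high_velocity")


transaction_log = [
    {"account": "A", "ts": 0, "amount": 20},
    {"account": "A", "ts": 10, "amount": 35},
    {"account": "B", "ts": 12, "amount": 12_000},
    {"account": "A", "ts": 20, "amount": 15},
    {"account": "A", "ts": 30, "amount": 50},
    {"account": "A", "ts": 200, "amount": 5},
]
alerts = list(detect_fraud(transaction_log))
```

Because the full transaction history stays in the log, the same function could later be re-run over past events with different thresholds, which is the "analyzed later" step in the list above.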

Final Thought

- Use Kafka for storing, streaming, and transporting data.

- Use Flink for processing, analyzing, and transforming data in real time.

- Best practice: Combine Kafka + Flink for an end-to-end real-time data processing pipeline.