Apache Spark: Overview

Apache Spark is an open-source, distributed computing framework designed for big data processing and analytics. It provides fast, scalable, and flexible data processing across large clusters and supports batch, streaming, machine learning, and graph processing.

Key Features of Apache Spark

1. Fast In-Memory Computing

- Unlike traditional Hadoop MapReduce, which writes intermediate results to disk between stages, Spark keeps working data in memory where possible, which can make it up to 100x faster for certain workloads.

- Supports disk-based processing for handling large datasets that don't fit in memory.
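To illustrate why keeping data in memory helps, here is a minimal pure-Python sketch. It is not Spark's API: `ToyDataset`, `slow_source`, and the `loads` counter are hypothetical names invented for this example. It only shows that caching a dataset lets repeated computations skip the expensive re-read.

```python
class ToyDataset:
    """Toy stand-in for a dataset whose source is expensive to read (e.g. disk)."""

    def __init__(self, loader):
        self._loader = loader   # function that "reads from disk"
        self._cached = None     # in-memory copy, filled by cache()
        self.loads = 0          # how many times the source was read

    def _read(self):
        self.loads += 1
        return list(self._loader())

    def cache(self):
        """Read the source once and keep the rows in memory."""
        if self._cached is None:
            self._cached = self._read()
        return self

    def rows(self):
        # Serve from memory when cached, otherwise re-read the source.
        return self._cached if self._cached is not None else self._read()

    def total(self):
        return sum(self.rows())

    def maximum(self):
        return max(self.rows())


def slow_source():
    # Hypothetical expensive read; here just a small range.
    return range(1, 6)


# Without caching, each computation re-reads the source.
uncached = ToyDataset(slow_source)
uncached.total()
uncached.maximum()

# With caching, the source is read once and reused.
cached = ToyDataset(slow_source).cache()
cached.total()
cached.maximum()

print(uncached.loads, cached.loads)
```

The uncached dataset reads its source once per computation, while the cached one reads it a single time. Iterative workloads that revisit the same data many times benefit most from this pattern.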

2. Unified Processing Engine

- Handles batch processing, real-time streaming, SQL queries, machine learning, and graph processing within a single framework.

3. Distributed Computing

- Runs on clusters managed by Hadoop YARN, Kubernetes, Mesos, or Spark's own standalone cluster manager.

- Uses Resilient Distributed Datasets (RDDs) for fault-tolerant parallel processing.
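The idea of partitioned, parallel processing can be sketched in plain Python. This is a toy model, not Spark code: threads stand in for executors, and the helper names (`split_into_partitions`, `sum_of_squares`, `parallel_sum_of_squares`) are assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Split a list into num_partitions roughly equal contiguous chunks."""
    size, extra = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        end = start + size + (1 if i < extra else 0)
        partitions.append(data[start:end])
        start = end
    return partitions


def sum_of_squares(partition):
    """Work done independently on one partition."""
    return sum(x * x for x in partition)


def parallel_sum_of_squares(data, num_partitions=4):
    partitions = split_into_partitions(data, num_partitions)
    # Threads stand in for executors; a real cluster uses separate processes and machines.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_results = list(pool.map(sum_of_squares, partitions))
    # Combine the per-partition results into the final answer.
    return sum(partial_results)


numbers = list(range(1, 11))
result = parallel_sum_of_squares(numbers, num_partitions=3)
print(result)
```

Each partition is processed without reference to the others, and only the small partial results are combined at the end. That independence is what lets the same computation spread across many machines.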

4. Supports Multiple Programming Languages

- Written in Scala, with APIs for Scala, Java, Python (PySpark), and R (SparkR).

5. Built-in Libraries for Data Analytics

- Spark SQL – Query structured data using SQL and DataFrames.

- Spark Streaming – Process real-time data streams.

- MLlib – Machine learning library for scalable ML algorithms.

- GraphX – Graph processing for social networks and recommendation systems.

6. Real-time Stream Processing

- Processes streams as a series of small batches (micro-batches) by default; Structured Streaming also offers an experimental continuous processing mode.

- Integrates with Apache Kafka and other streaming sources and sinks.
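The micro-batch model can be illustrated with a short pure-Python sketch. It is a simplification under stated assumptions: batches here are cut by event count, whereas Spark cuts them by time interval, and `micro_batches`, `running_totals`, and the sample `stream` are hypothetical names and data.

```python
from itertools import islice


def micro_batches(events, batch_size):
    """Yield consecutive lists of up to batch_size events from an iterable."""
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def running_totals(events, batch_size):
    """Process a stream batch by batch, carrying state between batches."""
    total = 0
    totals = []
    for batch in micro_batches(events, batch_size):
        total += sum(batch)  # ordinary batch-style computation on a small slice
        totals.append(total)
    return totals


stream = [3, 1, 4, 1, 5, 9, 2]  # hypothetical event values
totals = running_totals(stream, batch_size=3)
print(totals)
```

Results are updated once per batch rather than once per event, so latency is bounded below by the batch interval. That is the trade-off behind the micro-batch design.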

7. Integration with Big Data Ecosystem

- Works with HDFS, Hive, Cassandra, S3, MongoDB, PostgreSQL, Kafka, Elasticsearch, and more.

8. Scalability & Fault Tolerance

- Automatic fault recovery via RDD lineage (lost data can be recomputed).

- Scales from a single node to thousands of nodes.
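Lineage-based recovery can be sketched as follows. This is a toy model rather than Spark's implementation: `LineageDataset`, `lose_partition`, and the other names are invented for the example, and the source partitions are assumed to live in durable storage so they are never lost.

```python
class LineageDataset:
    """Toy dataset that remembers how each partition was derived."""

    def __init__(self, partitions=None, parent=None, fn=None):
        self.parent = parent  # upstream dataset, or None for a source
        self.fn = fn          # per-element function applied to the parent
        if partitions is not None:
            self._partitions = dict(enumerate(partitions))
            self.num_partitions = len(partitions)
        else:
            self._partitions = {}
            self.num_partitions = parent.num_partitions

    def map(self, fn):
        """Return a new dataset; the current one is left unchanged."""
        return LineageDataset(parent=self, fn=fn)

    def get_partition(self, index):
        # Recompute from the parent only when the partition is not held locally.
        if index not in self._partitions:
            if self.parent is None:
                raise KeyError(f"source partition {index} is gone")
            parent_rows = self.parent.get_partition(index)
            self._partitions[index] = [self.fn(row) for row in parent_rows]
        return self._partitions[index]

    def lose_partition(self, index):
        """Simulate an executor failure that drops one computed partition."""
        self._partitions.pop(index, None)

    def collect(self):
        return [row for i in range(self.num_partitions)
                for row in self.get_partition(i)]


source = LineageDataset(partitions=[[1, 2], [3, 4]])
doubled = source.map(lambda x: x * 2)

before = doubled.collect()
doubled.lose_partition(1)  # pretend the node holding partition 1 failed
after = doubled.collect()  # partition 1 is rebuilt from its parent

print(before, after)
```

Only the lost partition is recomputed, by replaying the recorded transformation on the matching parent partition. No replica of the derived data is needed.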

Apache Spark Architecture

1. Driver Program

- The main entry point of a Spark application.

- Creates the SparkContext (or a SparkSession, which wraps it) and manages job execution.

2. Cluster Manager

- Allocates cluster resources to Spark applications. Supported managers are Hadoop YARN, Kubernetes, Mesos, and Spark's standalone manager.

3. Executors

- Processes launched on worker nodes that run tasks, cache data, and return results to the driver.

4. Resilient Distributed Datasets (RDD)

- Immutable distributed collections of objects processed in parallel.

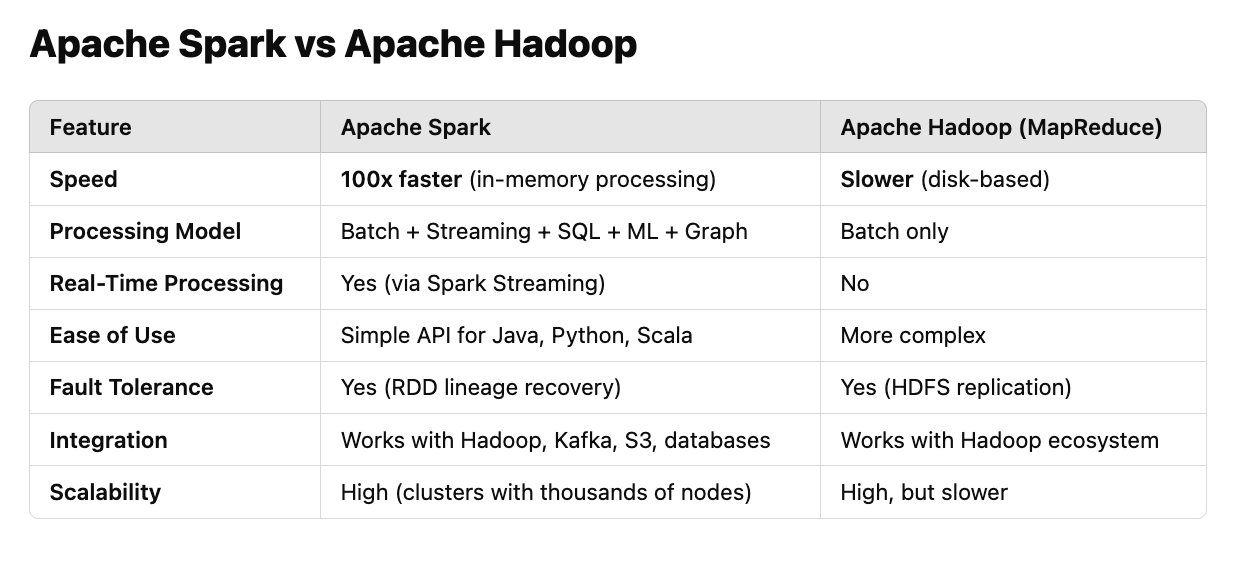

Apache Spark vs Apache Hadoop

| Feature | Apache Spark | Apache Hadoop (MapReduce) |
|---------|----------------|----------------|
| Speed | Up to 100x faster for some workloads (in-memory processing) | Slower (writes intermediate results to disk) |
| Processing Model | Batch + Streaming + SQL + ML + Graph | Batch only |
| Real-Time Processing | Near real-time (via Spark Streaming) | No |
| Ease of Use | High-level APIs for Scala, Java, Python, and R | More complex (lower-level map and reduce code) |
| Fault Tolerance | Yes (RDD lineage recovery) | Yes (task re-execution, HDFS replication) |
| Integration | Works with Hadoop, Kafka, S3, databases | Works with Hadoop ecosystem |
| Scalability | High (clusters with thousands of nodes) | High, but slower |

When to Use Apache Spark?

Use Apache Spark if:

- You need high-speed big data processing with real-time capabilities.

- You are working with machine learning, data science, or advanced analytics.

- Your data comes from Kafka, databases, or HDFS, and you need stream + batch processing.

- You require SQL-based queries on big data (Spark SQL).

Do NOT use Apache Spark if:

- Your dataset is small and fits on a single machine (a relational database is usually a better fit).

- You don't need real-time or distributed computing (single-machine tools like pandas are easier).

- You need very low-latency, event-at-a-time processing (Apache Flink is often a better fit for that).

Final Thoughts

Apache Spark is a powerful, high-speed big data processing framework designed for distributed computing, near-real-time analytics, and machine learning. It often outperforms Hadoop MapReduce, especially on iterative and in-memory workloads, and integrates well with modern data platforms.