Apache Spark vs Apache Hadoop vs Apache Flink vs Apache Superset vs Apache Kafka

These tools are complementary rather than competing: each serves a different purpose in a big data ecosystem. The sections below explain how they fit together in a workflow, what each is best at, and when to use each.

How They Work Together: Workflow

Step 1: Data Ingestion & Streaming (Kafka)

- Apache Kafka collects, buffers, and transmits data streams in real time.

- Works as a message broker to decouple producers and consumers.

- Example: Logs, IoT sensor data, transactions, or user interactions are streamed into Kafka.
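
The decoupling described above can be sketched with a toy in-memory log. This is not the Kafka client API: `ToyLog`, `produce`, and `poll` are hypothetical names chosen for illustration, and the sketch only models one idea, namely that producers append to a log while each consumer group tracks its own read position.

```python
from collections import defaultdict


class ToyLog:
    """In-memory stand-in for one topic partition (hypothetical, not the Kafka API)."""

    def __init__(self):
        self._records = []                 # append-only list of messages
        self._offsets = defaultdict(int)   # next offset to read, per consumer group

    def produce(self, message):
        """Append a message and return its offset."""
        self._records.append(message)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        """Return unread messages for `group` and advance only that group's offset."""
        start = self._offsets[group]
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


log = ToyLog()
for event in ({"sensor": "s1", "temp": 21.5}, {"sensor": "s2", "temp": 19.0}):
    log.produce(event)

# Two independent consumers read the same data at their own pace.
archiver_batch = log.poll("archiver")
alerting_batch = log.poll("alerting", max_records=1)
```

Because each group keeps its own offset, a slow consumer does not hold back a fast one, and the producer never needs to know who is reading.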

Step 2: Data Storage (Hadoop)

- Apache Hadoop (HDFS) stores massive volumes of data.

- Can handle structured, semi-structured, and unstructured data.

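- Example: Raw data from Kafka is written to HDFS for long-term storage, typically by a sink connector or a consumer job, since Kafka itself does not push data to HDFS.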
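
As a rough illustration of the archival step, the sketch below writes raw records as JSON lines into date-partitioned directories. It assumes a local temporary directory as a stand-in for an HDFS root, and the `archive` and `partition_path` helpers, the `events` topic name, and the record fields are all hypothetical. The `dt=YYYY-MM-DD` directory naming follows the common Hive-style partition convention.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def partition_path(root, topic, event_ts):
    """Build a Hive-style date partition directory for an event timestamp (epoch seconds)."""
    day = datetime.fromtimestamp(event_ts, tz=timezone.utc).strftime("%Y-%m-%d")
    return Path(root) / topic / f"dt={day}"


def archive(root, topic, records):
    """Append each record as one JSON line to the file for its day; return files written."""
    written = set()
    for record in records:
        directory = partition_path(root, topic, record["ts"])
        directory.mkdir(parents=True, exist_ok=True)
        target = directory / "part-0000.jsonl"
        with target.open("a", encoding="utf-8") as handle:
            handle.write(json.dumps(record) + "\n")
        written.add(target)
    return sorted(written)


# A local temp directory stands in for an HDFS root (assumption for this sketch).
root = tempfile.mkdtemp()
records = [
    {"ts": 1700000000, "user": "a", "action": "click"},  # falls on 2023-11-14 UTC
    {"ts": 1700090000, "user": "b", "action": "view"},   # falls on 2023-11-15 UTC
]
files = archive(root, "events", records)
```

Partitioning by date keeps later batch jobs cheap, because a job that needs one day of data can read a single directory instead of scanning the whole archive.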

Step 3: Data Processing & Analytics (Spark/Flink)

- Apache Spark processes large-scale batch data in memory and handles near-real-time workloads through micro-batch streaming.

- Apache Flink processes real-time streaming data with low latency.

- Example: Spark performs batch analytics, while Flink handles real-time fraud detection.
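
The difference between the two processing styles can be sketched in plain Python. Neither function uses the Spark or Flink APIs. The function names, the sample transactions, and the `limit` threshold are assumptions made for illustration, and the "fraud" rule is deliberately simplistic.

```python
from collections import defaultdict

transactions = [
    {"account": "A", "amount": 40.0},
    {"account": "B", "amount": 900.0},
    {"account": "A", "amount": 35.0},
    {"account": "B", "amount": 250.0},
]


def batch_totals(rows):
    """Batch style: the full dataset is available, so aggregate it in one pass."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["account"]] += row["amount"]
    return dict(totals)


def stream_alerts(events, limit=1000.0):
    """Streaming style: keep running state per key and react as each event arrives."""
    running = defaultdict(float)
    for event in events:
        running[event["account"]] += event["amount"]
        if running[event["account"]] > limit:
            yield event["account"], running[event["account"]]


totals = batch_totals(transactions)
alerts = list(stream_alerts(iter(transactions)))
```

The batch function answers "what happened overall" after the data has landed, while the streaming function can raise an alert at the moment the fourth transaction arrives.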

Step 4: Data Querying & Visualization (Superset)

- Apache Superset provides a BI dashboard to visualize data.

- Supports SQL-based queries over processed datasets.

- Example: Business teams use Superset to analyze processed data and gain insights.
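
A dashboard chart ultimately boils down to a SQL aggregate over a processed table. The sketch below uses Python's built-in `sqlite3` module as a stand-in for whatever SQL engine sits behind the dashboard. The `daily_orders` table and its columns are hypothetical, and nothing here is Superset's own API.

```python
import sqlite3

# In-memory database standing in for a warehouse table of processed results.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_orders (day TEXT, region TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO daily_orders VALUES (?, ?, ?)",
    [
        ("2024-01-01", "EU", 120.0),
        ("2024-01-01", "US", 200.0),
        ("2024-01-02", "EU", 80.0),
        ("2024-01-02", "US", 150.0),
    ],
)

# The kind of query a "revenue by region" bar chart would issue.
chart_rows = conn.execute(
    """
    SELECT region, SUM(revenue) AS total_revenue
    FROM daily_orders
    GROUP BY region
    ORDER BY total_revenue DESC
    """
).fetchall()
```

Keeping the heavy processing upstream and exposing small, pre-aggregated tables like this one is what lets dashboards stay interactive.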

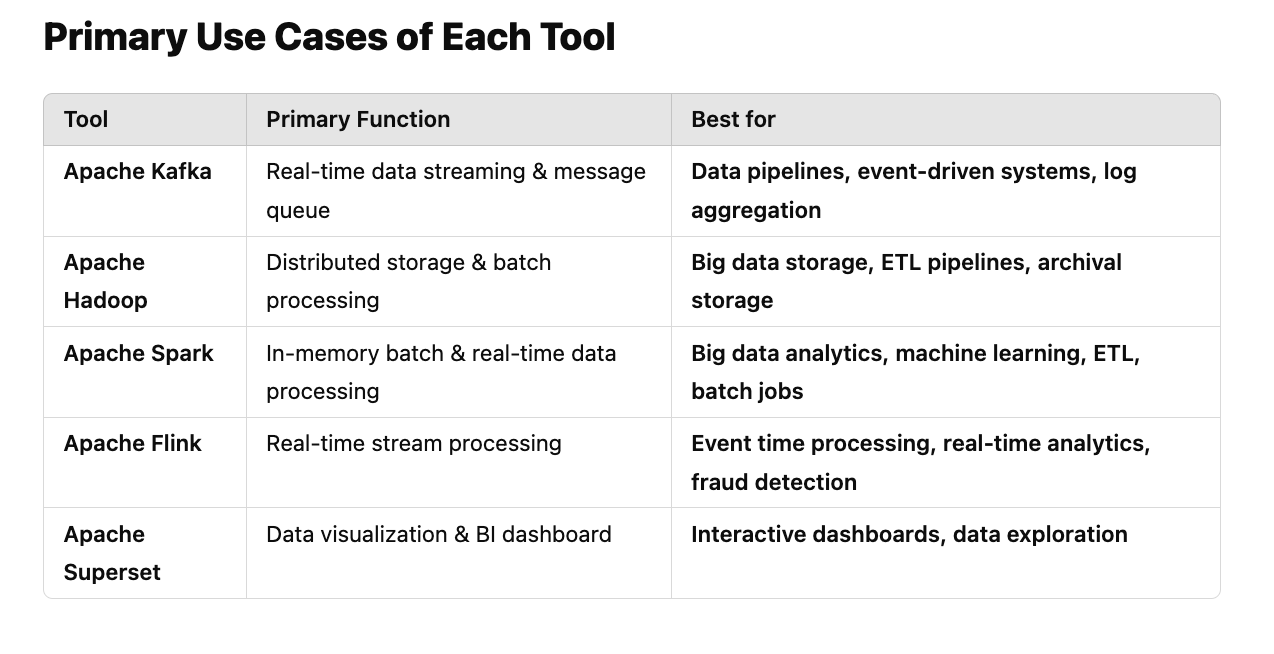

Primary Use Cases of Each Tool

| Tool | Primary Function | Best For |
|------|------------------|----------|
| Apache Kafka | Real-time data streaming & message queue | Data pipelines, event-driven systems, log aggregation |
| Apache Hadoop | Distributed storage & batch processing | Big data storage, ETL pipelines, archival storage |
| Apache Spark | In-memory batch & real-time data processing | Big data analytics, machine learning, ETL, batch jobs |
| Apache Flink | Real-time stream processing | Event time processing, real-time analytics, fraud detection |
| Apache Superset | Data visualization & BI dashboard | Interactive dashboards, data exploration |

When to Use Each Tool (Detailed Scenarios)

Use Apache Kafka When:

- You need real-time streaming and event-driven architecture.

- You want to decouple microservices in a distributed system.

- You need to ingest data from multiple sources (e.g., logs, IoT, financial transactions).

- Example: Uber uses Kafka to handle millions of ride events per second.

Use Apache Hadoop When:

- You need cheap and scalable storage for huge datasets.

- You have historical big data that needs processing later.

- You are running batch ETL pipelines.

- Example: Facebook stores user data in Hadoop for analysis.

Use Apache Spark When:

- You need fast in-memory batch processing.

- You want to run complex machine learning models at scale.

- You need SQL-based querying over big data.

- Example: Netflix uses Spark for real-time recommendation systems.

Use Apache Flink When:

- You need real-time event-driven processing.

- You need low-latency stream processing: Flink processes events one at a time, whereas Spark's streaming engines default to micro-batches.

- You are working with IoT, financial transactions, or monitoring logs.

- Example: Alibaba uses Flink for real-time fraud detection.
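
Event-time processing, one of Flink's core strengths, means grouping events by when they happened rather than when they arrived. The sketch below is a minimal plain-Python illustration of tumbling event-time windows. The function name, the event fields, and the 60-second window are assumptions, and it omits the watermark mechanism that Flink uses to decide when a window is complete.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds=60):
    """Count events per (key, window), assigning each event by its own timestamp."""
    counts = defaultdict(int)
    for event in events:
        # Round the event timestamp down to the start of its window.
        window_start = event["ts"] - event["ts"] % window_seconds
        counts[(event["key"], window_start)] += 1
    return dict(counts)


# Events in arrival order; the third one arrives late but belongs to the first window.
events = [
    {"key": "card-1", "ts": 5},
    {"key": "card-1", "ts": 65},
    {"key": "card-1", "ts": 50},
    {"key": "card-2", "ts": 70},
]
window_counts = tumbling_window_counts(events)
```

The late event with timestamp 50 is still counted in the window starting at 0, which is the behavior a processing-time approach would get wrong.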

Use Apache Superset When:

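- You want to visualize big data through SQL engines such as Hive, Spark SQL, Presto/Trino, or Druid. Superset queries SQL-speaking databases, so data in Hadoop or Kafka must first be exposed through one of these engines.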

- You need an open-source alternative to Tableau/Power BI.

- You need an interactive SQL-based BI tool.

- Example: Airbnb uses Superset for internal business analytics.

Final Thoughts

These tools complement each other:

- Kafka → Hadoop → Spark/Flink → Superset forms a complete data pipeline.

- Use Kafka for streaming, Hadoop for storage, Spark/Flink for processing, and Superset for visualization.

- Choose Spark for batch jobs and Flink for real-time analytics.