Kubernetes Ingress Tutorial For Beginners

Kubernetes Ingress is a resource for defining rules that route traffic from external sources to the services in a Kubernetes cluster.

In this Kubernetes ingress tutorial series, you will learn the concepts of the ingress resource and the ingress controllers used for routing external traffic to Kubernetes deployments. We will also cover advanced ingress routing using the Istio ingress service gateway.

Typically, a custom Nginx or HAProxy Kubernetes deployment is exposed as a service to proxy external traffic to internal cluster services, with the routing rules baked into the pod and added as a configmap. Kubernetes ingress works in a similar way, except that the routing rules are maintained as Kubernetes ingress objects. The big advantage is that routing rules can be changed dynamically without redeploying the proxy pods.

Kubernetes Ingress Tutorial

To get started, you need to understand two key things.

- Kubernetes Ingress

- Kubernetes Ingress Controller.

Let's have a look at each of these concepts.

Kubernetes Ingress:

Kubernetes Ingress is a native Kubernetes resource in which you define rules to route traffic from an external source to service endpoints residing inside the cluster. It requires an ingress controller to apply the routing rules specified in the ingress object. An ingress object looks like the following.

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: test-ingress
      namespace: dev
    spec:
      rules:
      - host: test.apps.example.com
        http:
          paths:
          - backend:
              serviceName: hello-service
              servicePort: 80

The above declaration means that all calls to test.apps.example.com should hit the service named hello-service residing in the dev namespace.
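The manifest above uses the extensions/v1beta1 API, which was removed for Ingress in Kubernetes 1.22. On newer clusters the same rule would be written against networking.k8s.io/v1, roughly as in the following sketch. The path and pathType values are assumptions, because the original manifest does not specify a path.

```yaml
# Sketch: the same routing rule expressed in the networking.k8s.io/v1 API.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-ingress
  namespace: dev
spec:
  rules:
    - host: test.apps.example.com
      http:
        paths:
          - path: /            # assumption: match every path, as the original rule did
            pathType: Prefix   # pathType is required in v1; Prefix is assumed here
            backend:
              service:
                name: hello-service
                port:
                  number: 80
```

Depending on how the controller is installed, the object may also need spec.ingressClassName set to the controller's class. That field is left out here because the right value depends on your setup.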

Key things to understand about the ingress object:

- You should create ingress rules in the same namespace as the services they route to. An ingress object cannot route traffic to a service in a different namespace.

- An ingress object requires an ingress controller for routing traffic.

- External traffic does not hit the ingress API. Instead, it hits the ingress controller service.

Kubernetes Ingress Controller

An ingress controller is typically a proxy service deployed in the cluster. It is nothing but a Kubernetes deployment exposed through a service. The following are some of the ingress controllers available for Kubernetes.

- Nginx Ingress Controller (Community & From Nginx Inc)

- Traefik

- HAProxy

- Contour

- GKE Ingress Controller

Generally, Nginx is widely used as an ingress controller. Here is how an Nginx ingress controller works.

- The nginx.conf file inside the Nginx controller pod is generated from a Go template, which the controller fills in with the latest traffic routing values from the Kubernetes ingress API.

- The Nginx controller talks to the Kubernetes ingress API to check whether any traffic routing rules have been created.

- If it finds any ingress rules, it applies them to the Nginx controller configuration, that is, the nginx.conf file inside the pod, using the Go template.

If you connect to the pod using exec and check the /etc/nginx/nginx.conf file, you can see all the rules specified in the ingress object applied in the conf file.

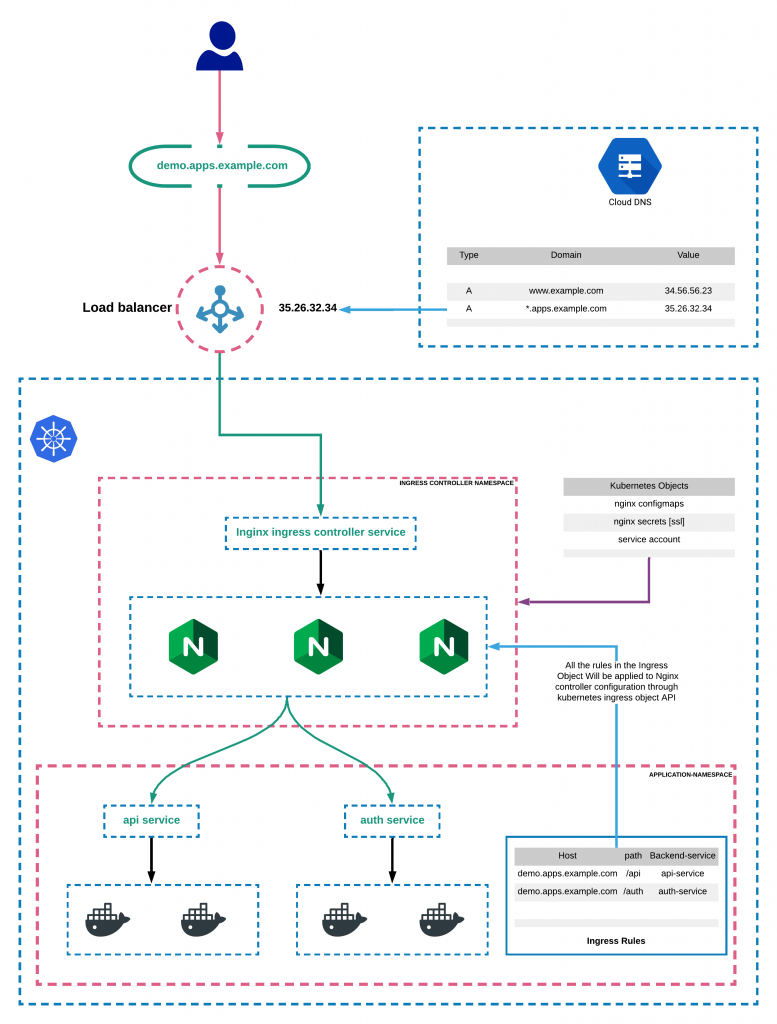

Here is the architecture diagram which explains the ingress setup on a kubernetes cluster.

In this tutorial series, we will look into all types of ingress solutions supported by Kubernetes.

Here is the list of Kubernetes ingress setup tutorials.

How To Set Up Ingress On Kubernetes Using Nginx Controller

In this tutorial, you will learn how to set up Kubernetes ingress using the Nginx ingress controller and how to route traffic to deployments using wildcard DNS.

If you want to understand how Kubernetes ingress works, please read this blog post on Kubernetes Ingress Tutorial.

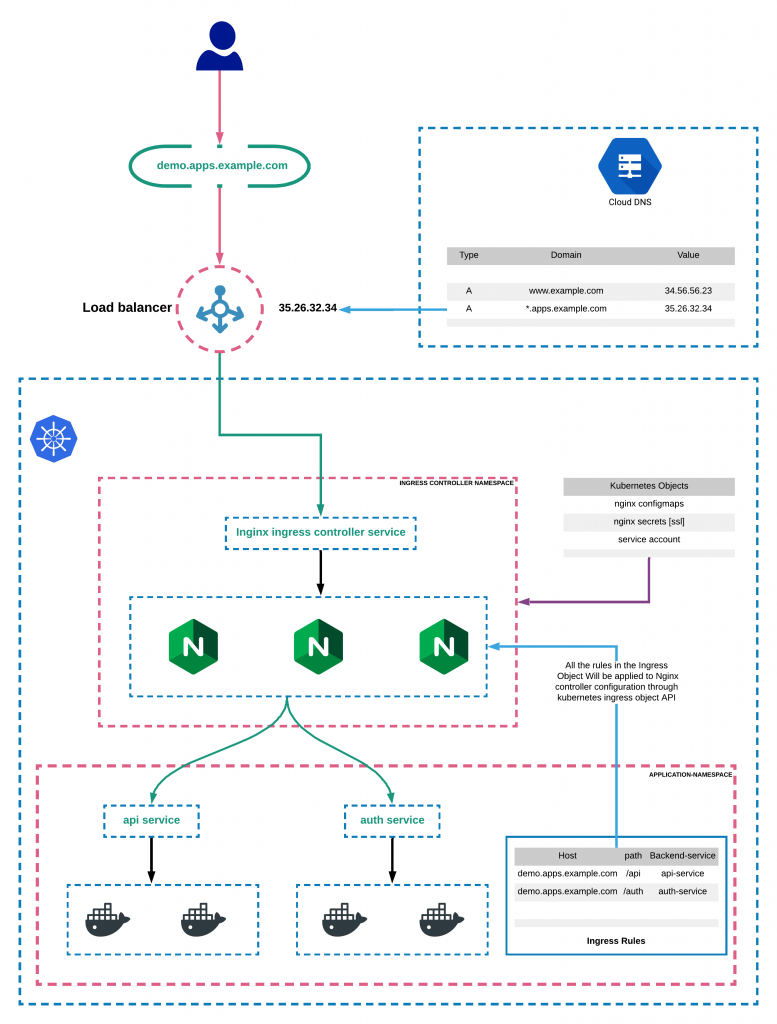

Here is an example architecture of Kubernetes ingress using Nginx ingress controller

Prerequisites:

- A Kubernetes cluster.

- The kubectl utility installed and authenticated to the Kubernetes cluster.

- Admin access to the Kubernetes cluster.

- A valid domain to point to the ingress controller load balancer.

If you are on Google Cloud, assign admin permissions to your account to enable cluster roles.

    ACCOUNT=$(gcloud info --format='value(config.account)')
    kubectl create clusterrolebinding owner-cluster-admin-binding \
        --clusterrole cluster-admin \
        --user "$ACCOUNT"

Note:

This tutorial was tested on a Google Cloud GKE cluster. It should work on other cloud environments as well, but if you run into errors you may need to adjust the setup.
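The cluster role binding created by the kubectl command above can also be kept as a manifest and applied with kubectl apply -f. The following is a sketch of the equivalent object; the user name is a placeholder, and you would substitute the account that gcloud reports for you.

```yaml
# Sketch: declarative form of the owner-cluster-admin-binding created above.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: owner-cluster-admin-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: you@example.com   # placeholder: replace with your Google Cloud account
```

Keeping the binding in a file makes it easier to review and to recreate on another cluster, at the cost of hard-coding the account name.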

Setup Nginx Ingress Controller

There are two nginx ingress controllers.

- Nginx ingress controller by kubernetes community

- Nginx ingress controller by Nginx Inc

We will be using the Nginx controller from the kubernetes community.

The ingress controller needs a dedicated namespace, a service account, cluster role bindings, configmaps, and so on. You can create all of these Kubernetes objects using the YAML file from the official ingress repo.

Let’s deploy the ingress controller using the mandatory.yaml file from the official repo. It contains the consolidated list of Kubernetes objects required for the Nginx controller.

Create the Nginx controller deployment using kubectl.

    kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

Check the ingress controller pods to make sure the controller is set up correctly.

    kubectl get pods -n ingress-nginx

Setup LoadBalancer Service For Ingress Controller

The next step is to create a service of type LoadBalancer to expose the nginx controller deployment outside the cluster.

Step 1: Create a project directory locally and switch to that directory.

    mkdir ingress-deployment && cd ingress-deployment

Step 2: Create a file named nginx-ingress.yaml

    vi nginx-ingress.yaml

Step 3: Copy the following contents to the file.

Note:

The labels under the selector are very important for integrating with the nginx controller deployment. They must match the labels on the nginx controller pods so that the service forwards traffic to them.

    kind: Service
    apiVersion: v1
    metadata:
      name: ingress-nginx
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      externalTrafficPolicy: Local
      type: LoadBalancer
      selector:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      ports:
        - name: http
          port: 80
          targetPort: http
        - name: https
          port: 443
          targetPort: https

Step 4: Create the ingress service.

    kubectl apply -f nginx-ingress.yaml

Step 5: Check that the created service is attached to the external load balancer.

    kubectl get svc -n ingress-nginx

Map A Domain Name To Loadbalancer IP

To make our ingress settings work, we need to map a domain name to the load balancer IP. You can do it in two ways.

Single DNS Mapping:

You can map a single domain directly as an A record to the load balancer IP. With this approach you have only one domain for the ingress controller and use path-based routing to reach multiple applications.

For example,

    www.example.com --> Loadbalancer IP

You can have path-based routing using this model.

A few examples,

    http://www.example.com/app1
    http://www.example.com/app2
    http://www.example.com/app1/api
    http://www.example.com/app2/api

Wildcard DNS Mapping:

If you map a wildcard DNS to the load balancer, you can have dynamic DNS endpoints through ingress.

For example,

    *.apps.example.com

This way you can have multiple dynamic subdomains through a single ingress controller, and each DNS name can have its own path-based routing.

For example,

    #URL one
    http://demo1.apps.example.com/api
    http://demo1.apps.example.com/api/v1
    http://demo1.apps.example.com/api/v2

    #URL two
    http://demo2.apps.example.com/api
    http://demo2.apps.example.com/api/v1
    http://demo2.apps.example.com/api/v2

For demo purposes, we have mapped a wildcard DNS to the LoadBalancer IP. How you configure this depends on your DNS provider.
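To make the host and path combinations above concrete, here is a sketch of a single ingress object that routes two subdomains of the wildcard domain to different services. It is written against the networking.k8s.io/v1 API rather than the older extensions/v1beta1 API used in the manifests of this tutorial. The ingress name and the three service names are hypothetical and only illustrate the shape of the rules.

```yaml
# Sketch: host-based and path-based routing under one wildcard domain.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: wildcard-demo-ingress      # hypothetical name
  namespace: dev
spec:
  rules:
    - host: demo1.apps.example.com
      http:
        paths:
          - path: /api/v2
            pathType: Prefix
            backend:
              service:
                name: demo1-api-v2   # hypothetical service
                port:
                  number: 80
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: demo1-api      # hypothetical service
                port:
                  number: 80
    - host: demo2.apps.example.com
      http:
        paths:
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: demo2-api      # hypothetical service
                port:
                  number: 80
```

When several Prefix paths match a request, the longest matching path takes precedence, so a request to demo1.apps.example.com/api/v2 would go to the v2 service while /api and /api/v1 would go to the general API service. All three services are assumed to live in the dev namespace alongside the ingress object.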

Setup A Demo Application

For testing purposes, we will deploy a demo application and add a ClusterIP service to it.

Also Read: Kubernetes deployment tutorial

Step 1: Create a namespace named dev

    kubectl create namespace dev

Step 2: Create a file named hello-app.yaml

Step 3: Copy the following contents and save the file.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hello-app
      namespace: dev
    spec:
      selector:
        matchLabels:
          app: hello
      replicas: 3
      template:
        metadata:
          labels:
            app: hello
        spec:
          containers:
          - name: hello
            image: "gcr.io/google-samples/hello-app:2.0"

Step 4: Create the deployment using kubectl

    kubectl create -f hello-app.yaml

Check the deployment status.

    kubectl get deployments -n dev

Step 5: Create a file named hello-app-service.yaml

Step 6: Copy the following contents and save the file.

    apiVersion: v1
    kind: Service
    metadata:
      name: hello-service
      namespace: dev
      labels:
        app: hello
    spec:
      type: ClusterIP
      selector:
        app: hello
      ports:
      - port: 80
        targetPort: 8080
        protocol: TCP

Step 7: Create the service using kubectl.

    kubectl create -f hello-app-service.yaml

Check the service status.

    kubectl get svc -n dev

Create Kubernetes Ingress Object

Now let’s create an ingress object to access our hello app using a DNS name. An ingress object is nothing but a set of routing rules.

If you are wondering how the ingress object is connected to the Nginx controller: the ingress controller pod connects to the Ingress API to check for rules and updates its nginx.conf accordingly.

Step 1: Create a file named ingress.yaml

Step 2: Copy the following contents and save the file.

Replace test.apps.example.info with your domain name. Here the assumption is that you have a wildcard DNS in the format *.apps.example.info

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: test-ingress
      namespace: dev
    spec:
      rules:
      - host: test.apps.example.info
        http:
          paths:
          - backend:
              serviceName: hello-service
              servicePort: 80

Step 3: Describe the created ingress object to check its configuration.

    kubectl describe ingress -n dev

Now if you try to access the test.apps.example.info domain (replace it with your domain name), you should be able to reach the sample app we deployed.
