“kservice” is most likely shorthand for a Knative Service (abbreviated ksvc in kubectl). The Knative Service is the core resource of Knative Serving, the Knative component for deploying and running serverless applications on Kubernetes.

A Knative Service is a higher-level resource that manages a Kubernetes Deployment on your behalf and adds extra configuration and capabilities. When you create a Knative Service, you specify a container image and optional settings such as environment variables and resource limits. When you apply the Service to your cluster, Knative Serving creates a Configuration and a Route for it. Each change to the Service's template produces a new, immutable Revision. Each Revision is backed by a Deployment and, by default, is scaled by the Knative Pod Autoscaler (KPA) based on incoming request traffic. The Kubernetes Horizontal Pod Autoscaler (HPA) can be selected instead.
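The autoscaler is chosen per Revision through annotations on the template. The sketch below switches a Service from the default KPA to CPU-based HPA scaling. It assumes the optional Knative HPA extension is installed, because that extension is not part of the default install. The service name and image are placeholders taken from the example later in this post.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: my-knative-service
spec:
  template:
    metadata:
      annotations:
        # The default class is "kpa.autoscaling.knative.dev", which scales on requests.
        # The HPA class below requires the Knative HPA extension to be installed.
        autoscaling.knative.dev/class: "hpa.autoscaling.knative.dev"
        # HPA scales on CPU (or memory) instead of request concurrency.
        autoscaling.knative.dev/metric: "cpu"
        # Target CPU utilization, in percent of the requested CPU.
        autoscaling.knative.dev/target: "80"
    spec:
      containers:
        - image: gcr.io/my-project/my-container-image
          resources:
            requests:
              # CPU utilization is measured relative to this request.
              cpu: 250m
```

Note that the HPA class does not scale to zero. Scale-to-zero requires the default KPA class.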

A key feature of Knative Services is that, with the default autoscaler, they scale to zero when there is no incoming traffic. On infrastructure that bills for actual usage, you pay only for the resources you use, rather than for a fixed number of servers or containers that run all the time.
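Scaling bounds and the per-pod concurrency target are also set as template annotations. In the sketch below, the specific numbers are illustrative and not recommendations. Scale-to-zero also depends on the cluster-wide enable-scale-to-zero setting in the config-autoscaler ConfigMap, which is enabled by default.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: my-knative-service
spec:
  template:
    metadata:
      annotations:
        # Allow scaling down to zero pods when idle. This is the KPA default and is written out for clarity.
        autoscaling.knative.dev/min-scale: "0"
        # Upper bound on the number of pods for this Revision (illustrative value).
        autoscaling.knative.dev/max-scale: "10"
        # Soft target of concurrent in-flight requests per pod (illustrative value).
        autoscaling.knative.dev/target: "50"
    spec:
      containers:
        - image: gcr.io/my-project/my-container-image
```

When a Service has scaled to zero, the next request must wait while a pod starts (a cold start). Setting min-scale to "1" keeps one warm pod, at the cost of resources that are always on.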

Knative Services also provide capabilities beyond those of a standard Kubernetes Deployment. For example, Knative injects environment variables such as PORT, K_SERVICE, K_CONFIGURATION, and K_REVISION into your container. It also adds a queue-proxy sidecar that reports request metrics to the autoscaler. Traffic reaches the Service through a pluggable networking layer such as Kourier, Contour, or Istio, and a Service can act as an event sink for Knative Eventing.
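The split between variables Knative injects and variables you define can be sketched in a manifest. This is a minimal sketch that reuses the placeholder image and variable from the example later in this post. It assumes the application reads its listening port from $PORT.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: my-knative-service
spec:
  template:
    spec:
      containers:
        - image: gcr.io/my-project/my-container-image
          ports:
            # Knative passes this value to the container as $PORT (8080 if omitted).
            # The application should listen on $PORT.
            - containerPort: 8080
          env:
            # Only user-defined variables belong here. Knative itself sets
            # PORT, K_SERVICE, K_CONFIGURATION and K_REVISION, and treats
            # those names as reserved.
            - name: MY_ENV_VAR
              value: "my-value"
```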

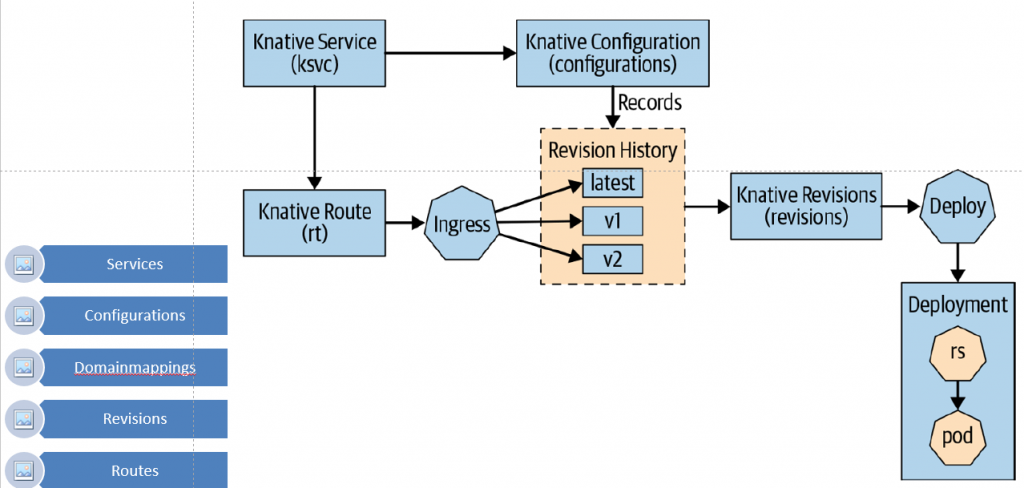

In addition to Services, Knative Serving defines several other resources, which a Service creates and manages for you:

- Configurations hold the desired state of your deployment. Each modification creates a new Revision.
- Revisions are immutable snapshots of code and configuration.
- Routes map a network endpoint to one or more Revisions and can split traffic between them, which lets you deploy multiple versions and shift traffic gradually.

Custom domain names are attached separately with a DomainMapping.
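Traffic splitting between versions is declared in the Service's traffic block, which Knative uses to program the Route. The sketch below assumes an earlier Revision exists. Its name, my-knative-service-00001, and the :v2 image tag are hypothetical. The percentages must add up to 100.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: my-knative-service
spec:
  template:
    spec:
      containers:
        # Hypothetical new image version; updating it creates a new Revision.
        - image: gcr.io/my-project/my-container-image:v2
  traffic:
    # Keep most traffic on a known-good Revision (hypothetical name).
    - revisionName: my-knative-service-00001
      percent: 80
    # Send the rest to the Revision produced by the current template.
    - latestRevision: true
      percent: 20
      # Optional tag: also exposes this Revision at its own tagged URL.
      tag: candidate
```

Shifting traffic later only requires editing the percentages. The Revisions themselves are not redeployed.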

An Example kservice

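```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: my-knative-service
spec:
  template:
    metadata:
      # Optional: names the Revision created from this template. It must be
      # prefixed with the Service name and changed whenever the template changes.
      name: my-knative-service-container
    spec:
      containers:
        - image: gcr.io/my-project/my-container-image
          env:
            - name: MY_ENV_VAR
              value: "my-value"
          resources:
            limits:
              memory: 512Mi
              cpu: 500m
```
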
In this example, we create a Knative Service named “my-knative-service” that runs the container image “gcr.io/my-project/my-container-image” from Google Container Registry. We also set an environment variable named “MY_ENV_VAR” with the value “my-value”, and we set CPU and memory limits for the container. The template's metadata.name, “my-knative-service-container”, names the resulting Revision, not the container.

When you apply this manifest to your cluster (for example with kubectl apply -f), Knative Serving creates a Configuration, a Route, and a first Revision. The Revision is backed by a Deployment that runs the specified container image, and by default it is scaled based on incoming traffic by the Knative Pod Autoscaler. Its pods receive the environment variable and resource limits declared in the template.

This is only a simple example. Knative Services can be configured in many other ways depending on your use case. For example, you can restrict a Service to cluster-internal traffic or adjust other networking settings. You can build your container image from source with a separate tool such as Tekton, since the original Knative Build component and its build templates have been deprecated. You can also split traffic between multiple Revisions using the Service's traffic block.
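Restricting a Service to cluster-internal traffic is one such networking setting. It is done with a label on the Service. This is a minimal sketch with the same placeholder names as above. Such a Service is typically reachable only at an internal address of the form http://my-knative-service.<namespace>.svc.cluster.local.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: my-knative-service
  labels:
    # Expose the Service only inside the cluster; no external URL is created.
    networking.knative.dev/visibility: cluster-local
spec:
  template:
    spec:
      containers:
        - image: gcr.io/my-project/my-container-image
```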

I’m a DevOps/SRE/DevSecOps/Cloud Expert passionate about sharing knowledge and experiences. I am working at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I reviewed, and SEO strategies at Wizbrand.

