Machine Learning: Data, Algorithms, and Models

Machine Learning (ML) is a dynamic field of artificial intelligence that focuses on enabling machines to learn from data, identify patterns, and make decisions with minimal human intervention. At the core of any machine learning system are three fundamental components: data, algorithms, and models. Understanding how these components interact and contribute to the development of intelligent systems is crucial for anyone involved in the field of machine learning. This blog provides a comprehensive overview of these key elements and their roles in the machine learning process.

1. Data: The Foundation of Machine Learning

Data is the foundational element of any machine learning system. In machine learning, data is used to train models, evaluate their performance, and make predictions. The quality, quantity, and relevance of the data directly impact the effectiveness of the machine learning model.

Types of Data in Machine Learning

- Structured Data:

- Structured data is highly organized and formatted in a way that is easily searchable in databases, typically in rows and columns (e.g., spreadsheets, relational databases).

- Examples: Customer information, transaction records, sensor data.

- Unstructured Data:

- Unstructured data lacks a predefined format, making it more challenging to analyze using traditional tools. It includes a wide range of data types such as text, images, and videos.

- Examples: Social media posts, email content, images, audio files.

- Semi-Structured Data:

- Semi-structured data is not as strictly organized as structured data but still contains tags or markers to separate elements, making it more manageable than purely unstructured data.

- Examples: XML files, JSON files.

The Role of Data in Machine Learning

- Training Data: The dataset used to train a machine learning model. It consists of input data (features) and corresponding output labels (in supervised learning). The model learns from this data to make predictions or classifications.

- Validation Data: A separate dataset used to tune the model’s hyperparameters and prevent overfitting. It helps in selecting the best model during the training process.

- Test Data: An independent dataset used to evaluate the model’s performance after it has been trained and validated. It provides an unbiased assessment of how well the model will perform on new, unseen data.

- Feature Engineering: The process of selecting, transforming, and creating input variables (features) from raw data to improve the performance of a machine learning model. Good feature engineering can significantly enhance model accuracy.

- Data Preprocessing: The steps taken to clean, transform, and prepare raw data for modeling. This includes handling missing values, scaling features, encoding categorical variables, and more.

2. Algorithms: The Heart of Machine Learning

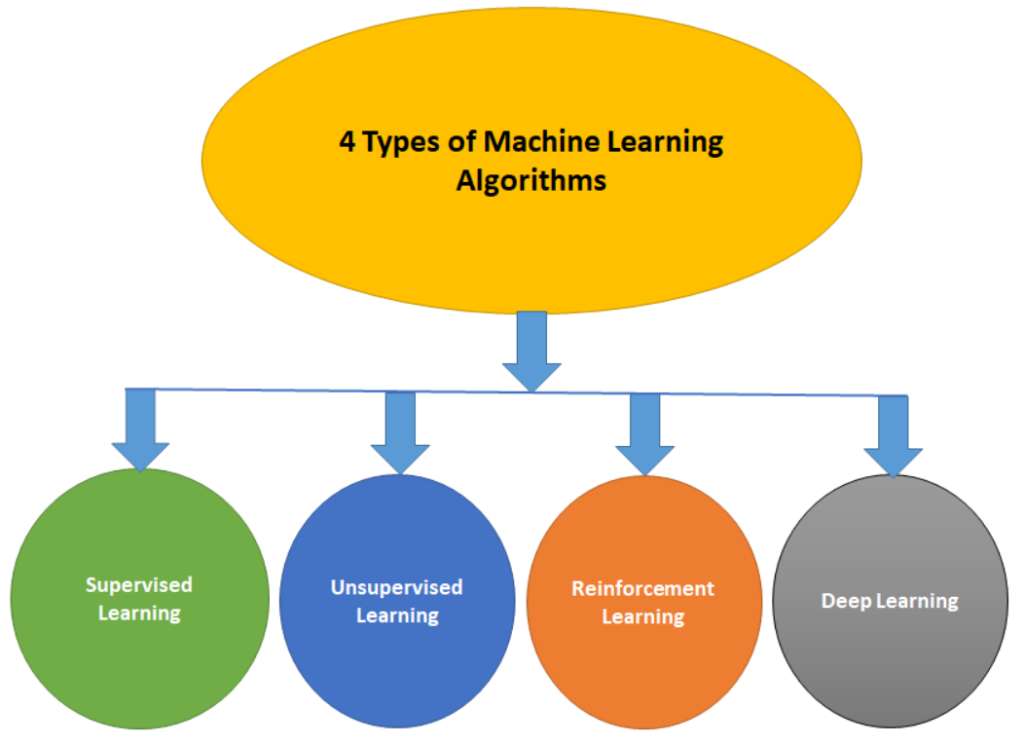

Algorithms are the mathematical or logical rules that guide the machine learning process. They define how the model learns from the data, how it makes predictions, and how it improves over time. Different types of algorithms are suited to different types of problems, and selecting the right algorithm is crucial for building an effective machine learning model.

Types of Machine Learning Algorithms

- Supervised Learning Algorithms:

- These algorithms learn from labeled data, where the input-output pairs are provided. The goal is to learn a mapping from inputs to outputs that can be used to predict outcomes on new data.

- Examples: Linear Regression, Logistic Regression, Decision Trees, Support Vector Machines (SVM), k-Nearest Neighbors (k-NN), Neural Networks.

- Unsupervised Learning Algorithms:

- Unsupervised learning algorithms work with unlabeled data. They seek to find hidden patterns or structures in the data without explicit instructions on what to look for.

- Examples: k-Means Clustering, Hierarchical Clustering, Principal Component Analysis (PCA), Autoencoders.

- Reinforcement Learning Algorithms:

- In reinforcement learning, an agent learns to make decisions by taking actions in an environment and receiving feedback in the form of rewards or penalties. The goal is to maximize cumulative rewards over time.

- Examples: Q-Learning, Deep Q-Networks (DQNs), SARSA, Policy Gradient Methods.

- Ensemble Learning Algorithms:

- Ensemble learning combines multiple models to improve the overall performance. The idea is that by aggregating the predictions of several models, the ensemble can outperform any individual model.

- Examples: Random Forest, Gradient Boosting Machines (GBM), AdaBoost, XGBoost.

The Role of Algorithms in Machine Learning

- Learning Process: Algorithms guide the learning process by adjusting the model’s parameters to minimize errors and improve predictions. This is typically done through optimization techniques like gradient descent.

- Model Selection: Choosing the right algorithm depends on the problem type (classification, regression, clustering, etc.), the nature of the data, and the specific requirements of the task. Understanding the strengths and weaknesses of different algorithms is key to making informed choices.

- Hyperparameter Tuning: Algorithms often come with hyperparameters—settings that need to be adjusted to optimize model performance. Tuning these hyperparameters is crucial for achieving the best possible results.

- Generalization: A good algorithm helps the model generalize well to new, unseen data by avoiding overfitting (fitting the training data too closely) and underfitting (failing to capture the underlying patterns in the data).

3. Models: The Output of Machine Learning

Models are the output of the machine learning process. A model is essentially a function that has been trained by an algorithm on a dataset to make predictions or decisions based on new input data. Once trained, the model can be used to generate predictions on new data.

Types of Machine Learning Models

- Regression Models:

- Regression models predict continuous outcomes based on input data. These models are commonly used in forecasting and financial predictions.

- Examples: Linear Regression, Ridge Regression, Lasso Regression.

- Classification Models:

- Classification models predict categorical outcomes, such as whether an email is spam or not, or whether a customer will churn. They assign input data to one of several predefined categories.

- Examples: Logistic Regression, Support Vector Machines, Decision Trees, Neural Networks.

- Clustering Models:

- Clustering models group similar data points together based on their features. These models are often used in customer segmentation and exploratory data analysis.

- Examples: k-Means, Hierarchical Clustering, DBSCAN.

- Dimensionality Reduction Models:

- Dimensionality reduction models reduce the number of features in a dataset while preserving as much information as possible. They are useful for visualizing high-dimensional data and improving the efficiency of other algorithms.

- Examples: Principal Component Analysis (PCA), t-SNE, Autoencoders.

- Reinforcement Learning Models:

- Reinforcement learning models are used in environments where decisions need to be made sequentially, with the goal of maximizing a cumulative reward. These models are often used in robotics, game playing, and autonomous systems.

- Examples: Q-Learning Models, Policy Networks.

The Role of Models in Machine Learning

- Prediction: The primary role of a machine learning model is to make predictions on new, unseen data. The quality of these predictions depends on the data used to train the model and the effectiveness of the algorithm.

- Interpretability: Some models, like decision trees and linear regression, are easy to interpret, allowing users to understand how predictions are made. Others, like deep neural networks, are more complex and often considered “black boxes,” making interpretation more challenging.

- Model Evaluation: Once a model is trained, it must be evaluated to determine its accuracy, precision, recall, F1-score, or other relevant metrics, depending on the task. Model evaluation helps ensure that the model will perform well on real-world data.

- Deployment: After evaluation, the model can be deployed in a production environment where it will make predictions on live data. Deployment requires monitoring to ensure that the model continues to perform well over time.

Interaction Between Data, Algorithms, and Models

- Training: During the training phase, data is fed into the algorithm, which then adjusts the model’s parameters to minimize the error between the model’s predictions and the actual outcomes. This process is iterative and continues until the model reaches an acceptable level of performance.

- Validation and Testing: After training, the model is validated on a separate dataset to fine-tune its hyperparameters and prevent overfitting. Finally, the model is tested on a third dataset to evaluate its performance and ensure it generalizes well to new data.

- Feedback Loop: In a typical machine learning workflow, the interaction between data, algorithms, and models is iterative. Insights from the model’s performance on validation and test data can lead to further data preprocessing, algorithm selection, or model refinement, creating a feedback loop that drives continuous improvement.

Here’s a tabular comparison highlighting the differences between Data, Algorithms, and Models in Machine Learning:

| Aspect | Data | Algorithms | Models |

|---|---|---|---|

| Definition | The raw information or input used to train a model. | The set of mathematical or logical rules used to learn from data and make predictions. | The output of the machine learning process; a trained function that makes predictions based on new input data. |

| Role | Provides the foundation for training and validating machine learning models. | Guides the learning process by adjusting model parameters to fit the data. | Used to make predictions or decisions based on new, unseen data. |

| Types | Structured, Unstructured, Semi-Structured | Supervised (e.g., Linear Regression, SVM), Unsupervised (e.g., k-Means, PCA), Reinforcement (e.g., Q-Learning) | Regression (e.g., Linear Regression), Classification (e.g., Decision Trees), Clustering (e.g., k-Means) |

| Input/Output | Input: Raw data (features and, in supervised learning, labels). | Input: Data (features and, in supervised learning, labels). Output: A trained model. | Input: New, unseen data. Output: Predictions or classifications. |

| Examples | Customer purchase history, images, text documents | Decision Trees, Neural Networks, k-Means Clustering | A model predicting house prices, a model classifying emails as spam or not spam. |

| Evaluation | Quality assessed by relevance, accuracy, completeness, and amount. | Assessed by how well it minimizes error and improves the model’s performance. | Evaluated using metrics like accuracy, precision, recall, F1-score, or MSE, depending on the task. |

| Importance | Critical for the quality and reliability of the model; “Garbage in, garbage out.” | Essential for determining how the model learns from data and adapts to it. | The final product that delivers predictions; its accuracy and reliability determine the success of the machine learning task. |

| Modification | Preprocessing: Cleaning, transforming, and encoding data. | Tuning: Adjusting hyperparameters, selecting appropriate algorithms for the problem. | Training: Adjusting parameters based on algorithms; fine-tuning during validation. |

| Examples of Use | Raw data collected from sensors for a predictive maintenance system. | Algorithms like Gradient Boosting for improving model accuracy in a classification task. | A neural network model used for image recognition in a facial recognition system. |

Conclusion

Machine learning is a powerful tool for solving complex problems, and its success relies on the effective interaction of data, algorithms, and models. Data serves as the foundation, providing the necessary inputs for training. Algorithms act as the engine, processing the data and fine-tuning the model. Finally, the model is the output that delivers predictions, classifications, or decisions based on new data.

Understanding these components and how they work together is crucial for anyone looking to build, evaluate, or deploy machine learning systems. Whether you’re a data scientist, engineer, or business leader, mastering the relationship between data, algorithms, and models will enable you to harness the full potential of machine learning.

I’m a DevOps/SRE/DevSecOps/Cloud Expert passionate about sharing knowledge and experiences. I am working at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I reviewed , and SEO strategies at Wizbrand.

Do you want to learn Quantum Computing?

Please find my social handles as below;

Rajesh Kumar Personal Website

Rajesh Kumar at YOUTUBE

Rajesh Kumar at INSTAGRAM

Rajesh Kumar at X

Rajesh Kumar at FACEBOOK

Rajesh Kumar at LINKEDIN

Rajesh Kumar at PINTEREST

Rajesh Kumar at QUORA

Rajesh Kumar at WIZBRAND