What is Apache Beam?

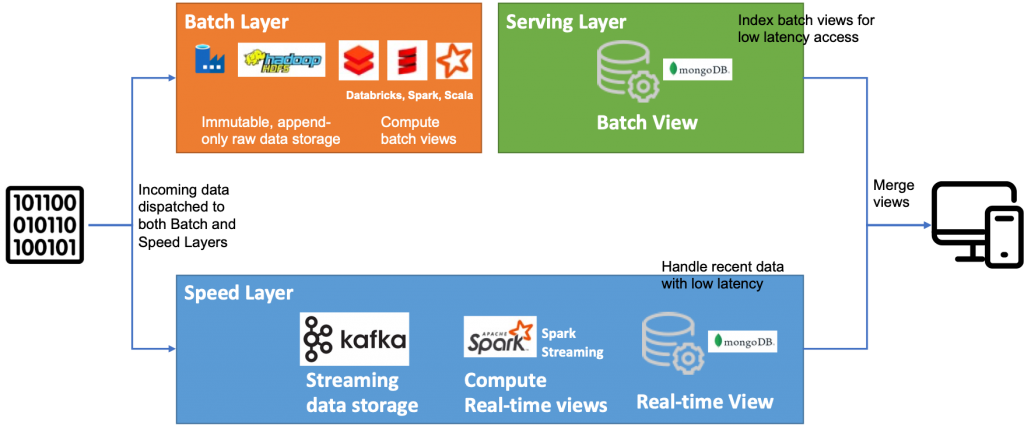

Apache Beam is an open-source unified programming model and a set of APIs for building batch and streaming data processing pipelines. It provides a way to define data processing tasks that can run on various distributed processing backends, such as Apache Spark, Apache Flink, Google Cloud Dataflow, and more. The goal of Apache Beam is to provide a portable and consistent way to express data processing pipelines regardless of the underlying execution engine.

Top 10 use cases of Apache Beam:

Here are the top 10 use cases of Apache Beam:

- Real-Time Analytics: Apache Beam is used to process and analyze streaming data in real time. It can be applied in scenarios like monitoring social media feeds, tracking user activities, and generating real-time insights.

- Batch ETL (Extract, Transform, Load): Beam enables efficient extraction, transformation, and loading of large volumes of batch data. It’s commonly used to preprocess and clean data before storing it in data warehouses or databases.

- Event Time Processing: Apache Beam provides features for event time processing, which is critical for scenarios where events occur at different times but are processed in the correct order.

- IoT Data Processing: With the rise of Internet of Things (IoT), Beam can be used to ingest and process data from various sensors and devices in real time.

- Clickstream Analysis: For websites and applications, Beam can help analyze clickstream data to understand user behavior and make data-driven decisions to improve user experiences.

- Fraud Detection: Beam can be used to detect patterns and anomalies in streaming data, helping to identify potential fraudulent activities in real time.

- Financial Data Processing: Beam is applicable in the financial industry for processing and analyzing stock market data, transactions, and trading activities.

- Recommendation Systems: In e-commerce and entertainment, Beam can process user interaction data to generate personalized recommendations for products, movies, music, and more.

- Data Enrichment: Beam can enrich data by joining streams or batches of data with external datasets, providing additional context for analysis.

- Machine Learning Pipelines: Beam can be used to preprocess and transform data before feeding it into machine learning models. It supports data preparation steps like feature engineering and normalization.

- Log Analysis: For system monitoring and troubleshooting, Beam can process log data in real time, identifying issues and anomalies to ensure smooth operations.

- Supply Chain Optimization: In logistics and supply chain management, Beam can process data related to shipments, inventory, and demand to optimize routes and inventory levels.

These are just a few examples of how Apache Beam can be applied to various data processing scenarios. The key advantage of using Beam is its portability across different processing engines, allowing developers to write pipelines once and run them on different platforms without major code changes.

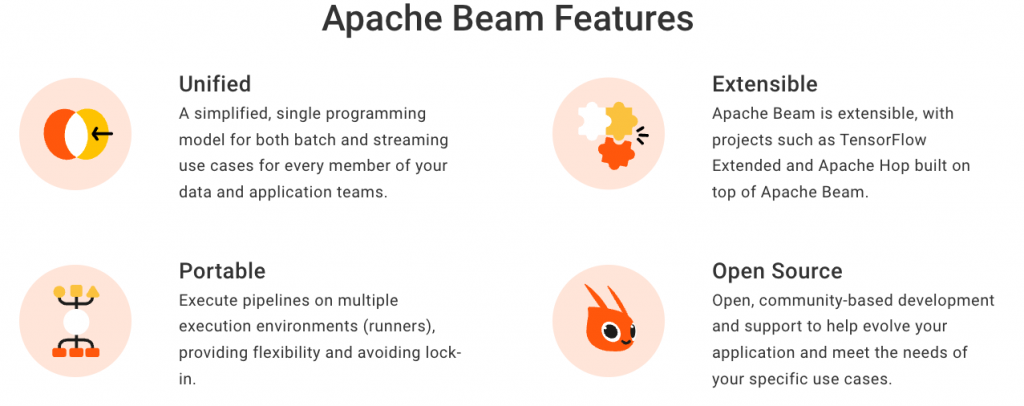

What are the feature of Apache Beam?

Apache Beam offers a set of features that enable developers to build data processing pipelines that are portable, scalable, and expressive. It provides an abstraction layer that allows users to define data processing tasks without being tied to a specific execution engine. Here are some key features of Apache Beam:

- Unified Model: Apache Beam provides a unified programming model for both batch and stream processing. This allows developers to write code that works for both scenarios, promoting code reuse and simplifying development.

- Portability: Beam pipelines can be executed on various processing engines, such as Apache Spark, Apache Flink, Google Cloud Dataflow, and more. This enables users to choose the most suitable execution engine for their use case.

- Parallel Processing: Beam processes data in parallel, taking advantage of the underlying processing engine’s capabilities to distribute work across multiple nodes and cores, improving performance.

- Event Time Processing: Beam supports event time processing, allowing developers to handle out-of-order data based on event timestamps. This is crucial for scenarios where data arrives at different times.

- Windowing: Beam provides windowing capabilities for managing and processing data within specific time intervals or windows, facilitating tasks like sessionization and aggregations.

- Exactly-Once Processing: Beam supports exactly-once processing semantics, ensuring that each piece of data is processed exactly once, even in the presence of failures.

- Dynamic Scaling: Beam pipelines can dynamically scale up or down based on the incoming data volume, enabling efficient resource utilization.

- Backpressure Handling: Beam provides mechanisms to handle backpressure, which occurs when the rate of data production exceeds the rate of data processing. This ensures stability and prevents resource exhaustion.

- Extensibility: Beam allows users to create custom transformations, sources, and sinks, enabling integration with various data sources, APIs, and storage systems.

- State Management: Beam supports distributed state management, allowing developers to maintain and use stateful information across different processing stages.

- Checkpointing: For stream processing, Beam supports checkpointing, which enables fault tolerance by periodically saving the pipeline’s state to a durable storage system.

How Apache Beam Works and Architecture?

- Pipeline Definition: Developers define their data processing pipeline using the Apache Beam SDK. This includes specifying transformations, data sources, sinks, and other processing steps.

- Graph Representation: The pipeline definition is transformed into a directed acyclic graph (DAG) that represents the sequence of transformations and data flows.

- Execution Engine: The user specifies the execution engine where the pipeline will run. Apache Beam supports various execution engines like Apache Spark, Apache Flink, Google Cloud Dataflow, and more.

- Translation Layer: Apache Beam translates the pipeline’s logical representation into executable code that is compatible with the chosen execution engine.

- Distributed Processing: The execution engine distributes the pipeline tasks across a cluster of machines, performing parallel processing and optimizations.

- Data Processing: The execution engine processes the data according to the defined transformations and logic, performing tasks like mapping, filtering, aggregation, and more.

- Windowing and Time Handling: If windowing is used, data is organized into windows based on time intervals. Event time processing and windowing are applied to ensure accurate data processing.

- Output: Processed data is sent to the specified output sinks, which could be databases, storage systems, APIs, or other data destinations.

- Fault Tolerance: Apache Beam’s execution engines ensure fault tolerance by managing checkpoints, maintaining state, and handling failures gracefully.

- Scaling: The execution engine can dynamically scale the pipeline based on incoming data volume and available resources, ensuring efficient resource utilization.

- Completion and Cleanup: Once the pipeline completes, resources are released, and cleanup tasks are performed.

- Monitoring and Metrics: Apache Beam provides monitoring and metrics capabilities to track the progress, performance, and health of the pipeline.

Overall, Apache Beam’s architecture abstracts the complexities of distributed data processing and provides a consistent model for building data pipelines that can be executed on various processing engines. This promotes code portability, scalability, and ease of development across different data processing scenarios.

How to Install Apache Beam?

To install Apache Beam, you need to have the following:

- Java 8 or later

- Python 3.6 or later

- A compatible runner

The following are the detailed steps on how to install Apache Beam on different operating systems:

Windows

- Install Java 8 or later. You can download the Java installer from the Oracle website.

- Install Python 3.6 or later. You can go to their website to download the Python installer.

- Install the Apache Beam SDK for Python. You can perform this by running the below command in a terminal window:

pip install apache-beam[gcp]Code language: CSS (css)macOS

- Install Java 8 or later. You can download the Java installer from the Oracle website.

- Install Python 3.6 or later. You can go to their website to download the Python installer.

- Install the Apache Beam SDK for Python. You can perform this by running the below command in a terminal window:

pip install apache-beam[gcp]Code language: CSS (css)Linux

- Install Java 8 or later. You can download the Java installer from the Oracle website.

- Install Python 3.6 or later. You can go to their website to download the Python installer.

- Install the Apache Beam SDK for Python. You can perform this by running the below command in a terminal window:

pip install apache-beam[gcp]Code language: CSS (css)Once you have installed Apache Beam, you can start writing pipelines. You can find more information about writing pipelines in the Apache Beam documentation.

Here are some of the benefits of using Apache Beam:

- It is a unified model for data processing pipelines.

- It can be used to process data on a variety of platforms, including Apache Spark, Apache Flink, and Google Cloud Dataflow.

- It provides a variety of features for data processing, such as batch processing, streaming processing, and machine learning.

- It is free to apply and open-source.

Here are some of the drawbacks of using Apache Beam:

- It can be complex to learn and use.

- It can be slow for some applications, especially those that use a lot of data.

- It is not as popular as some other data processing frameworks, such as Spark and Flink.

Overall, Apache Beam is a powerful and versatile data processing framework that can be used to process data on a variety of platforms. It is a good choice for developers who want to build scalable, reliable, and efficient data processing pipelines.

Basic Tutorials of Apache Beam: Getting Started

The following are the steps of basic tutorials of Apache Beam:

- Create a new Apache Beam project in IntelliJ IDEA

To create a new Apache Beam project in IntelliJ IDEA, you can follow these steps:

1. Open IntelliJ IDEA.

2. Click on the "Create New Project" button.

3. In the "New Project" dialog box, select the "Project" project type and click on

the "Next" button.

4. In the "Choose a project SDK" dialog box, select the "Java SDK 1.8" option and

click on the "Next" button.

5. In the "Configure Project" dialog box, enter a name for your project and click

on the "Finish" button.Code language: JavaScript (javascript)- Add the Apache Beam SDK to your project

To add the Apache Beam SDK to your project, you can follow these steps:

1. Unlock the project in IntelliJ IDEA.

2. In the project window, right-click on the "pom.xml" file and select the "Open

Module Settings" menu item.

3. Select the "Dependencies" tab in the "Module Settings" dialog box.

4. Click on the "+" button and select the "Add Library" menu item.

5. In the "Add Library" dialog box, select the "Maven" tab.

6. Enter "org.apache.beam" in the "Group ID" field.

7. Enter "beam-sdks-python-io" in the "Artifact ID" field.

8. Click on the "OK" button.Code language: PHP (php)- Write a simple Apache Beam pipeline

A simple Apache Beam pipeline can be written in a few lines of code. The following is an example of a simple pipeline that reads a file and prints the contents of the file to the console:

import apache_beam as beam

with beam.Pipeline() as pipeline:

(pipeline

| 'Read data' >> beam.io.ReadFromText('data.txt')

| 'Print data' >> beam.io.WriteToText('output.txt'))

pipeline.run()Code language: JavaScript (javascript)- Run your pipeline

To run your pipeline, you can use the run() method of the Pipeline object. The following code runs the pipeline that was created in the previous step:

pipeline.run()Code language: CSS (css)

👤 About the Author

Ashwani is passionate about DevOps, DevSecOps, SRE, MLOps, and AiOps, with a strong drive to simplify and scale modern IT operations. Through continuous learning and sharing, Ashwani helps organizations and engineers adopt best practices for automation, security, reliability, and AI-driven operations.

🌐 Connect & Follow:

- Website: WizBrand.com

- Facebook: facebook.com/DevOpsSchool

- X (Twitter): x.com/DevOpsSchools

- LinkedIn: linkedin.com/company/devopsschool

- YouTube: youtube.com/@TheDevOpsSchool

- Instagram: instagram.com/devopsschool

- Quora: devopsschool.quora.com

- Email– contact@devopsschool.com