What is AWS EMR?

Amazon EMR (Elastic MapReduce) is a cloud-native big data platform provided by Amazon Web Services (AWS). It allows you to process and analyze large amounts of data using popular distributed processing frameworks like Apache Hadoop, Apache Spark, and others. EMR simplifies the setup, scaling, and management of big data clusters, making it easier to perform data processing tasks.

Top 10 Use Cases of AWS EMR:

- Batch Processing: EMR is ideal for batch processing tasks like data cleansing, transformation, and ETL (Extract, Transform, Load) operations on large datasets.

- Data Warehousing: You can use EMR to perform data analysis and transformations before loading data into a data warehouse like Amazon Redshift.

- Log Analysis: EMR can be used to analyze and process logs and event data to extract insights and identify trends.

- Machine Learning: EMR can preprocess and transform data for machine learning tasks, feeding clean and prepared data into ML models.

- Recommendation Systems: Process user behavior data to build recommendation systems that suggest products, content, or services to users.

- Genomics Data Analysis: EMR can handle large-scale genomics data analysis tasks, enabling research in fields like personalized medicine.

- Real-Time Analytics: EMR can process real-time streaming data, allowing organizations to gain insights and take actions on the fly.

- Sentiment Analysis: Analyze social media data or customer feedback to understand sentiment and opinions.

- Clickstream Analysis: Process and analyze clickstream data from websites or apps to understand user behavior and engagement.

- Fraud Detection: EMR can analyze transaction data to identify patterns and anomalies associated with fraudulent activities.

These use cases demonstrate the versatility of AWS EMR in handling various big data processing scenarios. By leveraging the power of distributed processing frameworks, EMR enables efficient and scalable data processing, analysis, and insights generation.

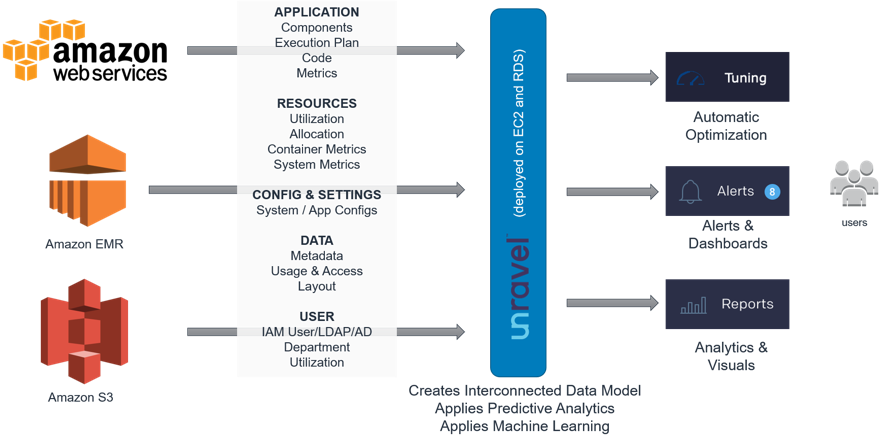

EMR integrates seamlessly with other AWS services, allowing you to build end-to-end data processing and analytics pipelines using services like Amazon S3, Amazon Redshift, Amazon DynamoDB, and more.

What are the features of AWS EMR?

- Managed Clusters: AWS EMR provides managed clusters that simplify the setup, configuration, and scaling of big data processing clusters.

- Distributed Processing: EMR supports distributed processing frameworks like Hadoop and Spark, enabling parallel processing of large datasets.

- Integration with AWS Services: Seamlessly integrates with other AWS services like Amazon S3, Amazon Redshift, Amazon DynamoDB, and more for data storage and analysis.

- Auto Scaling: EMR clusters can be configured to automatically scale up or down based on the processing requirements, optimizing resource utilization.

- Security: Offers various security features including encryption, VPC (Virtual Private Cloud) support, IAM (Identity and Access Management), and more.

- Managed Compute: EMR provides a wide range of instance types optimized for various processing tasks, allowing you to choose the most suitable resources.

- Monitoring and Logging: EMR provides monitoring and logging capabilities to track cluster performance, resource usage, and job progress.

- Pre-Installed Applications: EMR clusters come with pre-installed applications like Hive, Pig, Spark, and more, making it easier to start processing tasks.

- Customizable: You can customize cluster configurations, choose the version of frameworks, and add additional software as needed.

- Spot Instances: Utilize AWS Spot Instances to lower costs by taking advantage of available spare capacity.
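As a concrete illustration of the Auto Scaling feature, the sketch below attaches a managed scaling policy to an existing cluster with the AWS CLI. The limits (2 to 10 instances) and the function name `set_scaling_limits` are illustrative assumptions, and managed scaling is only available on newer EMR releases, so check the documentation for your release before relying on it.

```shell
#!/bin/sh
# Illustrative limits; pick values that match your workload and budget.
MIN_UNITS=2
MAX_UNITS=10

set_scaling_limits() {
  # $1: ID of a running cluster, for example j-XXXXXXXXXXXXX
  # The last argument is quoted so the shell does not brace-expand it.
  aws emr put-managed-scaling-policy \
    --cluster-id "$1" \
    --managed-scaling-policy \
    "ComputeLimits={UnitType=Instances,MinimumCapacityUnits=$MIN_UNITS,MaximumCapacityUnits=$MAX_UNITS}"
}
```

Calling `set_scaling_limits j-XXXXXXXXXXXXX` with a real cluster ID would let EMR resize the cluster between the two limits based on load.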

How AWS EMR Works and Its Architecture

AWS EMR processes data in a distributed and parallel manner. Here’s an outline of how it works:

- Data Ingestion: Your data is stored in Amazon S3, HDFS (Hadoop Distributed File System), or other supported data sources.

- Cluster Creation: You create an EMR cluster using the AWS Management Console, AWS CLI, or SDK. You configure the cluster size, instance types, and software applications to be installed.

- Job Submission: You submit jobs to the EMR cluster using frameworks like Apache Hadoop MapReduce, Apache Spark, Hive, etc.

- Distributed Processing: The cluster processes data in parallel across the instances. Data is split into smaller chunks that are processed simultaneously.

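- Data Movement: During processing, intermediate data is exchanged between instances over the network, while input and output are read and written through HDFS or other storage connectors such as the one for Amazon S3.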

- Results: Processed data or results are written back to Amazon S3 or other storage locations.
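The split, process in parallel, and combine flow described in these steps can be sketched on a single machine with a plain shell pipeline. This is only an analogy, not how EMR runs jobs: the sample text and the function name `word_count` are made up for illustration, and a real cluster would spread each stage across many instances.

```shell
#!/bin/sh
# Sample input, invented for this illustration.
input='spark hadoop spark
hive spark hadoop'

word_count() {
  # tr      -> "map":     emit one word per line
  # sort    -> "shuffle": bring identical words next to each other
  # uniq -c -> "reduce":  collapse each run of identical words into a count
  # awk     -> format the result as "word count"
  tr -s ' ' '\n' | sort | uniq -c | awk '{ print $2, $1 }'
}

printf '%s\n' "$input" | word_count
# prints:
# hadoop 2
# hive 1
# spark 3
```

The same three stages are what frameworks such as Hadoop MapReduce and Spark distribute across the core and task nodes of a cluster.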

EMR Cluster Architecture:

EMR clusters consist of several components:

- Master Node: Manages the cluster and coordinates task execution.

- Core Nodes: Perform data processing tasks and store data in HDFS.

- Task Nodes: Perform data processing tasks but don’t store data in HDFS.

- Amazon S3: Used for data storage and input/output of processed results.

- Applications: Software applications like Hadoop, Spark, Hive, Pig, etc., run on the cluster.

- Node Manager: On YARN-based releases, the YARN NodeManager on each core and task node manages task execution. The TaskTracker played this role in Hadoop 1.

EMR’s architecture abstracts much of the complexity of setting up and managing distributed clusters, allowing you to focus on your data processing tasks. It automatically handles provisioning, scaling, and resource management, making it easier to process large-scale data efficiently.
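To make the node roles concrete, here is a minimal sketch of how master, core, and task nodes could be declared as instance groups with the AWS CLI. The counts, the instance type, and the function name `create_cluster_with_groups` are assumptions chosen for illustration, and `--use-default-roles` assumes the default EMR roles already exist in your account.

```shell
#!/bin/sh
# Hypothetical sizes; adjust instance types and counts to your workload.
MASTER_GROUP="InstanceGroupType=MASTER,InstanceCount=1,InstanceType=m5.xlarge"
CORE_GROUP="InstanceGroupType=CORE,InstanceCount=2,InstanceType=m5.xlarge"
TASK_GROUP="InstanceGroupType=TASK,InstanceCount=2,InstanceType=m5.xlarge"

create_cluster_with_groups() {
  # $1: cluster name (any label you choose)
  aws emr create-cluster \
    --name "$1" \
    --release-label emr-5.30.0 \
    --applications Name=Hadoop Name=Spark \
    --use-default-roles \
    --instance-groups "$MASTER_GROUP" "$CORE_GROUP" "$TASK_GROUP"
}
```

Core nodes hold HDFS data, so the task group is usually the safer one to resize or remove when demand changes.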

How to Install AWS EMR?

EMR is a managed service, so there is nothing to install on your own machines. To get started, create a cluster by following these steps:

- Open the AWS Management Console in the browser and sign in to your account.

- Click the Services tab and select EMR.

- Click the Create Cluster button.

- In the Cluster Name field, enter a name for your cluster.

- In the Release field, select the release version of EMR that you want to use.

- In the Hardware Configuration section, select the instance type and number of nodes for your cluster.

- In the Software Configuration section, select the applications that you want to install, such as Hadoop and Spark. Their versions are determined by the EMR release you selected.

- In the Advanced Options section, you can configure additional options for your cluster, such as the security group and the VPC.

- Click the Create Cluster button to create your cluster.

Once your cluster is created, you can start submitting jobs to it.

To submit a job to AWS EMR, you can use the AWS CLI or the AWS Management Console.

Here is an example of how to submit a job to AWS EMR using the AWS CLI:

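    aws emr add-steps --cluster-id my-cluster-id --steps file://./my-steps.json

This command adds the steps defined in my-steps.json to the cluster my-cluster-id (replace it with your real cluster ID, which has the form j-XXXXXXXXXXXXX). EMR calls each submitted job a step; every entry in the JSON file names a step and describes what it runs, for example a script such as my-job.sh.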
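A step definition file for `aws emr add-steps` could look like the sketch below, which writes the file and wraps the submission in a small function. The bucket `my-bucket`, the file name `steps.json`, the step name, and the function name `submit_steps` are all placeholders, and the step assumes my-job.sh has been uploaded to that bucket beforehand.

```shell
#!/bin/sh
# Write a step definition file. The step name and the S3 path are placeholders.
cat > steps.json <<'EOF'
[
  {
    "Type": "CUSTOM_JAR",
    "Name": "Run my-job.sh",
    "ActionOnFailure": "CONTINUE",
    "Jar": "command-runner.jar",
    "Args": ["bash", "-c", "aws s3 cp s3://my-bucket/my-job.sh . && bash my-job.sh"]
  }
]
EOF

submit_steps() {
  # $1: ID of a running cluster, for example j-XXXXXXXXXXXXX
  aws emr add-steps --cluster-id "$1" --steps file://./steps.json
}
```

Here `command-runner.jar` runs the given command on the cluster, and `ActionOnFailure` controls whether the cluster continues with later steps if this one fails.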

Here is an example of how to submit a job to AWS EMR using the AWS Management Console:

- Go to the AWS Management Console and sign in to your account.

- Click the Services tab and select EMR.

- Click Clusters and select the cluster that you want to submit the job to.

- Open the Steps tab.

- Click the Add step button.

- In the Type field, select the kind of step that you want to run, such as a custom JAR or a Spark application.

- In the Name field, enter a name for your step.

- Enter the location of the JAR or script to run and, in the Arguments field, any arguments for it.

- Choose what the cluster should do if the step fails.

- Click the Add step button to submit your job. EMR calls each submitted job a step, and the exact labels vary slightly between console versions.

Basic Tutorials of AWS EMR: Getting Started

Here are some step-by-step basic tutorials of AWS EMR:

- Create a simple EMR cluster using the AWS Management Console: This tutorial shows you how to create a simple EMR cluster using the AWS Management Console.

- Open the AWS Management Console in your browser and sign in to your account.

- Click the Services tab and select EMR.

- Click the Create Cluster button.

- In the Cluster Name field, enter a name for your cluster.

- In the Release field, select the release version of EMR that you want to use.

- In the Hardware Configuration section, select the instance type and number of nodes for your cluster.

- In the Software Configuration section, select the applications that you want to install, such as Hadoop and Spark. Their versions are determined by the EMR release you selected.

- In the Advanced Options section, you can configure additional options for your cluster, such as the security group and the VPC.

- Click the Create Cluster button to create your cluster.

- Create a simple EMR cluster using the AWS CLI: This tutorial shows you how to create a simple EMR cluster using the AWS CLI.

- Open a command prompt on a machine where the AWS CLI is installed and configured with your credentials.

- Run the following command to create an EMR cluster:

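    aws emr create-cluster --name my-cluster --release-label emr-5.30.0 --instance-type m5.xlarge --instance-count 3 --applications Name=Hadoop Name=Spark --use-default-roles

This command will create a cluster called my-cluster with three m5.xlarge instances. The cluster will use the release version emr-5.30.0 and the Hadoop and Spark applications. The --use-default-roles option relies on the default EMR roles, which you can create once per account with aws emr create-default-roles.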
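Creating a cluster takes several minutes, so it helps to wait for it and check its state before submitting work. The sketch below wraps the relevant AWS CLI calls in two small functions; the function names `wait_for_cluster` and `terminate_cluster` are illustrative, and the cluster ID is whatever `create-cluster` printed for you.

```shell
#!/bin/sh
wait_for_cluster() {
  # $1: cluster ID printed by create-cluster, for example j-XXXXXXXXXXXXX
  # Block until the cluster is running, then print its current state.
  aws emr wait cluster-running --cluster-id "$1" &&
  aws emr describe-cluster --cluster-id "$1" \
    --query 'Cluster.Status.State' --output text
}

terminate_cluster() {
  # $1: cluster ID. Terminating stops billing for the cluster's instances.
  aws emr terminate-clusters --cluster-ids "$1"
}
```

A typical session would call `wait_for_cluster` with the new cluster ID, submit jobs, and then call `terminate_cluster` with the same ID once the results are safely stored.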

- Submit a simple job to EMR using the AWS Management Console: This tutorial shows you how to submit a simple job to EMR using the AWS Management Console.

- Open the AWS Management Console and sign in to your account.

- Click the Services tab and select EMR.

- Click Clusters and select the cluster that you want to submit the job to.

- Open the Steps tab.

- Click the Add step button.

- In the Type field, select the kind of step that you want to run, such as a custom JAR or a Spark application.

- In the Name field, enter a name for your step.

- Enter the location of the JAR or script to run and, in the Arguments field, any arguments for it.

- Choose what the cluster should do if the step fails.

- Click the Add step button to submit your job. EMR calls each submitted job a step, and the exact labels vary slightly between console versions.

Here is an example of a simple job that you can submit to EMR:

    #!/bin/bash
    set -euo pipefail

    # Location of the Spark installation.
    # Assumption: /usr/lib/spark, the usual path on EMR nodes; set SPARK_HOME if yours differs.
    SPARK_HOME="${SPARK_HOME:-/usr/lib/spark}"

    # Copy the local "data" file or directory to HDFS
    hdfs dfs -put -f data /data

    # Run the Spark word count example on YARN.
    # wordcount.py takes a single input path and prints the counts.
    spark-submit --master yarn --deploy-mode client \
      "$SPARK_HOME/examples/src/main/python/wordcount.py" /data

This job will copy the data to HDFS and then run a Spark job to count the words in the data.

👤 About the Author

Ashwani is passionate about DevOps, DevSecOps, SRE, MLOps, and AiOps, with a strong drive to simplify and scale modern IT operations. Through continuous learning and sharing, Ashwani helps organizations and engineers adopt best practices for automation, security, reliability, and AI-driven operations.
