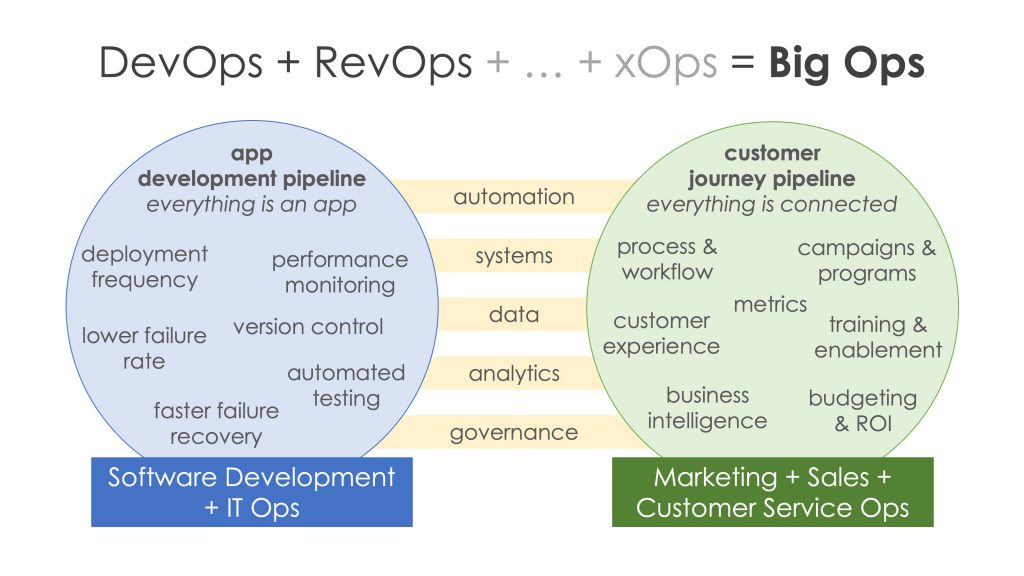

BigOps refers to the practice of managing and operating large-scale, complex systems. It spans both traditional IT operations and newer cloud-native technologies and methodologies. The term is most common in large organizations that rely on big data and distributed systems, such as e-commerce, finance, and social media companies.

BigOps typically combines technical and operational skills, including data management, orchestration, security, and monitoring. It covers a wide range of activities, including:

- Managing and scaling large-scale data pipelines

- Automating the deployment and scaling of applications

- Managing and scaling distributed systems

- Ensuring high availability and disaster recovery

- Managing and monitoring the performance of the systems

- Managing security and compliance
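To make the "automating the deployment and scaling of applications" item a little more concrete, here is a minimal sketch of a proportional scaling rule of the kind horizontal autoscalers commonly use. The function name `desired_replicas`, its parameters, and the default limits are hypothetical and chosen only for illustration; real platforms add smoothing, cooldowns, and multiple metrics on top of a rule like this.

```python
import math


def desired_replicas(current_replicas, observed_cpu_pct, target_cpu_pct,
                     min_replicas=1, max_replicas=10):
    """Return a replica count that moves average CPU toward the target.

    Hypothetical helper: all names and default limits are assumptions
    made for this sketch, not part of any specific tool.
    """
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_cpu_pct <= 0:
        raise ValueError("target_cpu_pct must be positive")

    # Proportional rule: scale the replica count by observed / target load.
    raw = math.ceil(current_replicas * observed_cpu_pct / target_cpu_pct)

    # Clamp to the configured bounds so the system never scales to zero
    # or grows without limit.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas running at 90% CPU with a 60% target
    print(desired_replicas(4, 90, 60))
```

The clamping step is the part that matters most operationally: the lower bound protects availability, and the upper bound protects cost and downstream capacity.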

BigOps is constantly evolving as new technologies and methodologies emerge and as organizations look for better ways to manage and operate their systems at scale.

In summary, BigOps is the practice of managing and operating large-scale, complex systems, and the field continues to evolve along with the technologies and methodologies it relies on.

I’m a DevOps/SRE/DevSecOps/Cloud expert passionate about sharing knowledge and experiences. I work at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I reviewed, and SEO strategies at Wizbrand.

You can find me, Rajesh Kumar, on my personal website and on YouTube, Instagram, X, Facebook, LinkedIn, Pinterest, Quora, and Wizbrand.