What is generative AI?

Generative AI refers to a category of artificial intelligence that focuses on generating new content or data that resembles existing data. Unlike traditional (discriminative) AI, which is typically designed to analyze data and make predictions from it, generative AI creates new, synthetic data based on the patterns it has learned from its training data. This can include images, text, music, and other types of content. Generative AI models are often built on architectures such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and transformer models like GPT (Generative Pre-trained Transformer). These models have a wide range of applications, including art creation, natural language processing, drug discovery, and more.

How does generative AI work?

Generative AI works by learning patterns from a set of training data and then using these learned patterns to generate new, similar data. Here is an overview of how it works:

Key Techniques in Generative AI:

- Generative Adversarial Networks (GANs):

  - Architecture: GANs consist of two neural networks, the generator and the discriminator, that are trained simultaneously.

  - Generator: This network generates new data samples.

  - Discriminator: This network evaluates the generated data against real data.

  - Training Process: The generator creates data, the discriminator assesses it, and they both improve through a process of adversarial learning, where the generator tries to produce data that can fool the discriminator, and the discriminator gets better at distinguishing real from fake data.

- Variational Autoencoders (VAEs):

  - Architecture: VAEs consist of an encoder and a decoder.

  - Encoder: Compresses the input data into a latent space representation.

  - Decoder: Reconstructs the data from this representation.

  - Training Process: VAEs are trained to minimize the difference between the input data and the reconstructed data, while also ensuring that the latent space representation follows a known distribution (usually Gaussian).

- Transformers:

  - Architecture: Transformers use self-attention mechanisms to process and generate sequences of data.

  - Training Process: Models like GPT (Generative Pre-trained Transformer) are trained on large datasets of text to predict the next word in a sentence, allowing them to generate coherent and contextually relevant text.
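The next-word objective can be illustrated with a minimal Python sketch showing how a token sequence becomes (context, next word) training pairs. It assumes plain whitespace tokenization and a hypothetical context limit `window`. Real models such as GPT use subword tokenizers and much longer contexts, and they score every position of a sequence in a single pass rather than building pairs one by one.

```python
def next_token_pairs(text, window=3):
    """Build (context, target) pairs for next-word prediction.

    Assumes simple whitespace tokenization (real models use subword tokenizers).
    `window` is a hypothetical maximum context length.
    """
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - window):i]  # up to `window` preceding words
        target = tokens[i]                       # the word the model must predict
        pairs.append((context, target))
    return pairs


for context, target in next_token_pairs("the cat sat on the mat"):
    print(context, "->", target)
```

During training, the model is penalized whenever it assigns low probability to `target` given `context`. Repeating this over a very large corpus is what lets it produce fluent continuations.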

General Workflow:

- Data Collection and Preprocessing:

  - Collect a large and representative dataset related to the task (e.g., images, text).

  - Preprocess the data to ensure it is in a suitable format for training (e.g., normalization, tokenization).

- Model Training:

  - Training Objective: The model learns to generate data that is indistinguishable from the real data.

  - Loss Functions: Specific loss functions guide the training process. For example, GANs use a combination of generator and discriminator loss functions.

- Generation:

  - Once trained, the model can generate new data samples by feeding it random noise (in the case of GANs) or latent space vectors (in the case of VAEs) or a prompt (in the case of transformers).

- Evaluation and Fine-tuning:

  - Generated data is evaluated for quality, diversity, and fidelity.

  - The model may be fine-tuned using additional training or by adjusting hyperparameters to improve performance.
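The preprocessing step can be sketched as follows, under assumed conventions. Images are scaled to [-1, 1], a common choice when a generator's output layer uses `tanh`. Text is split on whitespace and mapped to integer ids from a small vocabulary. The names `normalize_images`, `build_vocab`, and `tokenize` are hypothetical helpers, not a library API.

```python
import numpy as np


def normalize_images(pixels):
    """Scale uint8 pixel values from [0, 255] to [-1, 1] (assumed target range)."""
    return pixels.astype(np.float32) / 127.5 - 1.0


def build_vocab(corpus):
    """Map each distinct lowercase word to an integer id; id 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for sentence in corpus:
        for word in sentence.lower().split():
            vocab.setdefault(word, len(vocab))  # new words get the next free id
    return vocab


def tokenize(sentence, vocab):
    """Convert a sentence into token ids, using 0 for words not in the vocabulary."""
    return [vocab.get(word, 0) for word in sentence.lower().split()]


vocab = build_vocab(["The cat sat", "the dog sat"])
print(tokenize("the bird sat", vocab))
print(normalize_images(np.array([0, 255], dtype=np.uint8)))
```

Here the vocabulary assigns ids 1 to 4 to "the", "cat", "sat", and "dog". As a result, "the bird sat" becomes `[1, 0, 3]`, with the unseen word "bird" mapped to the `<unk>` id.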

Applications:

- Image Generation: Creating realistic images for art, design, and media.

- Text Generation: Producing human-like text for chatbots, content creation, and language translation.

- Music Generation: Composing music in various styles and genres.

- Drug Discovery: Generating molecular structures with potential pharmaceutical applications.

Challenges:

- Quality Control: Ensuring the generated content is high-quality and relevant.

- Ethical Concerns: Addressing issues like misinformation, plagiarism, and bias in generated content.

- Computational Resources: Training generative models often requires significant computational power and data.

Generative AI vs Search Engine

| Feature | Generative AI | Search Engine |
|---|---|---|
| Function | Creates new content (text, code, images, etc.) | Finds existing information on the web |
| User Interaction | Conversational or prompt-based | Keyword-based search |
| Output | Directly generated content, can be creative or informative | List of links to relevant websites |
| Understanding | Can understand and respond to complex questions and requests | Analyzes keywords and website content |
| Accuracy | Content quality and accuracy can vary depending on training data | Aims to provide the most relevant results based on ranking algorithms |
| Bias | Can inherit biases from training data | Can be biased based on website content and search algorithms |
| Examples | ChatGPT, Bard, DALL-E 2 | Google Search, Bing, DuckDuckGo |

What are the main types of generative AI models?

Here are some of the main types of generative AI models:

- Generative Adversarial Networks (GANs): Imagine two AI models pitted against each other. One, the generator, tries to create new, realistic data (like images or text). The other, the discriminator, tries to identify if the data is real or generated. This competition helps the generator improve its creations over time. GANs are known for producing high-quality, realistic outputs.

- Variational Autoencoders (VAEs): These models work by compressing data (like an image) into a smaller, latent representation. Then, they learn to decode this representation back into a similar version of the original data. VAEs are useful for tasks like dimensionality reduction and anomaly detection, and can also be used for generating new data variations.

- Autoregressive models: This is a broad category of models that generate outputs one piece at a time, such as a word in a sentence or a pixel in an image. Each new piece is conditioned on the pieces generated so far, so the output can be steered directly, for example by supplying a prompt as the starting sequence.

- Recurrent Neural Networks (RNNs): A type of autoregressive model, RNNs are particularly useful for sequential data like text. They consider the previous elements in a sequence when predicting the next one, making them well-suited for tasks like language translation and text generation.

- Transformer-based models: This is another powerful architecture for autoregressive models. Transformers are particularly adept at understanding long-range dependencies in data, which makes them excel in tasks like machine translation and text summarization.

- Reinforcement Learning for Generative Tasks: This approach involves training an AI model through trial and error, where the model receives rewards for generating good outputs. This is a promising area of research with potential applications in areas like game development and robot control.
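The one-piece-at-a-time loop shared by autoregressive models can be illustrated with a toy example. In this sketch, a hand-written next-word probability table (`NEXT_WORD_PROBS`, purely hypothetical) stands in for a trained network. Each word is conditioned only on the previous word, whereas an RNN or transformer would condition on the entire prefix. Setting `temperature` to 0 gives greedy decoding, and larger values make sampling more varied.

```python
import numpy as np

# Hypothetical toy "model": probability of the next word given the current word.
NEXT_WORD_PROBS = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"dog": 1.0},
    "cat": {"sat": 0.7, "</s>": 0.3},
    "dog": {"ran": 0.8, "</s>": 0.2},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
}


def generate(max_len=10, temperature=0.0, seed=0):
    """Generate one word at a time, each conditioned on the previously generated word.

    temperature == 0 picks the most likely word (greedy decoding);
    temperature > 0 samples from the temperature-scaled distribution.
    """
    rng = np.random.default_rng(seed)
    word, output = "<s>", []
    for _ in range(max_len):
        candidates = list(NEXT_WORD_PROBS[word])
        probs = np.array([NEXT_WORD_PROBS[word][c] for c in candidates])
        if temperature == 0.0:
            word = candidates[int(np.argmax(probs))]
        else:
            logits = np.log(probs) / temperature
            scaled = np.exp(logits - logits.max())  # softmax over scaled logits
            word = str(rng.choice(candidates, p=scaled / scaled.sum()))
        if word == "</s>":  # end-of-sequence token stops generation
            break
        output.append(word)
    return " ".join(output)


print(generate())  # the cat sat
```

Greedy decoding always follows the most likely word, so it is repeatable. Sampling with `temperature > 0` trades that predictability for variety.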

What are the different types of generative AI models?

Here is a table summarizing different types of generative AI models, including their core components, applications, strengths, and weaknesses:

| Model Type | Core Components | Applications | Strengths | Weaknesses |
|---|---|---|---|---|
| Generative Adversarial Networks (GANs) | Generator, Discriminator | Image generation, deepfakes, data augmentation | High-quality, realistic outputs | Training instability, mode collapse |
| Variational Autoencoders (VAEs) | Encoder, Decoder, Latent Space | Data compression, anomaly detection, image generation | Probabilistic framework, interpretable latent space | Outputs may lack sharpness and fine details |
| Transformers | Self-attention mechanisms, Encoder-Decoder | Text generation, language translation, text summarization | Handles long-range dependencies, state-of-the-art in NLP | High computational cost, large training data needed |
| Recurrent Neural Networks (RNNs) | Hidden states, Recurrent connections | Sequence prediction, text generation, time series forecasting | Good for sequential data | Struggles with long-range dependencies, training difficulties |
| Auto-Regressive Models | Sequence modeling, Predictive coding | Text generation, music generation, code generation | Simple and effective for sequence generation | Can be slow due to sequential nature |
| Flow-based Models | Invertible transformations, Density estimation | Image generation, probabilistic modeling | Exact likelihood calculation, reversible transformations | Can be complex to implement, less popular |
| Energy-Based Models (EBMs) | Energy function, Optimization process | Image generation, probabilistic modeling | Flexible and powerful | Often require complex and slow optimization |
| Diffusion Models | Stochastic processes, Noise modeling | Image generation, denoising | Produce high-quality images, strong theoretical foundation | Slow sampling process, computationally intensive |

This table provides a concise overview of the various types of generative AI models, their core components, applications, strengths, and weaknesses, offering a clear comparison for understanding their unique characteristics and uses.
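Of the model types listed, diffusion models are the least self-explanatory. Their training relies on a forward process that gradually adds Gaussian noise to data, and the model learns to reverse that process. Below is a minimal NumPy sketch of the forward step only. It assumes the linear noise schedule used in the original DDPM paper (beta rising from 1e-4 to 0.02 over 1,000 steps) and omits the denoising network. The function names are hypothetical.

```python
import numpy as np


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear noise schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * noise in closed form.

    Also returns the noise, which is what a denoising model is typically trained to predict.
    """
    noise = rng.standard_normal(x0.shape)
    a_bar = alpha_bars[t]
    xt = np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * noise
    return xt, noise


rng = np.random.default_rng(0)
alpha_bars = make_alpha_bars()
x0 = np.ones(4)                                  # a stand-in "clean" data sample
x_early, _ = add_noise(x0, 10, alpha_bars, rng)  # still close to x0
x_late, _ = add_noise(x0, 999, alpha_bars, rng)  # almost pure noise
```

Generating an image means running the learned reverse process for many steps, starting from pure noise. This is why the table lists a slow sampling process as a weakness.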

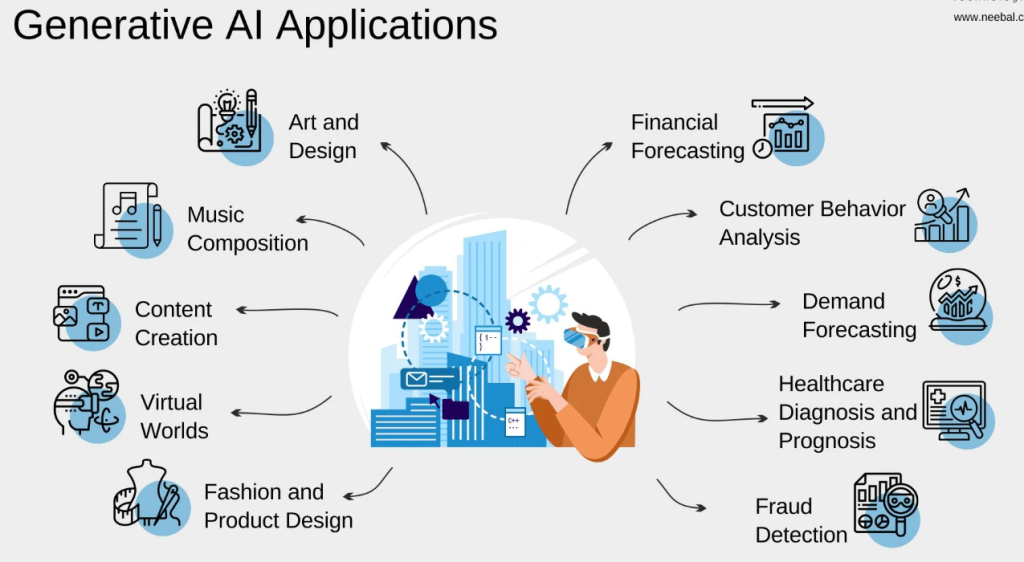

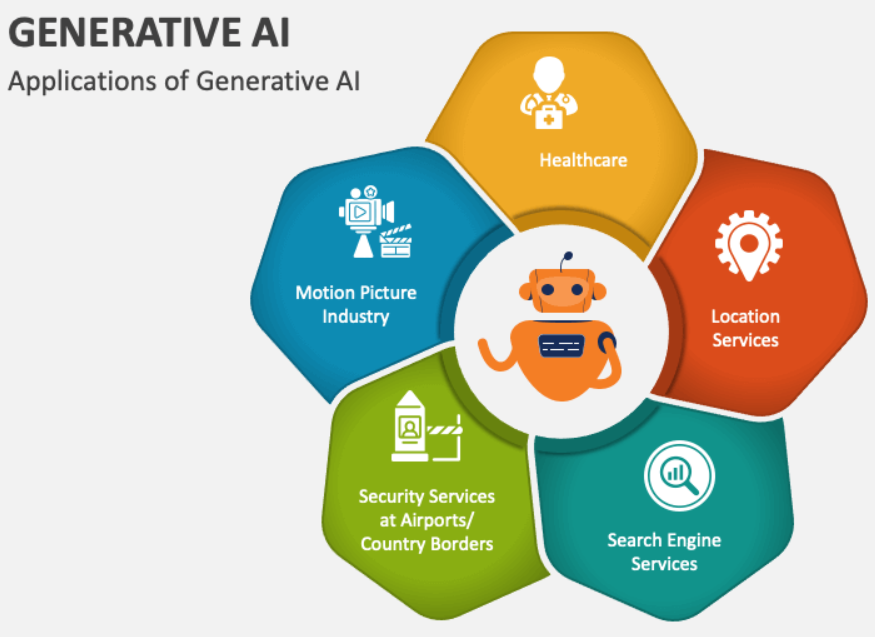

What are the applications of generative AI?

Generative AI has a wide range of applications across various fields. Here are some of the key applications:

| Application Area | Description |
|---|---|
| Content Creation | Generating text, articles, blogs, and reports automatically. Used in journalism, marketing, and blogging. |
| Creative Arts | Creating music, artwork, poetry, and other forms of creative content. AI tools assist artists and musicians. |
| Chatbots and Virtual Assistants | Providing customer service, technical support, and general assistance in various industries through conversational agents. |
| Healthcare | Assisting in medical research, generating medical reports, and providing health advice through virtual assistants. |
| Gaming | Creating game content, designing characters, and generating narratives dynamically within games. |
| Education | Developing personalized learning experiences, creating educational content, and providing tutoring services. |
| Finance | Generating financial reports, conducting market analysis, and providing investment advice through AI models. |
| Entertainment | Producing movie scripts, generating storylines for TV shows, and creating immersive experiences in virtual reality. |
| Marketing and Advertising | Crafting personalized advertisements, generating marketing copy, and creating promotional content. |
| Data Augmentation | Enhancing datasets for training machine learning models by generating synthetic data. |
| Design and Architecture | Generating design concepts, creating 3D models, and assisting in architectural planning. |
| Code Generation | Assisting software developers by generating code snippets, automating repetitive coding tasks, and suggesting code improvements. |
| Social Media | Crafting social media posts, generating hashtags, and automating content scheduling and posting. |
| Legal Assistance | Drafting legal documents, generating contracts, and assisting with legal research and compliance. |
| Language Translation | Providing high-quality translations and generating multilingual content for global audiences. |

These applications illustrate the versatility and potential of generative AI to transform various industries by automating complex tasks, enhancing creativity, and providing personalized experiences.

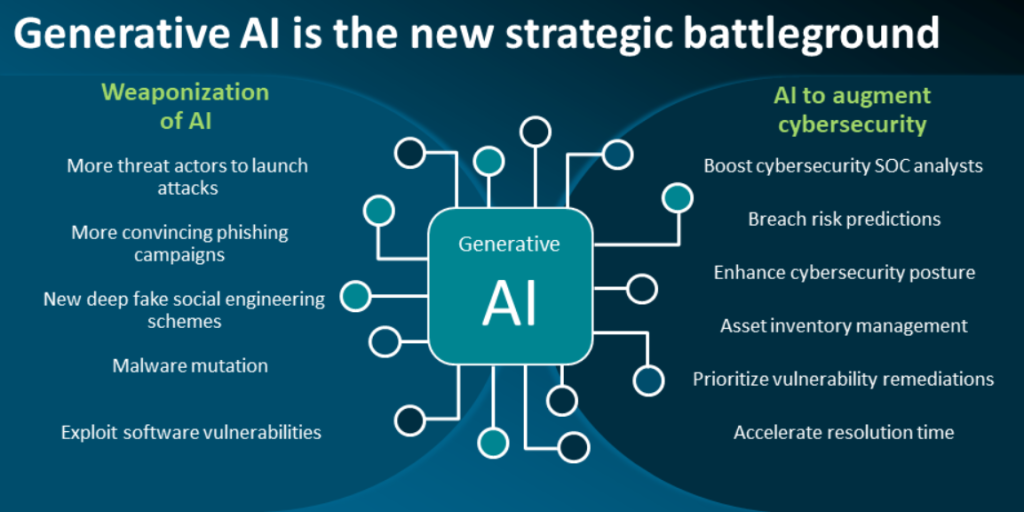

Generative AI in War and Future Battles

Generative AI has the potential to significantly impact warfare and the nature of future battles. Here’s a breakdown of how it might be used and the considerations involved:

Potential Applications:

- Intelligence gathering and analysis: AI can analyze vast amounts of data from satellites, drones, and other sources to identify enemy positions, movements, and weaknesses.

- Generating synthetic environments: AI can create realistic simulations of potential battlefields for training soldiers and testing military strategies.

- Cyber warfare: AI can be used to launch sophisticated cyberattacks on enemy infrastructure and communication systems. It can also be used to defend against such attacks.

- Autonomous weapons systems: AI-powered drones, tanks, or other weapons systems could potentially make decisions on their own about engaging targets, raising significant ethical concerns.

- Logistics and resource management: AI can optimize logistics by predicting supply needs and streamlining deployment and resupply operations.

- Misinformation and propaganda: AI could be used to generate fake news or social media content to manipulate public opinion and disrupt enemy morale.

Challenges and Considerations:

- Ethical concerns: The use of autonomous weapons and the potential for unintended consequences raise serious ethical questions about who is responsible for casualties caused by AI systems.

- Bias and explainability: AI models can inherit biases from the data they are trained on. Ensuring fairness and transparency in AI-driven decisions is crucial in warfare.

- Hacking and manipulation: Adversaries could potentially hack or manipulate AI systems used for military purposes, leading to disastrous consequences.

- Human oversight: Maintaining human control over critical decision-making processes remains essential, even with advanced AI capabilities.

- Arms race: A potential arms race in AI-powered weaponry could lead to increased instability and the risk of escalation.

The Future of Warfare:

The impact of generative AI on warfare is still unfolding. It’s likely that future battles will involve a complex mix of human and AI capabilities. International cooperation and responsible development of AI for military purposes will be essential to mitigate risks and ensure ethical use of this powerful technology.

What are GANs and how do they work?

GANs (Generative Adversarial Networks) are a type of generative AI model that works in a distinctive way. Imagine it as a competition between two AI models:

- Generator: This model acts like an art forger, constantly trying to create new and realistic data, be it images, text, or even music. It starts with a random noise vector and uses its learned parameters to transform it into something that resembles the data it was trained on.

- Discriminator: This model plays the role of the art critic. Its job is to examine both real data (from the training set) and the data generated by the forger (the generator), and determine if it’s real or fake.

Here’s how the training process works:

- Round 1: Generator’s Turn: The generator takes a random noise vector as input and passes it through its network to create new data. This could be a new image that looks like a real photo or a piece of text that sounds like a real sentence.

- Round 1: Discriminator’s Turn: The discriminator receives both the newly generated data and some real data (from the training set). It analyzes both samples and tries to classify them as either real or fake.

- Feedback and Learning: Based on the discriminator’s success or failure in identifying the fake data, both models are updated. The generator is adjusted to improve its ability to fool the discriminator, while the discriminator is fine-tuned to become better at spotting the forgeries.

- Repeat: This adversarial process keeps going, round after round. Over time, the generator learns to create increasingly realistic data, while the discriminator becomes more skilled at spotting fakes. Ideally, they reach a point where the discriminator struggles to tell the difference between real and generated data, signifying that the generator has achieved a high level of mastery.

- The Final Act: Once training is complete, the generator model is the one we keep. We can then use it to generate new and creative data points that are similar to the kind of data it was trained on.
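The rounds above can be traced in a deliberately tiny NumPy sketch. It assumes one-dimensional "real" data drawn from N(4, 1), a linear generator G(z) = a*z + b, and a logistic-regression discriminator D(x) = sigmoid(w*x + c), with gradients written out by hand. The generator uses the common non-saturating loss -log D(G(z)). A setup this small will not produce realistic data and can oscillate instead of converging, which mirrors the training instability GANs are known for.

```python
import numpy as np


def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))


def train_toy_gan(steps=20, lr=0.05, batch=64, seed=0):
    """Alternate discriminator and generator updates on assumed 1-D toy data."""
    rng = np.random.default_rng(seed)
    a, b = 1.0, 0.0  # generator parameters: G(z) = a*z + b
    w, c = 0.1, 0.0  # discriminator parameters: D(x) = sigmoid(w*x + c)
    history = []
    for _ in range(steps):
        real = rng.normal(4.0, 1.0, batch)  # samples standing in for the training set
        z = rng.normal(0.0, 1.0, batch)     # random noise vectors
        fake = a * z + b                    # generator's turn: create data

        # Discriminator's turn: minimize -[log D(real) + log(1 - D(fake))]
        d_real, d_fake = sigmoid(w * real + c), sigmoid(w * fake + c)
        d_loss = -np.mean(np.log(d_real) + np.log(1.0 - d_fake))
        w -= lr * (np.mean((d_real - 1.0) * real) + np.mean(d_fake * fake))
        c -= lr * (np.mean(d_real - 1.0) + np.mean(d_fake))

        # Feedback to the generator: minimize -log D(fake) under the updated discriminator
        d_fake = sigmoid(w * fake + c)
        g_loss = -np.mean(np.log(d_fake))
        grad_fake = (d_fake - 1.0) * w      # dLoss/dfake via the chain rule
        a -= lr * np.mean(grad_fake * z)
        b -= lr * np.mean(grad_fake)
        history.append((d_loss, g_loss))
    return {"a": a, "b": b, "w": w, "c": c, "history": history}


result = train_toy_gan()
print(round(result["b"], 3))
```

The discriminator quickly learns that larger values look "real." The generator's offset `b` therefore drifts upward toward the real data's mean of 4. This is the feedback loop described in the rounds above, on a very small scale.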

Here are some key things to remember about GANs:

- The constant competition between the generator and discriminator is what drives GANs to improve.

- The quality of the generated data depends heavily on the training data. The more diverse and high-quality the training data, the better the results.

- GANs are a powerful tool for generating new data, but they can also be susceptible to biases present in the training data.

What is a VAE and how is it different from GANs?

Here is a comparison between Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) in a tabular format:

| Feature | Variational Autoencoders (VAEs) | Generative Adversarial Networks (GANs) |
|---|---|---|
| Architecture | Encoder-Decoder structure | Generator-Discriminator structure |
| Core Components | Encoder, Decoder, Latent Space | Generator, Discriminator |
| Learning Objective | Reconstruction of input data | Generation of data that can fool the discriminator |
| Training Process | Minimizes reconstruction error and enforces latent space distribution | Adversarial process where generator and discriminator compete |
| Loss Function | Reconstruction loss (e.g., MSE) and KL divergence | Adversarial loss (e.g., binary cross-entropy) for both generator and discriminator |
| Latent Space | Explicitly modeled, typically Gaussian distribution | Implicitly modeled through adversarial training |
| Output Quality | Generally produces smoother outputs | Can produce high-quality, realistic outputs but might have artifacts |
| Stability of Training | Relatively stable and easier to train | Can be unstable and challenging to train |
| Generation Process | Samples from a known distribution (e.g., Gaussian) and decodes to data space | Samples noise and transforms it into data |
| Primary Use Cases | Data compression, anomaly detection, generating smooth variations of data | Image generation, data augmentation, creative applications |
| Example Applications | Denoising autoencoders, interpolation between data points | Deepfakes, image-to-image translation, super-resolution |
| Strengths | Robust probabilistic framework, interpretable latent space | High-quality, sharp, and realistic outputs |
| Weaknesses | Outputs might lack sharpness and fine details | Training can be tricky and outputs might have noise or artifacts |
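The VAE loss function can be made concrete with a NumPy sketch of its objective. The sketch assumes the encoder has already produced a mean `mu` and log-variance `log_var` for a diagonal Gaussian over the latent space. Reconstruction uses mean squared error summed per example, which is one of several common conventions. The sketch also shows the reparameterization trick used to sample latent vectors during training. The encoder and decoder networks are omitted, and the function names are illustrative.

```python
import numpy as np


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps so the sample stays differentiable w.r.t. mu and log_var."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, I) ) for a diagonal Gaussian, summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=-1)


def vae_loss(x, x_recon, mu, log_var):
    """Per-example summed squared error plus KL divergence, averaged over the batch."""
    recon = np.sum((x - x_recon) ** 2, axis=-1)
    return np.mean(recon + kl_to_standard_normal(mu, log_var))


rng = np.random.default_rng(0)
mu = np.array([[1.0, 0.0]])           # assumed encoder output for one example
log_var = np.zeros((1, 2))            # unit variance in both latent dimensions
z = reparameterize(mu, log_var, rng)  # latent sample that would be passed to the decoder
print(kl_to_standard_normal(mu, log_var))  # [0.5]
```

The KL term is zero only when the encoder outputs exactly the standard normal prior. This pull toward a known distribution is why new data can be generated by sampling from a Gaussian and decoding the sample.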

What are transformer models in generative AI?

Transformer models are a powerful architecture used in generative AI, particularly for tasks involving sequential data like text. They excel at understanding the relationships between different parts of a sequence, which makes them well-suited for tasks like:

- Text generation: They can create realistic and coherent text in many forms, such as poems, code, scripts, musical pieces, emails, or letters.

- Machine translation: They can translate text from one language to another while preserving the meaning and style of the original text.

- Text summarization: They can condense lengthy pieces of text into shorter summaries that capture the essential points.

Here’s what makes transformers unique:

- Attention mechanism: Unlike traditional RNNs, transformers don’t process the input one element at a time. They use an attention mechanism that lets every position in the sequence look directly at every other position and focus on the most relevant parts when producing the output. This allows them to capture long-range dependencies more effectively and to process a whole sequence in parallel during training.

- Encoder-decoder structure: Many transformer models follow an encoder-decoder structure. The encoder takes the input sequence and creates a representation that captures its meaning. The decoder then uses this representation to generate the output sequence, one element at a time. GPT models use only the decoder stack and generate text directly from the prompt.
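A single attention head can be sketched in NumPy. This minimal example assumes randomly initialized projection matrices `w_q`, `w_k`, and `w_v`; in a real model these are learned. It implements scaled dot-product attention. The optional `causal` mask is what decoder-only models like GPT use so that each position attends only to itself and earlier positions.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v, causal=False):
    """Single-head scaled dot-product self-attention over x of shape (seq_len, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # every position scores every other position
    if causal:
        # Decoder-style mask: position i may only attend to positions <= i.
        mask = np.triu(np.ones_like(scores, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8))  # 4 tokens with 8-dimensional embeddings
w_q, w_k, w_v = (rng.standard_normal((8, 8)) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v, causal=True)
print(out.shape)  # (4, 8)
```

Because `weights` is a full `seq_len x seq_len` matrix, the first token can influence the last one in a single step. This is how transformers handle the long-range dependencies that RNNs struggle with.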

There are several variations of transformer models used in generative AI, including:

- Generative Pre-trained Transformers (GPT): These are powerful language models pre-trained on massive amounts of text data. They can be fine-tuned for various tasks like text generation, question answering, and summarization.

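- BART (Bidirectional and Auto-Regressive Transformers): These encoder-decoder models are pre-trained as denoising autoencoders. The input text is corrupted (for example, by masking spans of words or shuffling sentences), and the model learns to reconstruct the original. Combining a bidirectional encoder with an autoregressive decoder makes BART well suited to summarization, translation, and other text generation tasks.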

Overall, transformer models are a major force in generative AI, pushing the boundaries of what’s possible in tasks that involve creating and manipulating textual data.

How is generative AI used in natural language processing (NLP)?

Generative AI has several applications in natural language processing (NLP), enabling machines to understand, generate, and manipulate human language in a variety of ways. Here are some key uses:

Text Generation

- Chatbots and Conversational Agents: Generative AI powers chatbots, enabling them to generate human-like responses in real-time, enhancing user interaction and customer service.

- Content Creation: AI can write articles, blog posts, product descriptions, and other content, helping automate the content creation process.

Language Translation

- Machine Translation: Services like Google Translate use neural machine translation models to generate text in a target language from text in a source language, improving the accuracy and fluency of translations.

Text Summarization

- Extractive Summarization: AI builds a summary by selecting key sentences or phrases directly from the source text.

- Abstractive Summarization: AI creates a concise and coherent summary by understanding the context and generating new sentences, which can be more natural and informative.
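Extractive summarization can be illustrated with a simple frequency heuristic. Each sentence is scored by how common its content words are across the whole text, and the top scorers are kept in their original order. This Python sketch assumes a tiny hypothetical stop-word list and regex-based sentence splitting. It shows the idea only; production summarizers typically use learned models.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list.
STOPWORDS = {"a", "an", "and", "are", "is", "of", "the", "to", "was"}


def content_words(text):
    """Lowercase words with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text, num_sentences=1):
    """Return the highest-scoring sentences, kept in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))  # how often each content word appears overall

    def score(sentence):
        tokens = content_words(sentence)
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    top = sorted(sentences, key=score, reverse=True)[:num_sentences]
    return " ".join(s for s in sentences if s in top)


text = ("Generative models create new data. GANs and VAEs are generative models. "
        "The weather was pleasant.")
print(extractive_summary(text))  # GANs and VAEs are generative models.
```

The output only reuses sentences that already exist in the input. That is the key difference from abstractive summarization, which writes new sentences.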

Text Completion and Autocompletion

- Predictive Text Input: Generative AI assists in completing sentences or phrases in real-time, such as in email composition or coding environments (e.g., Gmail Smart Compose, GitHub Copilot).

Sentiment Analysis and Text Classification

- Generating Labels: AI can generate sentiment labels or categories for text data, enhancing tasks like sentiment analysis, topic modeling, and spam detection.

Question Answering

- Interactive Q&A Systems: Generative AI can generate answers to questions based on large datasets or specific documents, used in search engines and virtual assistants.

Dialogue Systems

- Conversational Interfaces: AI generates multi-turn dialogues, enabling complex interactions in virtual assistants like Siri, Alexa, and Google Assistant.

Text-based Games and Storytelling

- Interactive Fiction: AI can generate dynamic stories and text-based games, providing immersive and personalized experiences.

Code Generation

- Programming Assistance: Models like OpenAI’s Codex generate code snippets or entire programs based on natural language descriptions, aiding software development.

Example Models

- GPT-3 (Generative Pre-trained Transformer 3): A 175-billion-parameter language model from OpenAI that can generate human-like text across a wide range of tasks, often from just a few examples in the prompt.

- T5 (Text-To-Text Transfer Transformer): Converts all NLP tasks into a text-to-text format, enabling a unified approach to different applications.

Benefits

- Efficiency: Automates repetitive and time-consuming tasks, increasing productivity.

- Scalability: Enables large-scale text generation and analysis.

- Personalization: Generates customized content and responses based on user input and context.

Challenges

- Quality Control: Ensuring the generated text is coherent, relevant, and free of biases or errors.

- Ethical Concerns: Addressing issues related to misinformation, plagiarism, and inappropriate content generation.

How can we ensure ethical use of generative AI content?

Ensuring ethical use of generative AI content requires a multifaceted approach that considers transparency, bias, accountability, and potential misuse. Here are some key strategies:

Transparency:

- Disclose the use of AI: Be upfront about the use of generative AI in content creation. This helps users understand the nature of the information they’re consuming.

- Explain the generation process: Provide information about the training data used and the limitations of the model. This fosters trust and avoids misunderstandings.

Bias Mitigation:

- Diverse training data: Train generative AI models on datasets that are inclusive and representative of various demographics and viewpoints. This helps reduce bias in the generated content.

- Bias detection and mitigation methods: Employ techniques to identify and mitigate biases within the model itself. This can involve fairness checks and adjustments to the training process.

Accountability:

- Human oversight: Maintain human oversight throughout the content generation process. Humans can review AI outputs and ensure they are aligned with ethical guidelines.

- Clear ownership: Establish clear ownership of the generated content. This is important for copyright purposes and to determine who is responsible for potential misuse.

Mitigating Misuse:

- Deepfake detection: Implement tools to detect and flag deepfakes and other manipulated content generated by AI.

- Regulation and guidelines: Develop regulations and guidelines for responsible AI development and use. This can help address potential issues like the spread of misinformation.

Additional Considerations:

- Privacy: Be mindful of privacy concerns when using personal data for training generative AI models. Ensure proper data anonymization and user consent practices.

- Safety: Consider the potential safety implications of generated content. For instance, AI-generated text could be used to create hate speech or propaganda.

How can generative AI be used in creative industries?

Generative AI is revolutionizing creative industries by offering a powerful set of tools that can assist and inspire human creativity. Here are some ways generative AI is making waves in different creative fields:

Music Composition:

- Generate new melodies, rhythms, and chord progressions. Composers can use AI to spark ideas, explore new sonic landscapes, or create backing tracks.

- Personalize music for different audiences or applications. AI can tailor music to specific moods, genres, or target demographics.

Visual Arts:

- Generate unique and realistic images or art styles. Artists can use AI to create concept art, explore variations on existing themes, or inspire new artistic directions.

- Automate repetitive tasks like image editing or background generation. This frees up artists to focus on more creative aspects of their work.

Writing and Design:

- Generate story ideas, scripts, or marketing copy. Writers can use AI to overcome writer’s block, brainstorm new concepts, or create different narrative options.

- Design layouts, logos, or user interfaces. AI can help generate initial design ideas or variations on existing themes.

Fashion and Entertainment:

- Design clothing or product mockups. AI can create realistic images of garments or products, allowing for faster prototyping and design exploration.

- Develop special effects or generate realistic environments in films or video games. AI can automate time-consuming tasks and create stunning visuals that enhance storytelling.

Beyond these specific examples, generative AI offers several broader benefits for creative industries:

- Increased Efficiency: AI can automate repetitive tasks, freeing up creative professionals to focus on higher-level aspects of their work.

- Democratized Access: Generative AI tools can make creative exploration more accessible to a wider range of people, regardless of their artistic background or technical skills.

- Inspiration and Innovation: AI can spark new ideas and help creators explore uncharted creative territories.

Future of Generative AI

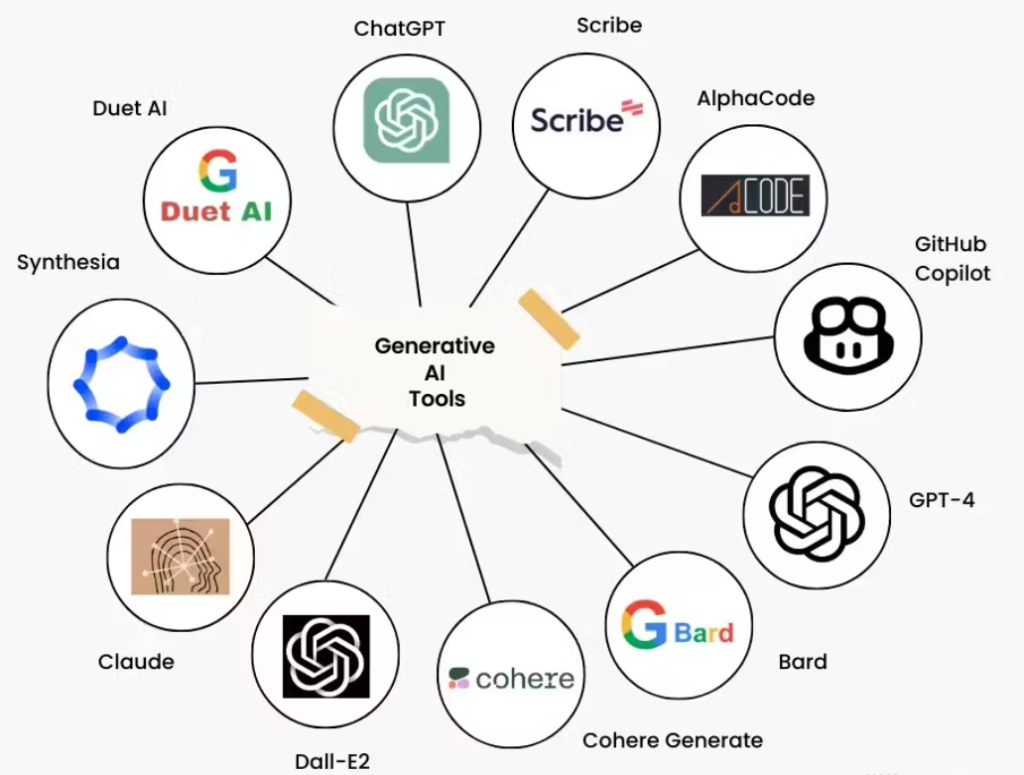

List of Popular Generative AI Applications

Here is a table listing popular generative AI applications along with their descriptions and examples:

| Application | Description | Examples |
|---|---|---|
| Image Generation | Creating realistic images or artwork from scratch | DeepArt, DALL-E, StyleGAN |
| Text Generation | Producing human-like text based on prompts | GPT-3, ChatGPT, Copy.ai |
| Music Composition | Generating original music compositions | OpenAI’s MuseNet, AIVA, Amper Music |
| Language Translation | Translating text from one language to another | Google Translate, DeepL |
| Text Summarization | Condensing long documents into shorter summaries | OpenAI’s GPT-3, Google AI summarization tools |
| Chatbots and Conversational Agents | Engaging in human-like conversation with users | ChatGPT, Microsoft Xiaoice |
| Content Creation | Writing articles, blog posts, and other content | Jasper (formerly Jarvis), Writesonic |
| Data Augmentation | Enhancing datasets by generating additional data samples | Augmentor, GAN-based data augmentation tools |
| Code Generation | Writing code based on natural language descriptions | GitHub Copilot, OpenAI Codex |
| Game Development | Creating game assets, levels, and narratives | AI Dungeon, Procedural content generation tools |
| Deepfakes | Generating realistic videos by swapping faces | DeepFaceLab, Zao |
| Speech Synthesis | Generating human-like speech from text | Google WaveNet, Amazon Polly |
| Video Generation | Creating realistic videos from text or other inputs | Runway ML, Synthesia |
| Drug Discovery | Generating potential drug molecules | Atomwise, Insilico Medicine |
| 3D Object Generation | Creating 3D models from 2D images or text descriptions | NVIDIA’s GauGAN, 3D GA |

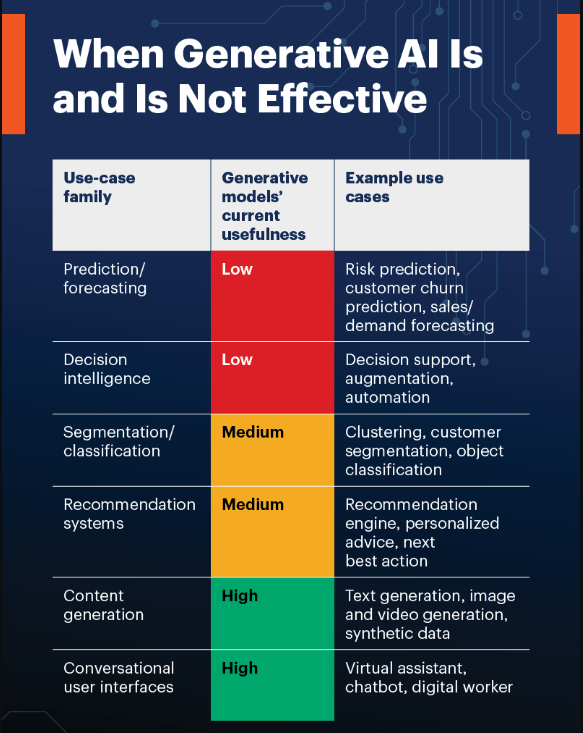

When Generative AI is not Effective

I’m a DevOps/SRE/DevSecOps/Cloud Expert passionate about sharing knowledge and experiences. I work at Cotocus. I blog tech insights at DevOps School, travel stories at Holiday Landmark, stock market tips at Stocks Mantra, health and fitness guidance at My Medic Plus, product reviews at I reviewed, and SEO strategies at Wizbrand.


Please find my social handles as below;

Rajesh Kumar Personal Website

Rajesh Kumar at YOUTUBE

Rajesh Kumar at INSTAGRAM

Rajesh Kumar at X

Rajesh Kumar at FACEBOOK

Rajesh Kumar at LINKEDIN

Rajesh Kumar at PINTEREST

Rajesh Kumar at QUORA

Rajesh Kumar at WIZBRAND