What is scikit-learn?

Scikit-learn, also known as sklearn, is a popular open-source machine learning library for the Python programming language. It provides a wide range of algorithms behind a consistent interface, which makes it easy to train and evaluate models. Scikit-learn is built on top of NumPy and SciPy, and it works well alongside other scientific Python libraries such as Matplotlib and pandas for visualization and data handling.

Top 10 use cases of scikit-learn:

- Classification: Scikit-learn offers a variety of algorithms for classification tasks, such as support vector machines, decision trees, random forests, and k-nearest neighbors. It’s widely used for tasks like spam email detection, sentiment analysis, and image classification.

- Regression: Scikit-learn provides tools for performing various types of regression analysis, including linear regression, ridge regression, and Lasso regression. These are used for tasks like predicting house prices, stock market trends, and other continuous outcomes.

- Clustering: Clustering algorithms in scikit-learn, like k-means and hierarchical clustering, allow you to group similar data points together. It’s used in customer segmentation, image segmentation, and recommendation systems.

- Dimensionality Reduction: Techniques like Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) help reduce the dimensionality of datasets, making it easier to visualize and analyze complex data.

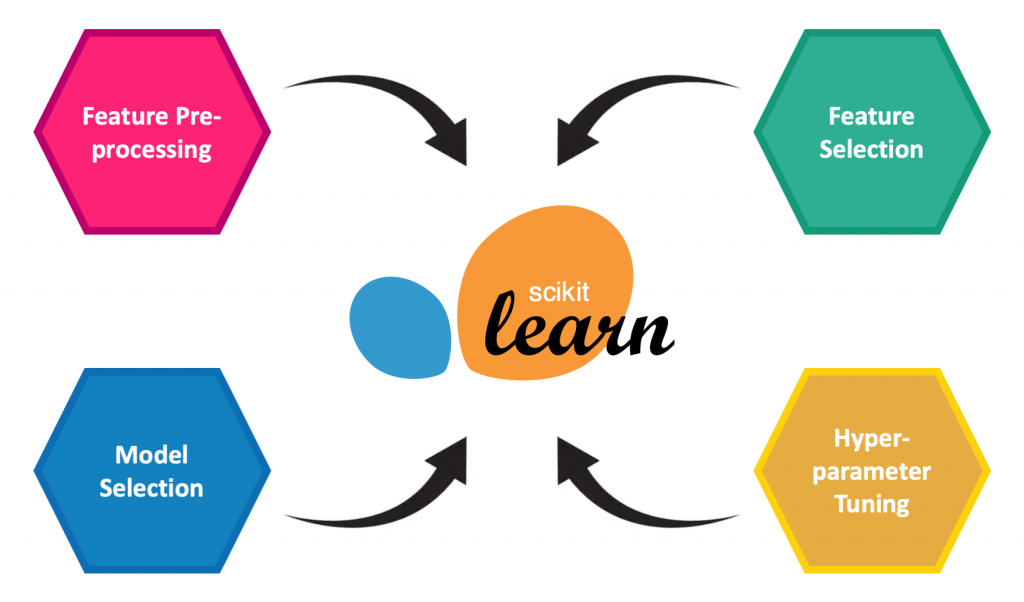

- Feature Selection: Scikit-learn provides methods to identify and select the most relevant features from a dataset, improving model performance and reducing overfitting.

- Model Selection and Evaluation: It offers tools for evaluating and comparing different machine learning models using cross-validation, and for tuning hyperparameters with methods such as grid search.

- Natural Language Processing (NLP): Scikit-learn can be used for text analysis and NLP tasks, including text classification, sentiment analysis, and topic modeling.

- Anomaly Detection: Anomaly detection algorithms in scikit-learn help identify rare and unusual data points, which can be useful for fraud detection, network security, and quality control.

- Ensemble Methods: Scikit-learn supports ensemble techniques like random forests, gradient boosting, and AdaBoost, which combine multiple models to improve overall predictive performance.

- Preprocessing and Pipelines: Scikit-learn provides tools for data preprocessing, such as scaling, encoding categorical variables, and handling missing values. It also supports building data processing pipelines that can streamline the entire machine learning workflow.

These are just a few examples of the many use cases of scikit-learn. Its versatility and comprehensive set of functionalities make it an essential tool for machine learning practitioners and researchers.
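As an illustration, two of the use cases above (dimensionality reduction and clustering) can be sketched on the built-in Iris dataset. This is a minimal sketch rather than a recommended recipe: the choice of 2 components and 3 clusters is an assumption made for the example.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Load the 150 x 4 Iris feature matrix. The labels are ignored here
# because PCA and k-means are unsupervised.
X = load_iris().data

# Standardize so every feature contributes on a comparable scale.
X_scaled = StandardScaler().fit_transform(X)

# Dimensionality reduction: project the 4 features onto 2 principal components.
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X_scaled)

# Clustering: group the samples into 3 clusters (an assumption chosen
# to match the number of Iris species).
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
cluster_labels = kmeans.fit_predict(X_scaled)

print("Reduced shape:", X_2d.shape)
print("Variance kept by 2 components:", pca.explained_variance_ratio_.sum())
print("Cluster sizes:", [int((cluster_labels == k).sum()) for k in range(3)])
```

The two-column array X_2d is what you would typically pass to a plotting library to visualize the data.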

What are the features of scikit-learn?

Scikit-learn offers a wide range of features that make it a popular choice for machine learning tasks:

- Simple and Consistent API: Scikit-learn provides a consistent and easy-to-use API for various algorithms, making it user-friendly and accessible to both beginners and experienced machine learning practitioners.

- Comprehensive Documentation: The library comes with extensive documentation, tutorials, and examples, which helps users understand and implement machine learning concepts effectively.

- Variety of Algorithms: Scikit-learn supports a diverse set of machine learning algorithms for classification, regression, clustering, dimensionality reduction, and more. It includes both traditional and modern algorithms.

- Integration with Other Libraries: It seamlessly integrates with other scientific Python libraries, such as NumPy, SciPy, and Matplotlib, enhancing its capabilities for data manipulation, analysis, and visualization.

- Data Preprocessing: Scikit-learn provides tools for data preprocessing, including scaling, encoding categorical variables, handling missing values, and more. This ensures that data is in a suitable format for machine learning algorithms.

- Model Selection and Evaluation: The library offers utilities for model evaluation, including metrics, cross-validation, and techniques for hyperparameter tuning. This helps users select the best model and optimize its performance.

- Ensemble Methods: Scikit-learn supports ensemble techniques like random forests, gradient boosting, and bagging, allowing users to create powerful models by combining multiple weaker models.

- Feature Selection and Extraction: It includes tools for selecting relevant features from data and performing dimensionality reduction using techniques like PCA and t-SNE.

- Integration with Pipelines: Scikit-learn supports building data processing pipelines that encapsulate data preprocessing, feature extraction, and model training. This helps streamline the entire machine learning workflow.

- Open-Source and Active Community: Being an open-source project, scikit-learn benefits from a vibrant community of contributors and users who continually improve and expand its functionalities.
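The consistent API mentioned in the first point can be shown with a short sketch: three different classifiers are evaluated by the same loop, because each one exposes the same fit and predict interface. The particular models and the hyperparameter values below are assumptions picked for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# Three unrelated algorithms that share the same estimator interface.
models = {
    "logistic regression": LogisticRegression(max_iter=1000),
    "k-nearest neighbors": KNeighborsClassifier(n_neighbors=5),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

scores = {}
for name, model in models.items():
    # cross_val_score fits a fresh copy of the model on each of the 5 folds
    # and returns one accuracy value per fold.
    fold_scores = cross_val_score(model, X, y, cv=5)
    scores[name] = fold_scores.mean()
    print(f"{name}: mean accuracy = {scores[name]:.3f}")
```

Swapping in another classifier only requires adding one entry to the dictionary; the evaluation code stays unchanged.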

How scikit-learn Works and Its Architecture

Scikit-learn is designed to be modular, with consistent interfaces for various algorithms, making it easy to experiment and switch between different techniques. Here’s a simplified overview of how scikit-learn works:

- Data Preparation: The input data is prepared in a suitable format, often in the form of NumPy arrays or pandas DataFrames. Data preprocessing steps like scaling, normalization, and handling missing values are applied if needed.

- Algorithm Selection: You choose the appropriate algorithm for your task, whether it’s classification, regression, clustering, etc.

- Model Initialization: You initialize the selected algorithm with the desired parameters and create an instance of the model.

- Model Training: You provide the training data to the model, which learns the underlying patterns from the data. The model’s parameters are adjusted during training to minimize the error or loss function.

- Model Evaluation: You evaluate the trained model’s performance using evaluation metrics, cross-validation, or other techniques to ensure that it generalizes well to unseen data.

- Prediction: Once the model is trained and evaluated, you can use it to make predictions on new, unseen data.

- Tuning and Optimization: You can fine-tune the model’s hyperparameters using techniques like grid search or random search to improve its performance.

- Ensemble and Pipelines: Scikit-learn allows you to combine models using ensemble techniques and create processing pipelines to ensure consistent data preprocessing and modeling.
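The steps above can be sketched end to end as follows. This is a minimal example under stated assumptions: the Iris dataset, a support vector classifier, and the small parameter grid are illustrative choices, not the only way to follow the workflow.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Data preparation: load the data and hold out a test set.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Algorithm selection and model initialization: scaling followed by an SVC.
# make_pipeline names each step after its lowercased class name.
model = make_pipeline(StandardScaler(), SVC())

# Tuning: try a small, illustrative grid with 5-fold cross-validation.
param_grid = {"svc__C": [0.1, 1, 10], "svc__kernel": ["linear", "rbf"]}
search = GridSearchCV(model, param_grid, cv=5)

# Training: fit every candidate, then refit the best one on all training data.
search.fit(X_train, y_train)

# Evaluation: score the refitted best model on the held-out test set.
test_predictions = search.predict(X_test)
test_accuracy = accuracy_score(y_test, test_predictions)
print("Best parameters:", search.best_params_)
print("Test accuracy:", test_accuracy)

# Prediction: classify one new sample (4 measurements in centimeters).
new_sample = [[5.1, 3.5, 1.4, 0.2]]
predicted_class = search.predict(new_sample)[0]
print("Predicted class index:", predicted_class)
```

Putting the scaler inside the pipeline means it is refitted on each cross-validation training fold, so no information from the validation fold leaks into preprocessing.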

The architecture of scikit-learn follows a modular structure. The core components include:

- Estimators: These are objects that can learn from data. Each estimator in scikit-learn implements a fit method to train the model; estimators that make predictions also implement a predict method.

- Transformers: Transformers are a type of estimator that modifies or transforms the data, such as scaling features or encoding categorical variables.

- Pipelines: Pipelines are sequences of data processing steps (transformers) followed by an estimator. Pipelines help ensure consistent preprocessing and modeling.

- Utilities: Scikit-learn provides utility functions for tasks like model selection, cross-validation, and hyperparameter tuning.

- Datasets: The library includes some standard datasets that are commonly used for testing and experimenting with machine learning algorithms.

Overall, scikit-learn’s architecture emphasizes modularity, ease of use, and consistency, making it a versatile and accessible library for machine learning tasks.
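To make these components concrete, here is a small sketch that defines a custom transformer and chains it with an estimator in a pipeline. MeanCenterer is a hypothetical class written for this example; it is not part of scikit-learn.

```python
import numpy as np
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline


class MeanCenterer(TransformerMixin, BaseEstimator):
    """Hypothetical transformer: subtracts the per-feature mean learned in fit."""

    def fit(self, X, y=None):
        # Learn one mean per column; the trailing underscore marks a fitted attribute.
        self.mean_ = np.asarray(X).mean(axis=0)
        return self

    def transform(self, X):
        # Apply the learned means to any data with the same columns.
        return np.asarray(X) - self.mean_


# Datasets: one of the standard datasets bundled with the library.
X, y = load_iris(return_X_y=True)

# Pipeline: a transformer step followed by a final estimator.
pipeline = Pipeline(
    steps=[
        ("center", MeanCenterer()),
        ("classifier", LogisticRegression(max_iter=1000)),
    ]
)

pipeline.fit(X, y)                    # fits the transformer, then the classifier
predictions = pipeline.predict(X[:5])  # transforms, then predicts

# The fitted transformer can also be used on its own.
centered = pipeline.named_steps["center"].transform(X)
print("Predictions for the first 5 samples:", predictions)
print("Column means after centering:", centered.mean(axis=0).round(6))
```

Because the custom class follows the same fit and transform conventions as the built-in transformers, the pipeline treats it like any other step.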

How to Install scikit-learn?

There are a few ways to install scikit-learn. The following are the steps for each method:

Using pip

- Open a terminal window.

- Check if pip is installed by running the following command:

pip --version

- If pip is not installed, you can install it by running the following command:

python -m ensurepip --upgrade

- Once pip is installed, you can install scikit-learn by running the following command:

pip install scikit-learn

Using Anaconda

- Install Anaconda. Anaconda is a distribution of Python that comes with many pre-installed packages, including scikit-learn.

- Once Anaconda is installed, you can open a terminal window and type the following command to install scikit-learn:

conda install scikit-learn

Building from source

- Download the scikit-learn source code from the scikit-learn website: https://scikit-learn.org/.

- Unzip the source code archive.

- Open a terminal window and navigate to the scikit-learn source code directory.

- Run the following command to build scikit-learn:

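python setup.py install

Recent scikit-learn releases use a Meson-based build and may not include a setup.py file. In that case, run the following command from the same directory instead:

pip install .

Which method should I use?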

The best method to install scikit-learn depends on your operating system and your preferences. If you are not sure which method to use, I recommend using pip.

Here are some additional things to keep in mind when installing scikit-learn:

- You need to have Python 3 installed.

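- You need to have pip installed if you use the pip method.

- If you are installing scikit-learn from source, you need to have the following dependencies installed:

  - NumPy

  - SciPy

  - joblib and threadpoolctl

  - A C/C++ compiler and Cython (to compile the extension modules)

  - Matplotlib (optional; only needed for plotting)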
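Whichever method you use, one way to confirm that the installation worked is to import the library from Python and print its version. This is a quick check, not an exhaustive test of the installation.

```python
import sklearn

# If this import succeeds, scikit-learn is installed in the active environment.
print("scikit-learn version:", sklearn.__version__)

# Optionally print the versions of Python and of the main dependencies,
# which is useful when reporting installation problems.
sklearn.show_versions()
```

If the import fails with ModuleNotFoundError, the package was most likely installed into a different Python environment than the one you are running.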

Basic Tutorials of scikit-learn: Getting Started

The following steps walk through a basic scikit-learn workflow:

- Install scikit-learn. You can follow the instructions in the previous section to install scikit-learn.

- Load a dataset. Scikit-learn comes with a number of built-in datasets, or you can load your own dataset. To load a built-in dataset, you can use the load_iris() function.

from sklearn.datasets import load_iris

iris_data = load_iris()

This will load the Iris dataset, which is a classification dataset with 3 classes and 4 features.

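- Explore the dataset. Once you have loaded the dataset, you can explore it using the head(), describe(), and info() methods. These are pandas DataFrame methods, so load the data as a DataFrame first (this requires pandas to be installed).

# load_iris() returns a Bunch object; as_frame=True exposes a pandas DataFrame
iris_df = load_iris(as_frame=True).frame

print(iris_df.head())

print(iris_df.describe())

# info() prints its summary directly, so it does not need print()
iris_df.info()

This will print the first few rows of the dataset, the descriptive statistics of the features, and the information about the dataset.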

- Split the dataset into training and testing sets. Before you can train a machine learning model, you need to split the dataset into training and testing sets. The training set will be used to train the model, and the testing set will be used to evaluate the model.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(iris_data.data, iris_data.target, test_size=0.35)

This will split the dataset into 65% training data and 35% testing data.

- Choose a machine learning algorithm. There are many different machine learning algorithms available in scikit-learn. The best algorithm to choose depends on the problem you are trying to solve.

For classification problems, you can choose from algorithms such as logistic regression, decision trees, and support vector machines. For regression problems, you can choose from algorithms such as linear regression, ridge regression, and Lasso regression.

- Train the model. Once you have chosen a machine learning algorithm, you need to train the model on the training set.

from sklearn.linear_model import LogisticRegression

logistic_regression = LogisticRegression()

logistic_regression.fit(X_train, y_train)

This will train the logistic regression model on the training set.

- Make predictions. Once the model is trained, you can make predictions on the testing set.

predictions = logistic_regression.predict(X_test)

This will make predictions for the testing set.

- Evaluate the model. Finally, you need to evaluate the model on the testing set. This will tell you how well the model performs on new data.

from sklearn.metrics import accuracy_score

accuracy = accuracy_score(y_test, predictions)

print(accuracy)

This will print the accuracy of the model, which is the fraction of predictions that were correct (a value between 0 and 1).

These are just the basic steps involved in using scikit-learn. There are many other things you can do with scikit-learn, such as feature engineering, hyperparameter tuning, and ensemble learning.
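For reference, the tutorial steps can be combined into one script. The version below is a sketch with a few additions that are not part of the steps above: a fixed random_state and a stratified split (so results are repeatable), a higher max_iter for the solver, and a confusion matrix and per-class report next to the accuracy.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split

# Load the dataset.
iris_data = load_iris()

# Split into 65% training and 35% testing data.
# random_state and stratify are additions made for repeatable, balanced splits.
X_train, X_test, y_train, y_test = train_test_split(
    iris_data.data,
    iris_data.target,
    test_size=0.35,
    random_state=42,
    stratify=iris_data.target,
)

# Train the model. max_iter is raised (an assumption) to give the solver
# more iterations on the unscaled features.
logistic_regression = LogisticRegression(max_iter=1000)
logistic_regression.fit(X_train, y_train)

# Make predictions on the testing set.
predictions = logistic_regression.predict(X_test)

# Evaluate: overall accuracy, then a per-class breakdown.
accuracy = accuracy_score(y_test, predictions)
matrix = confusion_matrix(y_test, predictions)
print("Accuracy:", accuracy)
print("Confusion matrix (rows = true class, columns = predicted class):")
print(matrix)
print(classification_report(y_test, predictions, target_names=iris_data.target_names))
```

The confusion matrix shows which classes are mistaken for each other, which a single accuracy number cannot reveal.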

👤 About the Author

Ashwani is passionate about DevOps, DevSecOps, SRE, MLOps, and AiOps, with a strong drive to simplify and scale modern IT operations. Through continuous learning and sharing, Ashwani helps organizations and engineers adopt best practices for automation, security, reliability, and AI-driven operations.
